Data scientist as scientist

by NIALL CARDIN, OMKAR MURALIDHARAN, and AMIR NAJMI

When working with complex systems or phenomena, the data scientist must often operate with incomplete and provisional understanding, even as she works to advance the state of knowledge. This is very much what scientists do. Our post describes how we arrived at recent changes to design principles for the Google search page, and thus highlights aspects of a data scientist’s role which involve practicing the scientific method.

There has been debate as to whether the term “data science” is necessary. Some don’t see the point. Others argue that attaching the “science” is a clear indication of a “wannabe” (think physics, chemistry, biology as opposed to computer science, social science and even creation science). We’re not going to engage in this debate, but in this blog post we do focus on science: not science pertaining to a presumed discipline of data science, but rather the science of the domain within which a data scientist operates.

In our world, the “fold” in the Google search page was an entrenched quasi-explanation. Newspapers often have a horizontal fold, and content above the fold is much more prominent. It is crucial to show the most enticing content above the fold, since anything below is less likely to be read. From the early days of the internet, conventional wisdom held that the initial viewport (the area above the “fold”) was similarly very important in website design. We thought this would be a particularly important effect on the Google search results page since search users expect to find information quickly. The complexity of this effect (it plausibly depended on established patterns of user attention, result quality, and the parsability of the page) meant it could be blamed for all sorts of strange experimental phenomena.

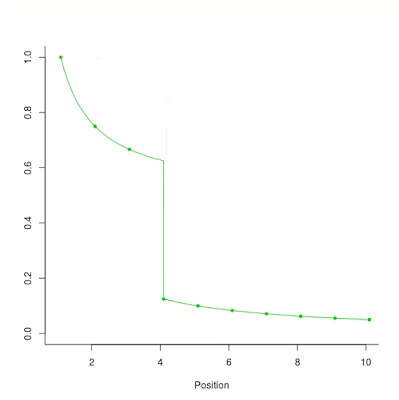

Suppose the fold effect were real. On a given query, as we move from the top of the search result page to the bottom, we should see a sharp drop in attention at the fold. Since CTR is correlated with attention, we should observe a corresponding drop in clicks at the same place. Thus, the fold effect should be visible when we look at how CTR varies by position on the page.

If our findings hadn’t overturned a long-established belief, we might have been content with our extensive experiments. But since the fold had been so important in our previous understanding of how users read the page, we felt we needed a substitute explanation, a “replacement story” as to why there is no fold effect on mobile. Looking at other popular websites suggested such a reason — most of them expect the user to scroll. Maybe all these sites had trained users to scroll?

Had there ever been a fold effect on desktop, and if so, could we detect it?

We don’t exactly know why we detect a small fold effect on desktop devices but not on mobile ones. However, it is intuitive that scrolling on desktop might be harder, particularly if the user is using a scroll bar or the keyboard instead of a touchpad or mouse wheel.

We resurface from our deep dive into the Google search page with the recognition that while we all have data science in common, our domains of application are very different. Each is its own separate reality, rich in detail. Yet despite these differences, we may also share the abstract experience of how facts and beliefs are shaped in our respective communities, and our role in this process. We therefore believe that the data scientist operating in a complex intellectual environment can benefit from seeing herself as a practitioner of the scientific method.

One purpose of data science is to inform actual “business decisions”, or, more precisely, decisions of importance to the team or community served by the data scientist. The beliefs of this community are always evolving, and the process of thoughtfully generating, testing, refuting and accepting ideas looks a lot like Science. We’ll use a significant recent example we encountered to discuss how a data scientist can shape this process.

Imagine your work requires you to understand, quantify and react to a complex phenomenon or system (e.g. the power grid, a streaming music service, the human body, the weather). By definition, it is hard to disentangle causes and effects in these settings because it is rarely possible to isolate all relevant factors. You therefore cannot rely exclusively on rigorously established facts to make sense of your world — you likely also have to use intuition, working hypotheses, previously successful theories, and domain wisdom of varying reliability. It is important to make clear distinctions among each of these, and to advance the state of knowledge through concerted observation, modeling and experimentation. This is very much the work of a scientist. Within their organization, data scientists are often the custodians of this process, since it falls to them to establish what the data says, and because many of them have research backgrounds and hence training in the scientific method (“the scientific method” being a collection of generally accepted practices rather than a single unified approach).

Of course reality is messy and complex phenomena rarely behave exactly the way you expect. Given that you can’t rely exclusively on facts, you must often decide whether to investigate unexpected observations or invoke a plausible explanation and move on. You and your community probably have a few powerful explanations — known mechanisms that can potentially account for nearly any observation and its opposite, at least on the surface. For example, in our field, we can generally blame machine learning feedback (predictions that change the data itself), budget effects (bidders running out of money in repeated auctions) or even the weather (internet usage changes in complicated ways). These quasi-explanations usually involve large, real effects and interactions so complex that arguments based on them are often non-falsifiable. They can become sinks for our discomfort when faced with unexpected observations, and may thus stop us from understanding our domain more deeply. Worse, the community may act on these ambiguous explanations, incurring real costs.

What follows is the story about a quasi-explanation that had very material consequences on our product design. The plot is simple:

- explanation entrenchment

- anomaly

- burden of proof & critical testing

- replacement story

Note also that this account does not involve ambiguity due to statistical uncertainty. As you can see from the tiny confidence intervals on the graphs, big data ensured that measurements, even in the finest slices, were precise.
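To illustrate why large samples make such intervals tiny, here is a minimal sketch using the normal-approximation (Wald) interval for a proportion. It is only an illustration under assumed numbers, not the method used to draw the graphs in this post; the function name `wald_half_width` and the counts are hypothetical.

```python
import math

def wald_half_width(clicks, impressions, z=1.96):
    """Half-width of an approximate 95% interval for a click-through rate.

    Uses the normal approximation p +/- z * sqrt(p * (1 - p) / n).
    """
    p = clicks / impressions
    return z * math.sqrt(p * (1 - p) / impressions)

# Hypothetical counts: same CTR (10%), 100x more impressions.
small_slice = wald_half_width(clicks=100, impressions=1_000)
large_slice = wald_half_width(clicks=10_000, impressions=100_000)

# The half-width shrinks with the square root of the sample size,
# so 100x the data gives an interval about 10x narrower.
print(round(small_slice, 4), round(large_slice, 4))
```

Because the width scales as one over the square root of the number of impressions, even fine slices of a very large traffic log can yield intervals too small to see on a plot.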

The fold in the Google search page

Fig 1: A hypothetical schematic of what the fold effect might look like if properly isolated. The figure shows relative CTR (click-through rate) when the fold lies between positions 3 and 4.

Not only did we use the fold to explain things away, we thought the fold effect was an important consideration for the design of our site. Thus designers made sure to place important content in the initial viewport. But the shift to mobile phones made this constraint increasingly restrictive. Now the most popular interfaces to the internet, phones have much smaller initial viewports. Thus satisfying the fold principle on mobile is difficult, and can lead to cramped designs that are uglier and less usable.

In retrospect, the fold effect was less scientific fact and more folk wisdom within our community of Google Search, but we hadn’t seriously questioned it. In fact, we recently investigated how changing the top of the mobile search page impacted the attention paid to the results below. We noticed a totally unexpected result early in our investigations — user click patterns are remarkably similar on desktop and mobile devices. For example, consider the proportion of clicks on the 4th search result. This proportion is nearly the same on desktop and mobile, despite the vast difference in screen sizes. This seemed incompatible with the major importance of the fold — surely results below the fold on mobile would receive disproportionately less attention and fewer clicks than they would when shown above the fold on desktop?

If we were interpreting this correctly, and there really wasn’t much of a fold effect, our discovery would have large consequences for our page design. But as any data scientist knows, surprising findings in this business are usually false. The burden of proof fell to us and required that we expand on our simple observation.

It took us a while to turn the vague hypothesis that there is no fold effect into precise, testable statements. After considering and rejecting many candidates, like user studies or eye tracking, we eventually settled on two testable expressions of the “no fold” hypothesis:

- If the screen were longer or shorter, we would observe no difference in CTR profile

- If we moved a result below the fold, there would be no “sudden” drop

Observational study

However, properly interpreting CTR data is tricky. Even without a fold effect, we would expect higher results to be more prominent than lower ones, simply because users tend to read the page from top to bottom. Furthermore, Google’s search ranking puts the most promising results at the top of the page, strengthening this effect. Another concern is that the Google results page sometimes contains visual elements, such as images, that may create sharp changes in user attention.

To roughly control for this effect, we compared click patterns on devices of different height. This isn’t perfect, of course, since users of these different devices will be different. For example, device usage varies by country and that in turn produces other confounding factors, such as language, which could create differences in click patterns unrelated to the fold effect we are looking for. To account for this, we sliced the data by country; we also restricted to pages without images or top ads, to get an intuition for behavior in less complex cases. Finally, to limit the effects of different user populations (and avoid speculation as to how iPhone users differ from Android users) we only studied iPhones of differing size. These represent a large fraction of traffic with a small number of known device sizes. In contrast, Android phones have much greater variety which could smooth out a potential fold effect across multiple positions.

The plot below shows how CTR varies by search position on different iPhones (and the ample Samsung Galaxy thrown in for comparison). With the filtering above, an iPhone 4 shows nearly 2 search results on the initial viewport, an iPhone 5 can completely fit 2, and an iPhone 6 can fit 3. If the fold effect were real, we should see sharp drops in CTR at the location of the fold on each device. Instead, the graph shows a smooth decay in CTR, with no sharp drops. The rate of decay is similar across devices as well, despite their different sizes.

Fig 2: x-axis: position of the search result (1-indexed); y-axis: click-through rate (CTR).

This is purely observational data, so we cannot use it to make causal claims. But the very simplicity of the study makes it more believable. It is unlikely that the various confounding factors would hide a sharp drop in clicks in all of these devices, or that they would serve to hide large underlying differences in the rate of click decay.
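The per-device CTR curves described above can be sketched as a simple aggregation. This is a minimal illustration, assuming a hypothetical log of `(device, position, clicked)` records; the schema, the function name `ctr_by_position`, and the toy data are assumptions, not the pipeline used for this post.

```python
from collections import defaultdict

def ctr_by_position(impressions):
    """Compute CTR for each (device, position) pair.

    `impressions` is an iterable of (device, position, clicked) tuples,
    one per result shown to a user (hypothetical schema).
    """
    shown = defaultdict(int)
    clicks = defaultdict(int)
    for device, position, clicked in impressions:
        shown[(device, position)] += 1
        clicks[(device, position)] += int(clicked)
    return {key: clicks[key] / shown[key] for key in shown}

# Toy records, invented purely for illustration.
log = [
    ("iphone5", 1, True), ("iphone5", 1, False),
    ("iphone5", 2, True), ("iphone5", 2, False),
    ("iphone5", 2, False), ("iphone5", 2, False),
    ("iphone6", 1, True), ("iphone6", 1, False),
]
ctr = ctr_by_position(log)
print(ctr[("iphone5", 1)], ctr[("iphone5", 2)])  # 0.5 0.25
```

Plotting these values by position, one curve per device, gives the kind of chart in which a fold effect would show up as a sharp drop at a device-specific position.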

Alongside click behavior we can look at how long users take to get to each result on the page. We plot the geometric mean of the users’ time to first click (TTFC) for each search result.

Fig 3: x-axis: position of the search result (1-indexed); y-axis: geometric mean of the time to first click (TTFC).

As expected, users take longer to click on results further down the page. However, we see no sharp change anywhere along these curves, as we might if being below the fold represented a substantial barrier to users.

Randomized experiment

Motivated by the observational study, we performed randomized experiments where we showed some users additional content above the search results. This additional content sometimes pushes the first search result below the fold.

Here is the effect on CTR, focusing on queries where we move results below the fold. The blue line represents mobile devices where the first search result was mostly hidden in the initial viewport. The green and peach lines show metrics for users of the same device, on experiments that left the result fully visible, or moved it up by about the same amount.

If the fold effect were large, we would see that pushing a result below the fold would be very different from moving it down while remaining above or below the fold boundary. So, for example, we should expect a much bigger fractional drop in CTR on the first result on the blue line (moves below the fold) than on the third result (moves down the same amount, but is below the fold on all lines). Instead, we see that the relative change in CTR is very similar.

These smooth curves and effects tell us that there is no sharp drop in attention at the fold. The initial viewport is more prominent because it is higher up the page, but pushing a result below the fold doesn’t lead to a dramatic drop in prominence.
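The comparison of fractional CTR drops described above can be sketched as follows. This is an illustration under assumed numbers: the function name `relative_ctr_change` and the CTR values are hypothetical, chosen to mimic the "no fold" pattern in which positions 1 and 3 lose about the same fraction of clicks.

```python
def relative_ctr_change(control, treatment):
    """Fractional change in CTR per position, treatment vs. control.

    Both arguments map result position -> CTR (hypothetical inputs).
    """
    return {
        pos: (treatment[pos] - control[pos]) / control[pos]
        for pos in control
        if pos in treatment
    }

# Hypothetical arms: treatment adds content above the results,
# pushing position 1 below the fold; position 3 is below the fold in both.
control = {1: 0.30, 3: 0.10}
treatment = {1: 0.24, 3: 0.08}

change = relative_ctr_change(control, treatment)
for pos in sorted(change):
    print(pos, f"{change[pos]:+.0%}")
```

Under a large fold effect, the fractional drop at position 1 would be much bigger than at position 3; similar values at both positions are what the "no fold" hypothesis predicts.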

At this point, we started to get excited, and allowed ourselves to start believing our surprising findings. But given that this would lead to a major redesign of the mobile search page, we needed additional due diligence. We ran a bunch more experiments with different devices and content manipulations. We sliced and diced the experimental data in many, many ways. Our results held up, and we could confidently rule out any practically significant fold effect.

A replacement story

To verify this, we conducted a study to see how often users scrolled. The mechanism and code path for this data were completely different from our click-based measurements. This data was also lower fidelity (lossy transmission) and would not permit fine-grained conclusions. Nevertheless, we found it valuable to understand what was going on from a different perspective. We learned that users scroll more on mobile devices than on desktop on average, and even more on smaller mobile devices than on larger mobile devices. This coarse observational result supported our replacement story.

Was there ever a fold?

We analyzed desktop data in the same way, and found that indeed we could detect a small fold effect about where it should be. The graph below shows how CTR varies by position for browsers of different height. It drops steadily at a decreasing rate, but then drops more sharply at the rough location of the fold for each browser size.

Fig 5: x-axis: position of the search result (1-indexed); y-axis: click-through rate (CTR).

Fig 6: x-axis: position of the search result (1-indexed); y-axis: log10(CTR[i] / CTR[i-1]).

It was reassuring that we could detect a desktop fold effect. However, the effect was surprisingly small and explained why the original CTR curves looked so similar between desktop and mobile. It is possible that the fold effect on desktop has diminished over years, perhaps because trackpads and trackballs have made scrolling on desktops easier. But we did not pursue such an involved historical analysis — we felt we had sufficiently established “no fold on mobile” as a scientific fact in our domain.
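The quantity on the y-axis of Figure 6, log10(CTR[i] / CTR[i-1]), can be sketched in a few lines. The helper names and the toy CTR curve are hypothetical. On this transformed scale a steady proportional decay appears as a flat line, so a fold shows up as a dip at one position.

```python
import math

def log_ctr_ratios(ctr):
    """Return log10(ctr[i] / ctr[i-1]) for positions 2..n.

    `ctr` lists the click-through rate at positions 1..n.
    """
    return [math.log10(cur / prev) for prev, cur in zip(ctr, ctr[1:])]

def sharpest_drop_position(ctr):
    """1-indexed position with the most negative log ratio."""
    ratios = log_ctr_ratios(ctr)
    return ratios.index(min(ratios)) + 2  # ratios[0] belongs to position 2

# Toy curve: CTR halves at each step, then falls to a quarter at position 4.
toy_ctr = [0.4, 0.2, 0.1, 0.025]
print(log_ctr_ratios(toy_ctr))
print(sharpest_drop_position(toy_ctr))  # 4
```

In real data the decay rate is not constant, so a dip would have to be judged against the smooth trend of neighboring positions rather than by taking the minimum alone.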

What causes the desktop fold?

When users scroll we can infer what method they used. When they don’t, we cannot tell, so slicing by directly inferred scroll type produces a selection bias. To overcome this we looked for features that correlate with types of scroll behavior. We found that when users on Windows devices scroll, they do so about 66% of the time using a mouse wheel or touch pad. This fraction is higher, about 81%, on Apple devices. In contrast, users scroll for 14% of queries issued on Windows and just 3% on Apple.

Fig 7: x-axis: position of the search result (1-indexed); y-axis: log10(CTR[i] / CTR[i-1]).

Intuitively, the scroll bar seems less convenient, as users must move their mouse to the narrow bar before scrolling. If this were true, we should expect Windows and Apple users to have different CTR falloffs, driven by the bigger fraction of Windows users who use the scroll bar. We see a small effect in this direction in the data. Figure 7 shows the same plot as Figure 6, focusing on the comparison between Windows and Apple desktop devices. For each browser height category, the fold effect (the dip in the middle) seems slightly sharper on Windows devices. This is consistent with the idea that the average difficulty of scrolling might be higher for the mix of approaches used on Windows devices.

Conclusion

This post touches on a number of aspects of the making and breaking of knowledge. We saw how the falsifiability of claims and testability of hypotheses are as essential to progress as new data. Yet even within a data-driven culture, there may be entrenched non-falsifiable beliefs serving as stock explanations for a range of unexpected observations. By necessity, the burden of proof falls on the investigation which overturns a widely held belief. Such an investigation is more readily accepted if it provides a replacement paradigm. And finally, in the fast-changing world of the internet, we need to keep reproducing important facts and retesting our key beliefs. Well, maybe reproducibility applies just as much to traditional scientists as it does to us data scientists.
