OpenAI Tried to Train AI Agents to Play Hide-And-Seek but Instead They Were Shocked by What They Learned

OpenAI trained agents in a simple game of hide-and-seek, and the agents learned many other skills in the process.

Competition is one of the socio-economic dynamics that has influenced our evolution as a species. The vast complexity and diversity of life on Earth evolved through co-evolution and competition between organisms, directed by natural selection. By competing against another party, we are constantly forced to improve our knowledge and skills in a specific area. Recent developments in artificial intelligence (AI) have started to leverage some of the principles of competition to influence learning behaviors in AI agents. Specifically, the field of multi-agent reinforcement learning (MARL) has been heavily influenced by competitive and game-theoretic dynamics. Recently, researchers from OpenAI trained a group of AI agents in a simple game of hide-and-seek and were shocked by some of the behaviors the agents developed organically. The results were captured in a fascinating research paper that was just published.

Learning by competition is one of the emerging paradigms in AI that faithfully resembles how our knowledge evolves as a species. From the time we are babies, we develop new knowledge by exploring our surroundings and interacting with other people, sometimes collaboratively and sometimes competitively. That dynamic contrasts with the way we architect AI systems today. While supervised learning remains the dominant paradigm in AI, it is relatively impractical to apply to many real-world situations. This is even more pronounced in settings in which agents need to interact with physical objects in a relatively unknown environment. In those settings, it is more natural for agents to constantly collaborate and/or compete with one another, developing new knowledge organically.

Multi-Agent Autocurriculum and Emergent Behavior

One of the side effects of learning by competition is that agents develop behaviors that are unexpected. In AI theory, this is known as agent autocurricula, and it offers a front-row seat to observing how knowledge develops. Imagine that you are training an AI agent to master a specific game and, suddenly, the agent finds a strategy that has never been tested before. While the autocurricula phenomenon also occurs in single-agent reinforcement learning systems, it is even more impressive when it develops through competition, which is what is known as multi-agent autocurriculum.

In a competitive multi-agent AI environment, the different agents compete against each other in order to evaluate specific strategies. When a new successful strategy or mutation emerges, it changes the implicit task distribution that neighboring agents need to solve and creates new pressure for adaptation. These evolutionary arms races create implicit autocurricula whereby competing agents continually create new tasks for each other. A key element of multi-agent autocurriculum is that the emergent behavior learned by the agents evolves organically and is not the result of pre-built incentive mechanisms. Not surprisingly, multi-agent autocurricula have been one of the most successful techniques when it comes to training AI agents in multi-player games.

Training Agents in Hide and Seek

The initial OpenAI experiments aimed to train a series of reinforcement learning agents to master the game of hide-and-seek. In the target setting, agents are tasked with competing in a two-team hide-and-seek game in a physics-based environment. The hiders are tasked with avoiding the seekers' line of sight, and the seekers are tasked with keeping the hiders in view. There are objects scattered throughout the environment that the agents can grab and also lock in place. There are also randomly generated immovable rooms and walls that the agents must learn to navigate. The OpenAI environment contains no explicit incentives for agents to interact with objects. Agents are given a team-based reward: hiders are given a reward of +1 if all hiders are hidden and -1 if any hider is seen by a seeker. Seekers are given the opposite reward, -1 if all hiders are hidden and +1 otherwise. To confine agent behavior to a reasonable space, agents are penalized if they go too far outside the play area. During the preparation phase at the start of each episode, in which the seekers are held in place so that the hiders can run away or rearrange the environment, all agents are given zero reward.
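The reward rules described above can be written down in a few lines. The following is a minimal sketch, not OpenAI's implementation: the function names, the `hider_seen` list, and the `boundary_penalty` parameter are assumptions made for illustration. In particular, the article does not state how large the out-of-bounds penalty is, so the default value below is only a placeholder.

```python
from typing import List, Tuple


def team_rewards(hider_seen: List[bool], in_preparation: bool) -> Tuple[float, float]:
    """Return (hider_team_reward, seeker_team_reward) for one timestep.

    hider_seen[i] is True when hider i is currently visible to any seeker
    (hypothetical input; the real environment computes visibility itself).
    """
    if in_preparation:
        # During the preparation phase, all agents receive zero reward.
        return 0.0, 0.0
    # Hiders get +1 only if every hider is hidden, -1 if any hider is seen.
    hider_reward = -1.0 if any(hider_seen) else 1.0
    # Seekers receive the opposite reward.
    return hider_reward, -hider_reward


def agent_reward(team_reward: float, out_of_bounds: bool,
                 boundary_penalty: float = 1.0) -> float:
    """Apply the out-of-bounds penalty to a single agent's team reward.

    boundary_penalty is a placeholder magnitude, not a value from the article.
    """
    return team_reward - boundary_penalty if out_of_bounds else team_reward


if __name__ == "__main__":
    print(team_rewards([False, False], in_preparation=False))
    print(team_rewards([False, True], in_preparation=False))
    print(team_rewards([True, True], in_preparation=True))
```

Note that the reward is shared by the whole team: a single visible hider is enough to flip the sign for every hider and every seeker, which is what pushes teammates to coordinate.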

To train the hide-and-seek agents, OpenAI researchers leveraged the training infrastructure that was used in other projects such as OpenAI Five and Dactyl. Agents are trained using self-play, which acts as a natural curriculum because agents always play opponents of an appropriate level. Each agent's policy is composed of two separate networks with different parameters: a policy network, which produces an action distribution, and a critic network, which predicts the discounted future returns. Each object is embedded and then passed through a masked residual self-attention block, similar to those used in transformers, where the attention is over objects instead of over time. Objects that are outside the agent's line of sight or not in front of the agent are masked out, so that the agent has no information about them.
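To make the masking idea concrete, here is a simplified sketch of attention over objects in which hidden objects receive exactly zero weight. It is an illustration under stated assumptions rather than the architecture from the paper: it uses a single query and a single head, omits the learned projections and the residual connection, treats "in front of the agent" as a simple half-plane test, and takes line-of-sight as a precomputed flag. The names `visibility_mask` and `masked_attention` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def visibility_mask(agent_xy: Tuple[float, float],
                    heading_xy: Tuple[float, float],
                    objects_xy: Sequence[Tuple[float, float]],
                    line_of_sight: Sequence[bool]) -> List[bool]:
    """True for objects that are both unoccluded and in front of the agent.

    line_of_sight[i] is assumed to be supplied by the environment; this
    sketch does not compute occlusion by walls or boxes.
    """
    mask = []
    for (ox, oy), clear in zip(objects_xy, line_of_sight):
        dx, dy = ox - agent_xy[0], oy - agent_xy[1]
        # Assumption: "in front" means a positive dot product with the heading.
        in_front = dx * heading_xy[0] + dy * heading_xy[1] > 0.0
        mask.append(clear and in_front)
    return mask


def masked_attention(query: Sequence[float],
                     keys: Sequence[Sequence[float]],
                     values: Sequence[Sequence[float]],
                     mask: Sequence[bool]) -> Tuple[List[float], List[float]]:
    """Scaled dot-product attention of one query over object embeddings.

    Masked-out objects get an attention weight of exactly zero, so nothing
    about them reaches the output.
    """
    dim = len(values[0]) if values else 0
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    visible = [s for s, m in zip(scores, mask) if m]
    if not visible:
        # Nothing is visible: return a zero vector and all-zero weights.
        return [0.0] * dim, [0.0] * len(keys)
    top = max(visible)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    weights = [e / total for e in exps]
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights


if __name__ == "__main__":
    mask = visibility_mask((0.0, 0.0), (1.0, 0.0),
                           [(1.0, 0.0), (-1.0, 0.0), (2.0, 1.0)],
                           [True, True, False])
    print(mask)
```

Because the attention runs over a set of objects rather than a fixed-size grid or a time sequence, the same network can handle a varying number of boxes, ramps, and other agents.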

Autocurriculum and Emergent Behaviors in Hide and Seek

As the AI agents competed against each other in the environment described above, they not only mastered hide-and-seek but also developed as many as six distinct strategies that were not part of the initial incentives.

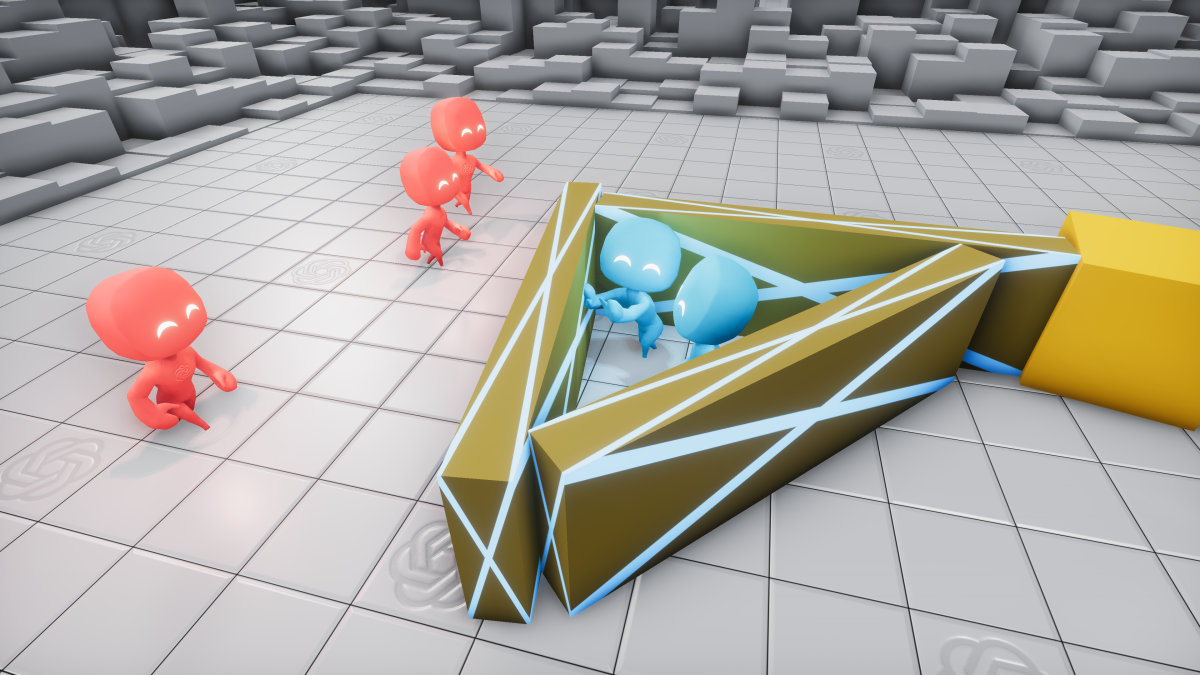

Initially, hiders and seekers learn to crudely run away and chase. After approximately 25 million episodes of hide-and-seek, the hiders learn to use the tools at their disposal and intentionally modify their environment. They begin to construct secure shelters in which to hide by moving many boxes together or against walls and locking them in place. After another 75 million episodes, the seekers also learn rudimentary tool use: they learn to move and use ramps to jump over obstacles, allowing them to enter the hiders’ shelter. Ten million episodes later, the hiders learn to defend against this strategy by bringing the ramps to the edge of the play area and locking them in place, seemingly removing the only tool the seekers have at their disposal. After 380 million total episodes of training, the seekers learn to bring a box to the edge of the play area where the hiders have locked the ramps. The seekers then jump on top of the box and surf it to the hiders’ shelter; this is possible because the environment allows agents to move together with the box regardless of whether they are on the ground or not. In response, the hiders learn to lock all of the boxes in place before building their shelter. The accompanying figure shows some of these emergent behaviors.

The fascinating thing about the emergent behaviors developed by the hide-and-seek agents is that they evolved completely organically as part of the autocurriculum induced by the internal competition. In almost all cases, the performance of the emergent behaviors was superior to that of behaviors learned through intrinsic motivation.

The OpenAI hide-and-seek experiments were absolutely fascinating and a clear demonstration of the potential of multi-agent competitive environments as a catalyst for learning. Many of the OpenAI techniques can be extrapolated to other AI scenarios in which learning by competition seems like a more viable alternative than supervised training.

Original. Reposted with permission.
