3 Reasons Why You Should Use Linear Regression Models Instead of Neural Networks

While there may always seem to be something new, cool, and shiny in the field of AI/ML, classic statistical methods that leverage machine learning techniques remain powerful and practical for solving many real-world business problems.

First, I’m not saying that linear regression is better than deep learning.

Second, if you know that you’re specifically interested in deep learning-related applications like computer vision, image recognition, or speech recognition, this article is probably less relevant to you.

But for everyone else, I want to give my thoughts on why I think that you’re better off learning regression analysis over deep learning. Why? Because time is a limited resource, and how you allocate your time will determine how far you go in your learning journey.

And so, I’m going to give my two cents on why I think you should learn regression analysis before deep learning.

But first, what is regression analysis?

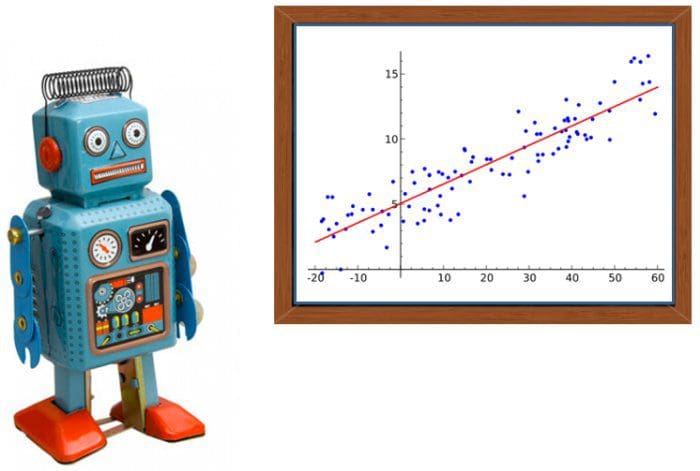

Simply put, the term “regression analysis” is commonly used interchangeably with “linear regression.”

More generally speaking, regression analysis refers to a set of statistical methods that are used to estimate the relationships between dependent and independent variables.

A big misconception, however, is that regression analysis solely refers to linear regression, which is not the case. There are so many statistical techniques within regression analysis that are extremely powerful and useful. This leads me to my first point:

Point #1. Regression analysis is more versatile and has wide applicability.

Linear regression and neural networks are both models that you can use to make predictions given some inputs. But beyond making predictions, regression analysis allows you to do many more things, which include but are not limited to:

- Regression analysis allows you to understand the strength of relationships between variables. Using statistical measurements like R-squared/adjusted R-squared, regression analysis can tell you how much of the total variability in the data is explained by your model.

- Regression analysis tells you which predictors in a model are statistically significant and which are not. In simpler terms, if you give a regression model 50 features, you can find out which features are good predictors for the target variable and which aren’t.

- Regression analysis can give a confidence interval for each regression coefficient that it estimates. Not only do you get a single point estimate of the coefficient for each feature, but you also get a range of values that is expected to contain the true coefficient at a chosen level of confidence (e.g., 99% confidence).

- and much more…
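To make the bullets above concrete, here is a minimal sketch of a one-predictor regression that reports R-squared, a confidence interval for the slope, and a p-value. Everything in it is an assumption for illustration: the data is simulated, the `simple_ols` helper is a hypothetical name, and the interval and p-value use a normal approximation instead of the exact t-distribution so that the example only needs the Python standard library.

```python
import math
import random
from statistics import NormalDist, mean


def simple_ols(x, y, confidence=0.95):
    """Fit y = intercept + slope * x by ordinary least squares (hypothetical helper)."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    # R-squared: share of the total variability in y explained by the model.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot

    # Standard error of the slope, using n - 2 residual degrees of freedom.
    se_slope = math.sqrt(ss_res / (n - 2) / sxx)

    # ASSUMPTION: a normal quantile stands in for the exact t quantile,
    # which is only a reasonable approximation for larger samples.
    normal = NormalDist()
    z = normal.inv_cdf(0.5 + confidence / 2)
    ci = (slope - z * se_slope, slope + z * se_slope)

    # Two-sided p-value for the null hypothesis "slope == 0" (same approximation).
    p_value = 2 * (1 - normal.cdf(abs(slope / se_slope)))

    return {
        "slope": slope,
        "intercept": intercept,
        "r_squared": r_squared,
        "slope_ci": ci,
        "slope_p_value": p_value,
    }


# Simulated data: the true relationship is y = 1 + 2x plus random noise.
rng = random.Random(42)
x = list(range(1, 31))
y = [1.0 + 2.0 * xi + rng.gauss(0, 1) for xi in x]

result = simple_ols(x, y)
for name, value in result.items():
    print(name, value)
```

With a small sample you would want the exact t-distribution rather than this approximation. Statistical packages such as statsmodels report these same quantities in their regression summaries, so in practice you rarely compute them by hand.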

My point is that there are a bunch of statistical techniques within regression analysis that allow you to answer many more questions than just “Can we predict Y given X(s)?”

Point #2. Regression Analysis is less of a black box and is easier to communicate.

Two important factors that I always consider when choosing a model are how simple and how interpretable it is.

Why?

A simpler model means it’s easier to communicate how the model itself works and how to interpret the results of a model.

For example, most business users will likely understand least squares (i.e., finding the line of best fit) much faster than backpropagation. This matters because businesses care about how the underlying logic in a model works. Nothing is worse in a business than uncertainty, and a black box is a great synonym for that.

Ultimately, it’s important to understand how the numbers from a model are derived and how they can be interpreted.

Point #3. Learning Regression Analysis will give you a better understanding of statistical inference overall.

Believe it or not, learning regression analysis made me a better coder (Python and R) and a better statistician, and it gave me a better understanding of building models overall.

To excite you a little bit more, regression analysis helped me learn the following (not limited to this):

- Building simple and multiple regression models.

- Conducting residual analysis and applying transformations like Box-Cox.

- Calculating confidence intervals for regression coefficients and residuals.

- Determining the statistical significance of models and regression coefficients through hypothesis testing.

- Evaluating models using R-squared, MSPE, MAE, MAPE, PM, and the list goes on…

- Identifying multicollinearity with variance inflation factor (VIF).

- Comparing different regression models using the partial F-test.

This is just a fraction of the things that I’ve learned, and I’ve barely scratched the surface. So if you think that this sounds like a neat gig, I urge you to check it out and at least see what you can learn.

How can you learn Regression Analysis?

Recently, I’ve found that the best way to learn a new topic is to find course lectures or course notes from a college/university. It’s incredible how much stuff is available on the web for free.

In particular, I’ll leave you with two great resources that you can use to get started:

Penn State | STAT 501 Regression Methods

Terence Shin is a data enthusiast with 3+ years of experience in SQL and 2+ years of experience in Python, and a blogger on Towards Data Science and KDnuggets.

Original. Reposted with permission.