A Data Science Leader’s Guide to Managing Stakeholders

Overview

- Managing the various stakeholders in a data science project is a must-have aspect for a leader

- Delivering an end-to-end data science project is about much more than just machine learning models

- We will discuss the various stakeholders involved in a typical data science project and address various challenges you might face

Introduction

Managing stakeholders in the world of data science projects is a tricky prospect. I have seen a lot of executives and professionals get swept up in the hype around data science without properly understanding what a full-blown project entails.

And I don’t say this lightly – my career has been at the very cusp of machine learning and delivery. I hold a Ph.D. in Data Science and Machine Learning from one of the best institutions in the world and have several years of experience working with some of the top industry research labs.

I moved to Yodlee, a FinTech organization, in 2016 to run the data sciences product delivery division. The 18 months that it took me and my team to deliver the first truly data-driven solution were full of learnings. Learnings that no industry research would have taught me.

These learnings have made me appreciate the immensely non-trivial efforts that go into converting a ‘research prototype’ into a finished data-driven product that can be monetized. Here are a couple of my key learnings:

- Mathematically speaking, the most important learning from this experience is: A high quality data science solution, while being a necessary condition, is a million miles from being the sufficient condition for the end product’s success and customer delight

- The AI component needs to be in complete synergy with the rest of the components of the end-to-end system for the business problem to be solved successfully

I have penned down my experience and learning in a series of articles that will help other data science thought leaders and managers understand the scale of the task at hand.

What we Plan to Cover in this Series

In this four-article series, I will share some of these learnings in the hope that others benefit from our findings.

- In this first article, we will discuss the key stakeholders who need to be aligned for a data-driven product roadmap for products. We will also discuss some of the ways in which we may align stakeholders to broader organizational goals

- We will then talk about a framework for translating the business requirements in the second article. This framework will help you transform broad and qualitative requirements into tangible and quantitative data-driven problems. The machine learning component is often just one component of the complete solution

- The third article will cover the various practical considerations that need to be accounted for so that the machine learning component interacts synergistically with the rest of the solution

- The final article will be about various post-production monitoring and maintenance related aspects that need special attention so that the performance of the solution doesn’t deteriorate over time

I request you to share your learnings, be it similar or opposite to ours, in the comments section. You can read articles 2 and 3 of this series here:

- Article #2: How can you Convert a Business Problem into a Data Problem? A Successful Data Science Leader’s Guide

- Article #3: 4 Key Aspects of a Data Science Project Every Data Scientist and Leader Should Know

Let’s begin with part one!

Table of Contents

- The Sachin-Tendulkar-Expectation Syndrome

- Understanding the Stakeholder

- Customer Expectations from Data-Driven Solutions are ‘Fluid’

- Address the Illusion of “100% Accuracy”

- Success of AI Solutions needs a Paradigm Shift in Product Strategy and Execution

The Sachin-Tendulkar-Expectation Syndrome

Just about everybody believes that data-science/AI/machine learning is the panacea that will instantly and permanently solve every problem that plagues (or is perceived to plague) their organization. This isirrespective of where they are in the corporate hierarchy and irrespective of the degree of their interaction with the delivery group,

If you think this is an exaggeration, a cursory web-search for quotes on AI by various corporations or the number of organizations with “ai” in their URL should convince you!

I call this the Sachin-Tendulkar-Expectation-Syndrome (STES). Sachin Tendulkar is one of the best cricketers the world has seen. In the late 1990s and the early 2000s, the crowd expected Sachin to hit a century in every match he played.

This kind of expectation virtually guaranteed that he would rarely “wow” the crowd. If he did manage to hit a century, it was “met expectations” and if he didn’t then that was a “huge letdown”.

A data science delivery leader is thus tasked with the dual responsibility of:

- Delivering a practical solution that meets a reasonable business requirement, and

- Managing the disproportionate expectations of the various stakeholders

In this article, I will talk about who the main stakeholders are and share my attempts at working with them.

Understanding the Stakeholder

Broadly speaking, there are three main stakeholders. Each stakeholder has priorities which can, at times, be orthogonal to those of the others. As the data-science-delivery-leader, the first responsibility is to manage all these three stakeholders and ensure that they see the perspective of the other two while keeping the end-user of the solution at the centre of the picture. Only then can an optimal and practical solution emerge.

The three stakeholders are:

- The customer-facing team

- The executive team

- The data science team

While the first two are typically outside of the delivery leader’s immediate control, the third one is within and that makes it that much more complicated.

Customer Expectations from Data-Driven Products are ‘Fluid’

The customer-facing teams have a first-hand view of customers’ perceptions of the existing product as well as what the competition is offering. They can thus be a great litmus test to establish the “superiority” of the new and improved data-driven solution.

This is easier said than done. The customer-facing teams are always torn in the dilemmas of “don’t fix if it ain’t broke” vs. “usher in the change before competition makes you obsolete”. When it comes to data-driven solutions, it is not always easy to identify where the solution is with respect to these two extremes.

Specifically, data-driven products, if left static, will see a gradual deterioration in performance over time making it difficult to identify when the system is ‘broken’.

More importantly, if we wait for the solution to be perfect, we may end up with a solution which is perfect but too late. Or worse, perfect at solving a problem which is only of marginal extra value.

The customer-facing teams have to collectively work with the data science team and the customer to agree on when to deploy trial runs of the solution and set the expectations of ‘continuous improvements’. Even the mighty Google search engine is a lot more accurate today than it was last year. And it will surely be even more accurate a year from now, largely because the underlying data-driven techniques learn from user behavior.

Address the “Illusion of 100% Accuracy”

Customer-facing teams also have to be vary of the “illusion of 100% accuracy”. The definition of accuracy in a data-driven solution can vary from user to user. Often, the end user is forgiving of errors if their essential needs are met.

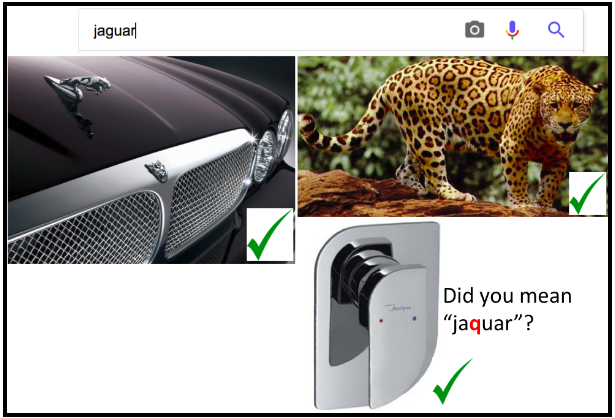

For example, let’s say we search for ‘jaguar’ on an image-search engine. Our perception of the quality of the search engine is going to be shaped broadly by two aspects:

- The actual output of the search engine, and

- Whether we were searching for ‘jaguar, the animal’ or ‘jaguar, the vehicle’

Yet another category of users may have come to the search engine looking for ‘jaquar, the company that makes sanitary fittings’ and will be ‘wow’ed if the search engine shows these images alongside the animal/vehicle images with a ‘did-you-make-a-spelling-mistake’ suggestion.

Similarly, a typical user of a web search engine is going to be satisfied even if the first few search results were not relevant but, say, the fifth search result was exactly what they were looking for.

Such educational sessions with the customer-facing teams and the customers themselves are quite imperative to manage their expectations as the new solution is rolled out.

Figure 1: Different results of an image search engine for a search term ‘jaguar’. Note that all the results can be perceived to be correct.

Figure 1: Different results of an image search engine for a search term ‘jaguar’. Note that all the results can be perceived to be correct.

A third aspect that the teams have to be made aware of is the seemingly non-deterministic nature of data-driven solutions. These solutions typically go through a training phase where they are trained on a subset of the universe of all possible data samples.

In real-world deployments, there will often be cases where the input sample, even though similar to one of the training samples, leads to an incorrect output. A good data-driven algorithm should provide generalizability (i.e., correct output on unseen input samples). But it can almost never guarantee a 100% accuracy.

This is a fundamental shift from how pure software-driven solutions work. An example of this is clicking on the ‘green “launch” icon’ on a website that will deterministically launch the new page. The customer-facing teams have to be educated about this behavior.

In one of the later articles in this series, I will discuss how the data science team should work closely with the customer-facing teams to plan for post-deployment support and ‘hot fixes’ for what I call the ‘publicity-hungry’ bugs. So keep an eye out for that!

Success of AI Solutions Needs a Paradigm Shift in Product Strategy and Execution

The second set of stakeholders is the executive team. AI/machine learning has caught the collective imagination of the mainstream media in general, but in particular also of the media that primarily caters to the technical executives.

The executives, thus, need tangible assurances that their organization is utilizing the AI revolution. At the same time, they need to be educated that the AI solutions have their own unique development, deployment and maintenance cycle. This, therefore, calls for a different timeline expectation.

I consider myself extremely lucky to have senior executives who are willing to get hands-on learning of various data-science/AI/ML concepts. They genuinely believe in the power of AI and are patient in terms of giving the data science team a long rope to build foundational data-driven solutions. These learning sessions go a long way in clearly articulating the business value of AI/ML.

It is critical in such conversations with the executive teams to distinguish between what I call the ‘consumer-AI’ and the ‘enterprise-AI’.

Source: Fabrik Brands

The popular image of ‘AI-can-solve-every-problem-imaginable’ is largely built based on problems that are low-stakes. Some typical examples of low-stakes ‘consumer-AI’ problems are things like:

- AI-driven social-media recommendation for friends, or

- AI-driven recommendation for which movie to watch

In the worst case, the recommended movie may actually be of the horror genre while it was recommended as a romantic comedy.

Contrast this with ‘enterprise-AI’, where, for example, an AI-driven solution is used to detect the number plates of cars zipping by on the freeway to flag cars which were part of criminal activities. Imagine the AI solution misfires a ‘5’ for a ‘6’ and an innocent driver is pulled over by armed patrol vehicles.

Such mistakes have the potential to lead to multimillion-dollar lawsuits. I call these situations as the high-stakes ‘enterprise-AI’ scenarios.

Strike a Fine Balance between “Data Science Coolness/Style” and “Delivery Substance”

The third set of stakeholders is the data science team. This is by far the most important stakeholder with respect to the timely delivery of the data-driven solution. This group is also typically most excited about simply using the latest coolest technology to the problem at hand, without necessarily always worrying about the appropriateness of the technology.

The delivery leader has to periodically remind the data science team that the AI is only a part of the whole puzzle and that the end consumer almost never interacts directly with the data science component. Also, the end consumer is not going to be more forgiving of the errors just because the latest coolest technology is used.

The other important role of the delivery leader is to provide enough air cover to the team from day-to-day delivery pressures. This helps them to explore deep and systematic ways to solve the problem at hand. This air cover is particularly important for the data science team (as compared to the software development team) to experiment.

This is because there exists a substantial gap between the theory of data science and its practice. Moreover, facilitating a work culture that is conducive to working on machine learning problems will also lead to higher job satisfaction. And that in turns leads to healthier employee retention!

End Notes

Now, the stakeholders are aligned on the advantages (and limitations) of the data science approach. The next step is to translate the business problem into a data-science problem. Our learnings on this step will be discussed in the next article.

Managing stakeholders is a key aspect most data science professionals are still not fully aware of. How has your experience been with this side of data science? I would love to hear your thoughts and experience. Let’s discuss in the comments section below.

About the Author

Dr. Om Deshmukh – Senior Director of Data Sciences and Innovation, Envestnet | Yodlee

Dr. Om Deshmukh is the senior director of data sciences and innovation at Envestnet|Yodlee. Om is a Ph.D. in Machine Learning from University of Maryland, College Park.

Dr. Om Deshmukh is the senior director of data sciences and innovation at Envestnet|Yodlee. Om is a Ph.D. in Machine Learning from University of Maryland, College Park.

He has made significant contributions to the field of data sciences for close to two decades now, which include 50+ patents (filed/granted) 50+ international publications and multi-million dollar top-line / bottom line impact across various business verticals. Om was recognised as one of the top 10 data scientists in India in 2017. In 2019, his team was recognised as one of the 10 best data sciences teams in India.

At Envestnet|Yodlee, Om leads a team of data scientists who drive foundational data science initiatives to mine actionable insights from transactional data. His team is also responsible for building end-to-end data science driven solutions and delivering those to the clients.

Prior to Yodlee, he was at IBM Research and Xerox Research driving technical strategic and research initiatives around data sciences and big data analytics. Om has strong ties with the academia in India and in the US. He is co-advising a Ph.D. student at IISc and serves as an industry expert for several BTech/MTech project evaluations.

Wow.. A wonderful insight and details about data science and analytics..

Thanks, Nilesh!

Great perspective Om - love the share with the community!

Glad you liked in, Kunal!

Hello OM Great starter! The perspective is well articulated. Eagerly waiting for rest 3

Thanks, Pankaj! The second article just came out. here it is: https://www.analyticsvidhya.com/blog/2019/08/data-science-leader-how-effectively-transition-business-requirements-data-driven-solutions

Great article ...lots to learn from it...thanks for the share !!

Thanks, Preeti. Glad you found it informative.

Thanks Om... Practical experience is incredibly useful in the application of any technology. Thank you for sharing yours...

Yes indeed, John! Practical experience, in particular in DS, helps sift through a lot of noise and get to the actual issues quickly.

great article OM, I completely agree with the aspects covered in this article but sometimes it becomes very challenging to explain the same paradigm of data science to your management and then data science becomes just implementing machine learning algorithms

what do you mean by "data science becomes just implementing machine learning algorithms"?