We just announced Cloudera DataFlow for the Public Cloud (CDF-PC), the first cloud-native runtime for Apache NiFi data flows. CDF-PC enables Apache NiFi users to run their existing data flows on a managed, auto-scaling platform with a streamlined way to deploy NiFi data flows and a central monitoring dashboard making it easier than ever before to operate NiFi data flows at scale in the public cloud.

In this blog post we’re revisiting the challenges that come with running Apache NiFi at scale before we take a closer look at the architecture and core features of CDF-PC.

The need for a cloud-native Apache NiFi service

Apache NiFi is a powerful tool for building data movement pipelines using a visual flow designer. Hundreds of built-in processors make it easy to connect to any application and transform data structures or data formats as needed. Because it supports both structured and unstructured data for streaming and batch integrations, Apache NiFi is quickly becoming a core component of modern data pipelines.

Apache NiFi deployments typically start out small with a limited number of users and data flows before they grow quickly once organizations realize how easy it is to implement new use cases. During this growth phase, organizations run into multi-tenancy related issues like resource contention and all their data flows sharing the same failure domain. To overcome these challenges, organizations typically start creating isolated clusters to separate data flows based on business units, use cases or SLAs. While this alleviates the multi-tenancy issues, it introduces additional management overhead and makes it harder to get a central monitoring view for data flows that are now spread across multiple clusters.

Depending on how the clusters were sized initially, organizations might also have to add compute resources to keep up with the growing number of use cases and ever-increasing data volumes. While NiFi nodes can be added to an existing cluster, doing so is a multi-step process: organizations have to continuously monitor resource usage, detect when demand is high enough to justify scaling, automate the provisioning of a new node with the required software, and set up its security configuration. Downscaling is even more involved, because users have to make sure that the NiFi node they want to decommission has processed all of its data and no longer receives new data; otherwise, data may be lost. Implementing automated scale-up and scale-down procedures for NiFi clusters is complex and time-consuming.
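To make the scale-down problem concrete, here is a minimal Python sketch of the safety rule described above: a node may only be removed after it stops receiving new data and has processed everything it has queued. The `Node` model, its fields and the `try_scale_down` helper are hypothetical and only illustrate the ordering of the steps; they are not a NiFi API.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical model of a NiFi node: only what the scale-down check needs."""
    name: str
    queued_flowfiles: int = 0        # data still waiting to be processed on this node
    accepting_new_data: bool = True  # whether the cluster still routes new data here


def pick_node_to_remove(nodes):
    """Choose the node with the least queued data as the decommission candidate."""
    return min(nodes, key=lambda n: n.queued_flowfiles)


def try_scale_down(nodes, min_nodes):
    """Return the node list after at most one *safe* removal.

    The candidate first stops accepting new data; it is only removed once its
    queues are empty. Until then it stays in the cluster in a draining state.
    """
    if len(nodes) <= min_nodes:
        return nodes
    candidate = pick_node_to_remove(nodes)
    candidate.accepting_new_data = False   # step 1: stop sending new data to it
    if candidate.queued_flowfiles > 0:     # step 2: wait until its queues are drained
        return nodes
    return [n for n in nodes if n is not candidate]  # step 3: now safe to remove


# Usage: nifi-1 has nothing queued, so it can be removed right away.
cluster = [Node("nifi-0", 120), Node("nifi-1", 0), Node("nifi-2", 45)]
cluster = try_scale_down(cluster, min_nodes=2)
print([n.name for n in cluster])  # ['nifi-0', 'nifi-2']
```

Running this check repeatedly (for example on every monitoring interval) is what turns a one-off removal into the "detect, drain, then decommission" procedure that teams otherwise have to automate themselves.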

Ultimately, these challenges force NiFi teams to spend a lot of time managing cluster infrastructure instead of building new data flows, which slows down use case adoption.

A technical look at Cloudera DataFlow for the Public Cloud

When we took a close look at these challenges, we realized that we had to eliminate the infrastructure management complexity that comes with large-scale NiFi deployments. We want our NiFi users to be able to focus on what matters to them: building new data flows and ensuring that these data flows meet the business SLAs.

Users shouldn’t have to worry about whether their data flow can scale to handle a change in data volume. Users shouldn’t have to manage multiple NiFi clusters if some flows need to be isolated. Users shouldn’t have to build their own central monitoring system. Instead, there should be a cloud service that allows NiFi users to easily deploy their existing data flows to a scalable runtime with a central monitoring dashboard providing the most relevant metrics for each data flow. This is what Cloudera DataFlow for the Public Cloud offers to NiFi users.

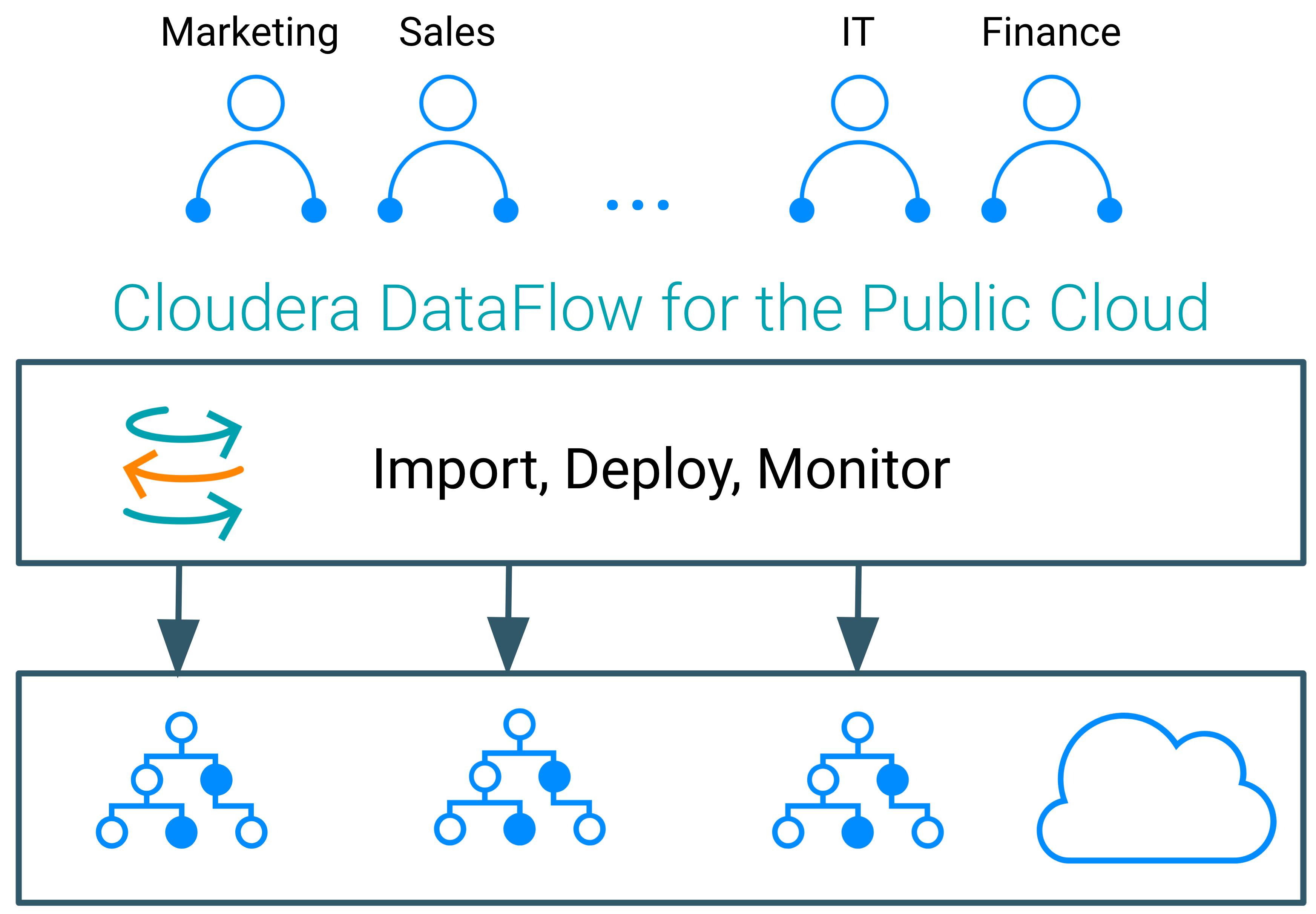

Figure 1: CDF-PC allows organizations to deploy and monitor their NiFi data flows centrally while

A new cloud-native architecture

CDF-PC uses Kubernetes as its scalable runtime and provisions NiFi clusters on top of it as needed. The foundation of CDF-PC is a brand-new Kubernetes Operator, developed from the ground up to manage the lifecycle of Apache NiFi clusters on Kubernetes. When a user creates a deployment request, the operator receives it and provisions a new, fully secured NiFi cluster. We have built our years of experience running NiFi clusters securely at scale into the operator, so administrators have zero setup work when creating new clusters. Once a cluster is created, the operator also takes care of the rest of its lifecycle, such as upgrading Apache NiFi to a new version or terminating the cluster.
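Kubernetes operators commonly implement a reconcile loop: they compare the desired state from a request with the observed state of the cluster and derive the actions needed to close the gap. The sketch below illustrates that general pattern for the lifecycle steps mentioned above (create, upgrade, resize, terminate). It is an assumption-based illustration, not the CDF-PC operator; `ClusterSpec`, `ClusterStatus` and the action strings are hypothetical, and the version numbers are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSpec:
    """Desired state, as carried by a (hypothetical) deployment request."""
    nifi_version: str
    node_count: int
    terminate: bool = False


@dataclass(frozen=True)
class ClusterStatus:
    """Observed state of the cluster as the operator last saw it."""
    exists: bool
    nifi_version: str = ""
    node_count: int = 0


def reconcile(spec, status):
    """Return the actions that move the observed state toward the desired state.

    An operator would call this whenever the spec or the cluster changes and
    then execute the returned actions; an empty list means nothing to do.
    """
    if spec.terminate:
        return ["delete-cluster"] if status.exists else []
    if not status.exists:
        return ["create-secure-cluster"]
    actions = []
    if status.nifi_version != spec.nifi_version:
        actions.append(f"upgrade-to-{spec.nifi_version}")
    if status.node_count < spec.node_count:
        actions.append(f"add-nodes:{spec.node_count - status.node_count}")
    elif status.node_count > spec.node_count:
        actions.append(f"remove-nodes:{status.node_count - spec.node_count}")
    return actions


print(reconcile(ClusterSpec("1.14.0", 3), ClusterStatus(exists=False)))
# ['create-secure-cluster']
print(reconcile(ClusterSpec("1.15.0", 3), ClusterStatus(True, "1.14.0", 2)))
# ['upgrade-to-1.15.0', 'add-nodes:1']
```

Because `reconcile` is a pure function of desired and observed state, it can be re-run safely after failures or restarts, which is the main reason operators are structured this way.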

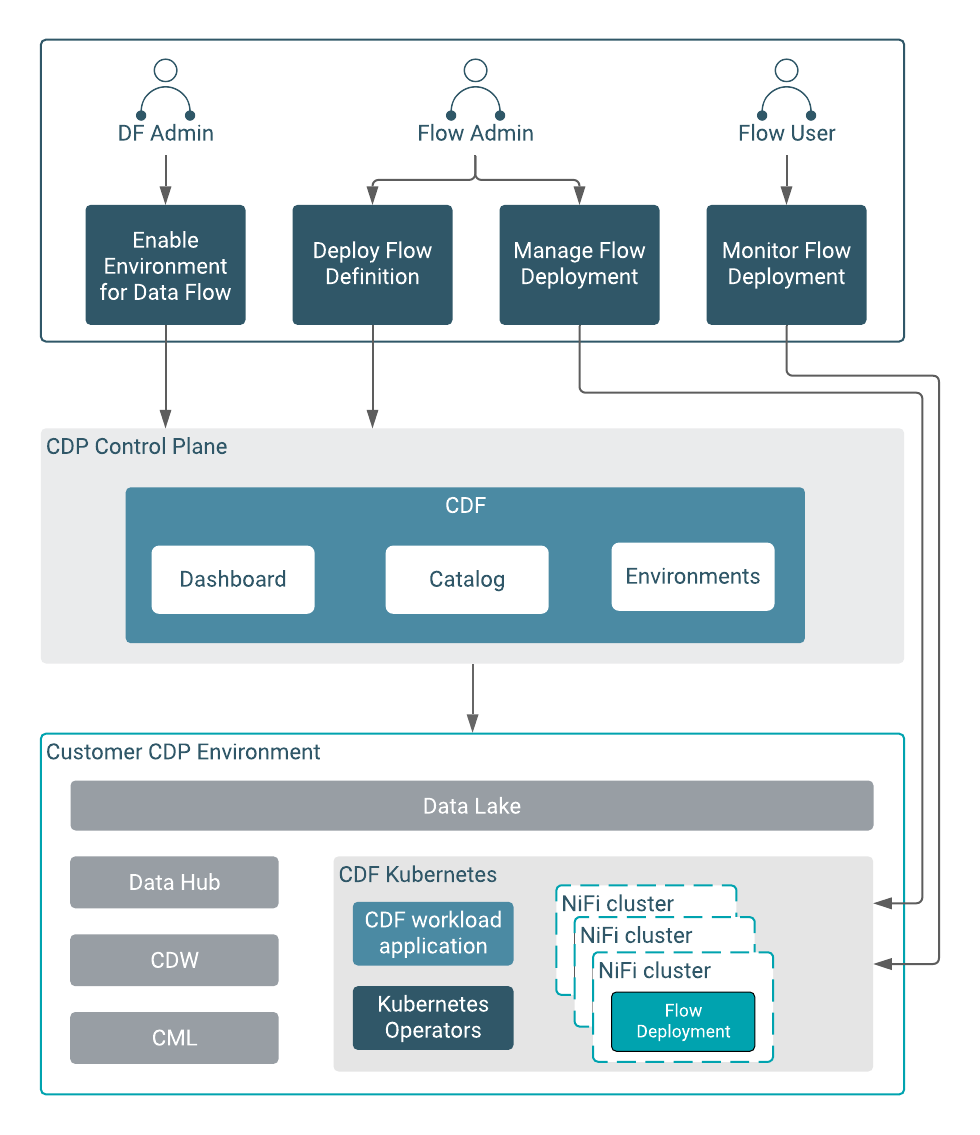

Users access the CDF-PC service through the hosted CDP Control Plane. The CDP Control Plane hosts critical components of CDF-PC such as the Catalog, the Dashboard and the ReadyFlow Gallery. While users initiate new NiFi deployments from the Control Plane, the actual NiFi deployments are created in the customer's cloud account. Keeping deployment initiation and monitoring in the Cloudera-managed Control Plane, and data processing in the customer's cloud account, ensures that sensitive data never leaves the customer environment while CDF-PC still manages the required infrastructure.

Figure 2: Users initiate different actions from the CDP Control Plane while data processing takes place in the customer cloud environment

Reuse your existing NiFi data flows and import them to CDF-PC

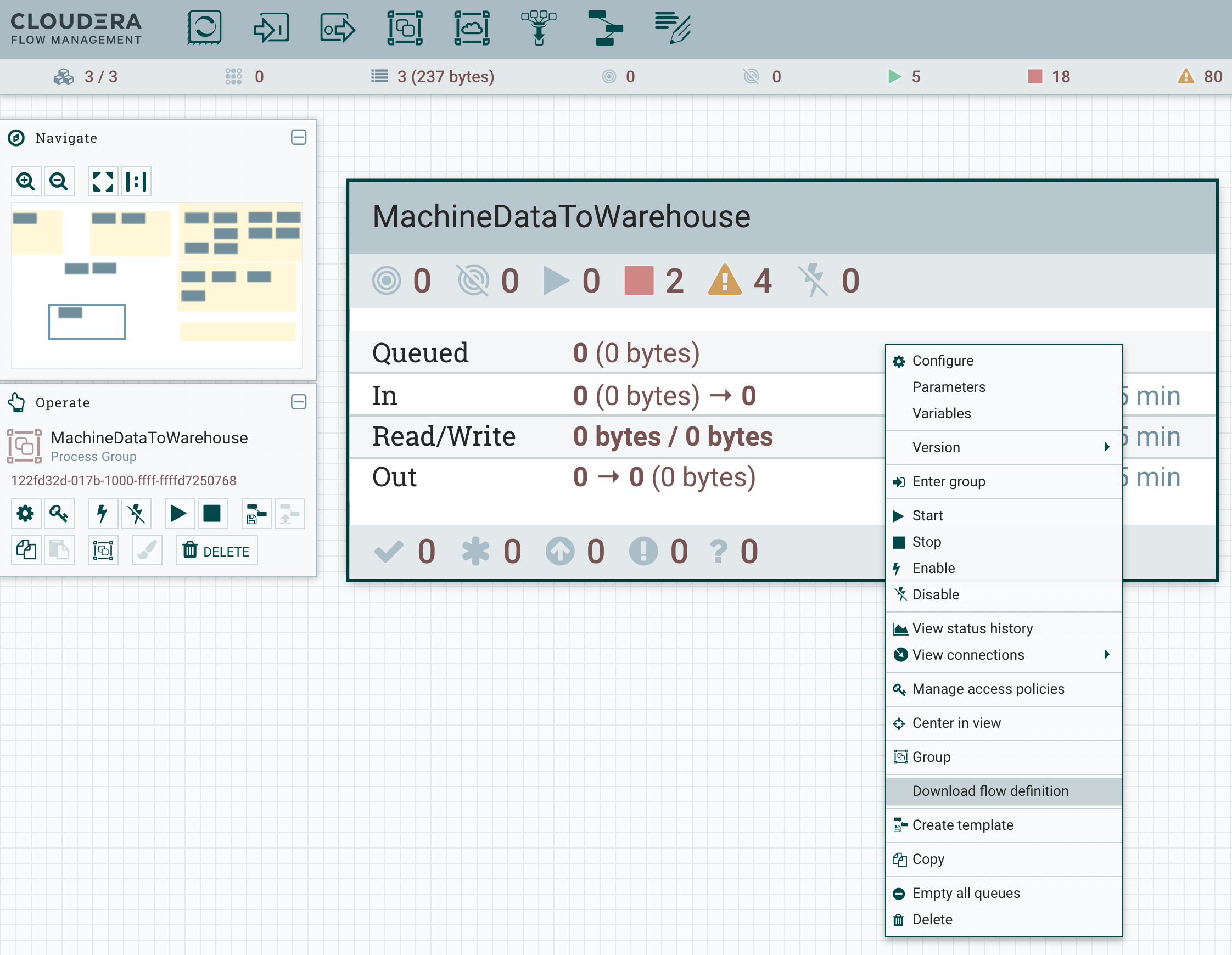

Before you can create any NiFi deployments with CDF-PC, you have to import your existing NiFi flow definitions into the Catalog. If you have an existing NiFi development cluster or production environment from which you want to export a flow, simply right-click a process group on the NiFi canvas and select the “Download flow definition” option (available in Apache NiFi 1.11 and later). This creates a JSON file containing the flow metadata. If you’re using Apache NiFi Registry, you can also export flow definitions from there; they follow the same format.

Figure 3: Export a NiFi process group from an existing cluster

You can export a process group from any level of your NiFi data flow hierarchy, giving you full flexibility over the level of isolation you want to achieve when deploying these data flows with CDF-PC. When an exported flow definition is turned into a deployment with CDF-PC, you can allocate resources to it and treat it as an independent NiFi deployment.
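Because the exported file is plain JSON, you can inspect which process groups and processors it contains before importing it, which helps when deciding which level of the hierarchy to export. The sketch below assumes the root process group sits under a `flowContents` key and that each group lists its `processors` and nested `processGroups`; treat these key names as an assumption and verify them against your own export. The sample definition is a stripped-down, hypothetical example.

```python
import json


def count_components(group, path=""):
    """Recursively yield (process-group path, processor count) pairs.

    Assumes each group dict has "name", "processors" and "processGroups" keys;
    missing keys are treated as empty so partial files don't crash the walk.
    """
    here = f"{path}/{group.get('name', '?')}"
    yield here, len(group.get("processors", []))
    for child in group.get("processGroups", []):
        yield from count_components(child, here)


def summarize_flow_definition(text):
    """Parse an exported flow definition and summarize its process-group hierarchy."""
    definition = json.loads(text)
    return dict(count_components(definition["flowContents"]))


# Hypothetical, heavily trimmed flow definition used only for illustration.
sample = json.dumps({
    "flowContents": {
        "name": "Ingest",
        "processors": [{"name": "ConsumeKafka"}],
        "processGroups": [
            {"name": "Transform",
             "processors": [{"name": "ConvertRecord"}, {"name": "UpdateAttribute"}],
             "processGroups": []},
        ],
    }
})
print(summarize_flow_definition(sample))
# {'/Ingest': 1, '/Ingest/Transform': 2}
```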

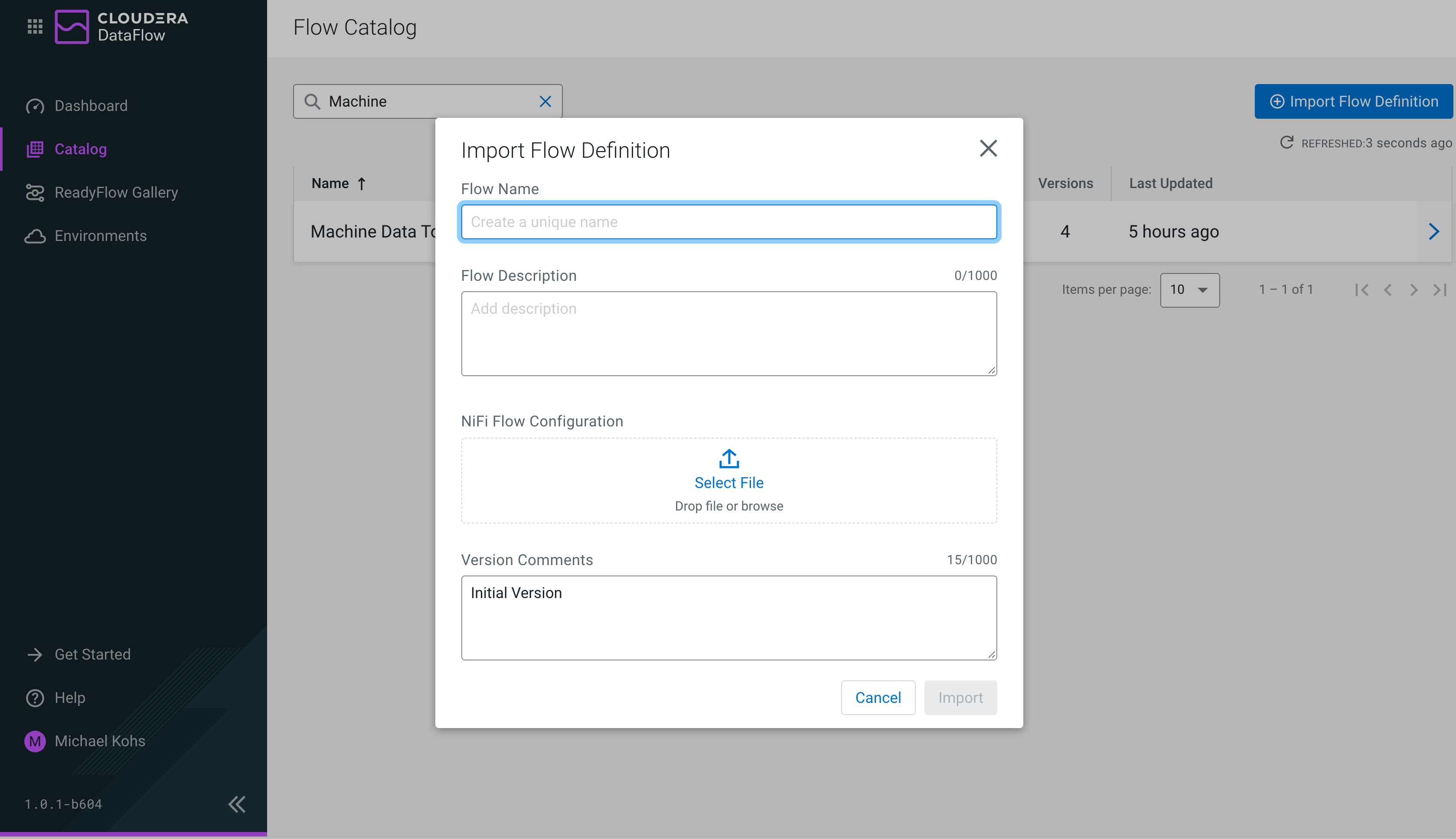

Once you have exported the process groups from your existing NiFi deployment, you can import them into the CDF-PC Flow Catalog. The flow catalog is the central repository for all flow definitions that can be deployed using CDF-PC.

Figure 4: Importing a NiFi flow definition into the CDF-PC Flow Catalog

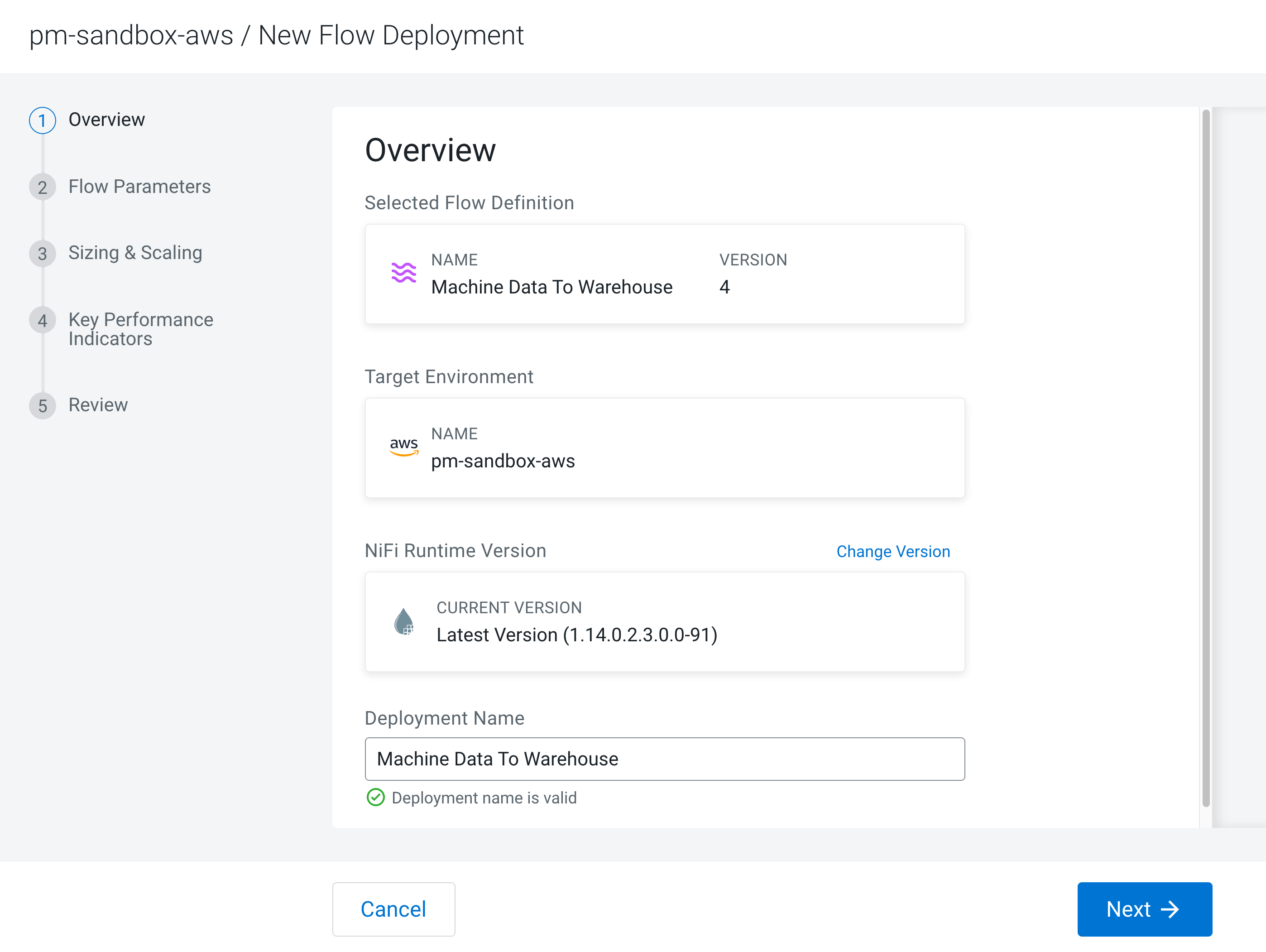

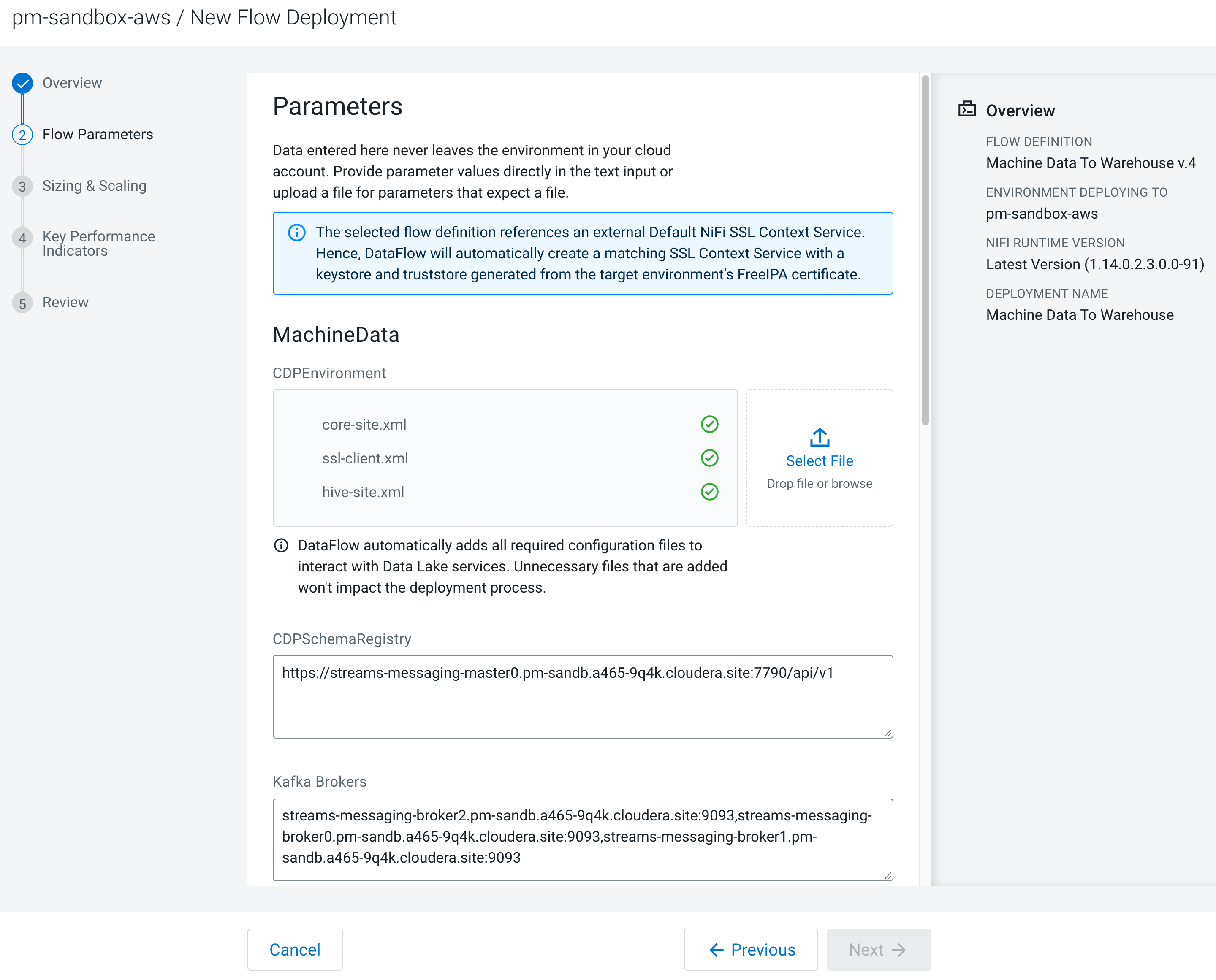

Simple, wizard-driven NiFi deployments

When a new deployment is initiated from the central Flow Catalog, CDF-PC walks the user through the deployment process with a wizard. The wizard lets users specify values for the flow parameters required to run the data flow, such as connection strings, usernames and passwords. Users can also upload additional dependencies like configuration files or JDBC drivers. These resources are automatically mounted and made available to all NiFi nodes, eliminating the tedious task of manually copying files to every node in traditional deployments.

Figure 5: CDF-PC provides simple, wizard-driven NiFi deployments
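A deployment can only start once every flow parameter has a value. The sketch below shows one way to compute which parameters a user still has to provide in the wizard, assuming the flow definition carries a `parameterContexts` map whose entries list parameters with `name`, `sensitive` and `value` fields. It also assumes that sensitive values such as passwords are not included in exports and therefore always have to be supplied. The function name and the sample parameters are hypothetical.

```python
def missing_parameters(definition, supplied):
    """Return the sorted names of parameters that still need a value.

    Assumption: definition["parameterContexts"] maps context names to objects
    with a "parameters" list of {"name", "sensitive", "value"} entries. A
    parameter counts as covered if the user supplied it, or if it carries a
    non-sensitive exported value that can serve as a default.
    """
    missing = []
    for context in definition.get("parameterContexts", {}).values():
        for param in context.get("parameters", []):
            name = param["name"]
            sensitive = param.get("sensitive", False)
            # Sensitive values are assumed to be absent from exports.
            has_default = param.get("value") is not None and not sensitive
            if name not in supplied and not has_default:
                missing.append(name)
    return sorted(missing)


definition = {"parameterContexts": {"kafka": {"name": "kafka", "parameters": [
    {"name": "Kafka Broker Endpoint", "sensitive": False, "value": None},
    {"name": "Kafka Password", "sensitive": True},
    {"name": "Topic", "sensitive": False, "value": "orders"},
]}}}
print(missing_parameters(definition, {"Kafka Broker Endpoint": "broker:9093"}))
# ['Kafka Password']
```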

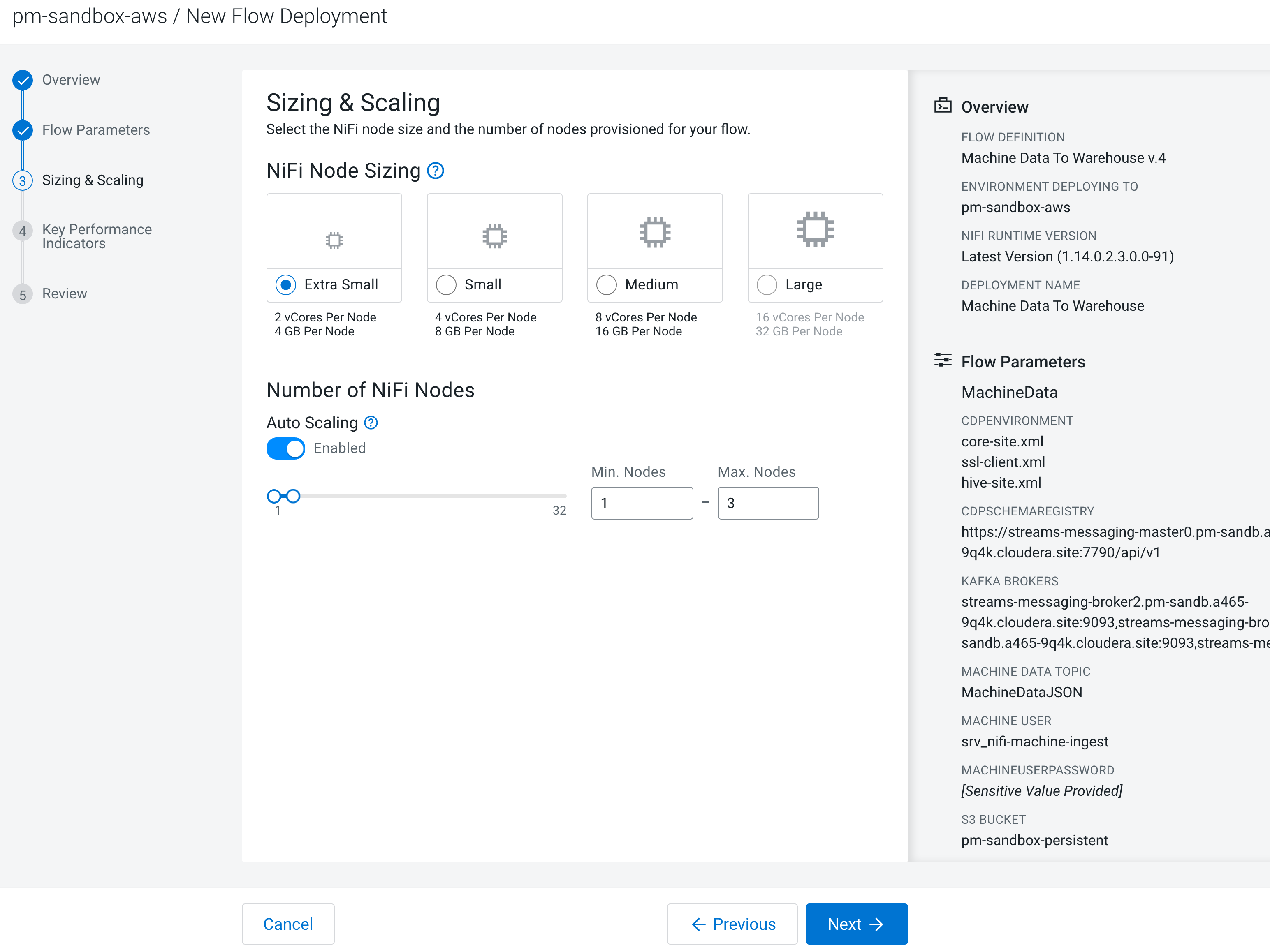

Auto-scaling flow deployments based on CPU utilization

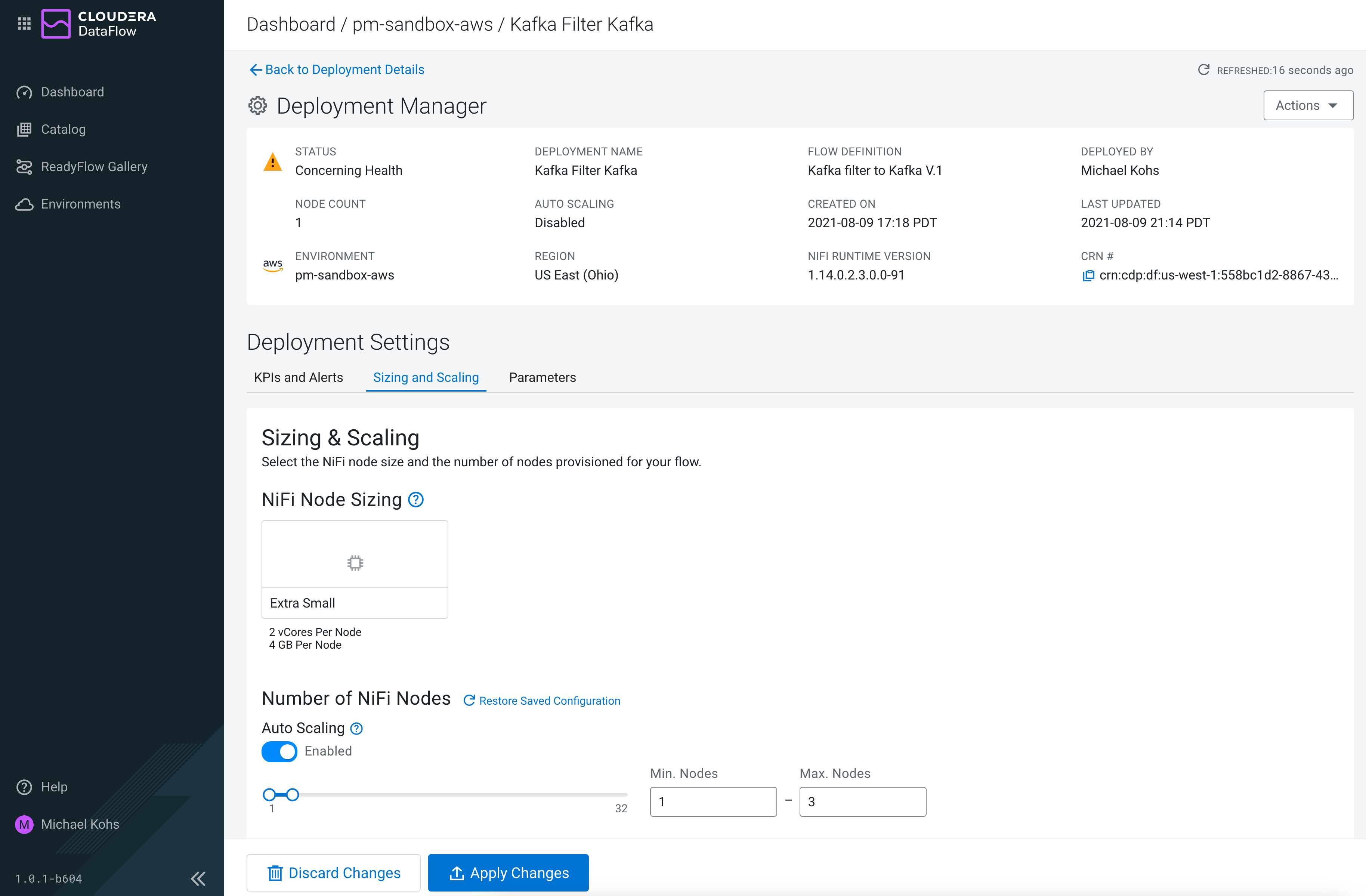

An important step in the deployment wizard is providing sizing and scaling information for the flow deployment. Users can select from predefined NiFi node sizes and specify how many NiFi nodes they want to provision. Turning on auto-scaling for a flow deployment is as simple as flipping a switch and specifying a lower and an upper scaling boundary. CDF-PC continuously monitors the CPU utilization of the resulting NiFi deployment and scales it up or down as needed, without any user intervention. Even after deployments have been created, users can adjust their scaling boundaries to react to changing processing requirements.

Figure 6: With CDF-PC, auto-scaling is as simple as flipping a switch
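The exact scaling policy is not spelled out here, so the sketch below uses the proportional rule of the Kubernetes Horizontal Pod Autoscaler as a stand-in: scale the node count by the ratio of observed to target CPU utilization, round up, then clamp the result to the lower and upper boundaries set in the wizard. Percentages are integers to keep the arithmetic exact; the function name and its parameters are hypothetical.

```python
def desired_node_count(current_nodes, cpu_percent, target_percent, min_nodes, max_nodes):
    """HPA-style proportional scaling, clamped to the user's scaling boundaries.

    cpu_percent is the observed average CPU utilization across nodes and
    target_percent the utilization the autoscaler aims for (both 0..100).
    """
    if not 1 <= min_nodes <= max_nodes:
        raise ValueError("expected 1 <= min_nodes <= max_nodes")
    if target_percent <= 0:
        raise ValueError("target_percent must be positive")
    # Integer ceiling of current_nodes * cpu_percent / target_percent (no float rounding).
    raw = -(-(current_nodes * cpu_percent) // target_percent)
    return max(min_nodes, min(max_nodes, raw))


print(desired_node_count(3, 90, 60, min_nodes=1, max_nodes=5))   # 5
print(desired_node_count(2, 100, 50, min_nodes=1, max_nodes=3))  # 3 (capped at the upper boundary)
```

The clamping step is what the lower and upper boundaries in the wizard control: however hot or idle the flow gets, the node count never leaves that range.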

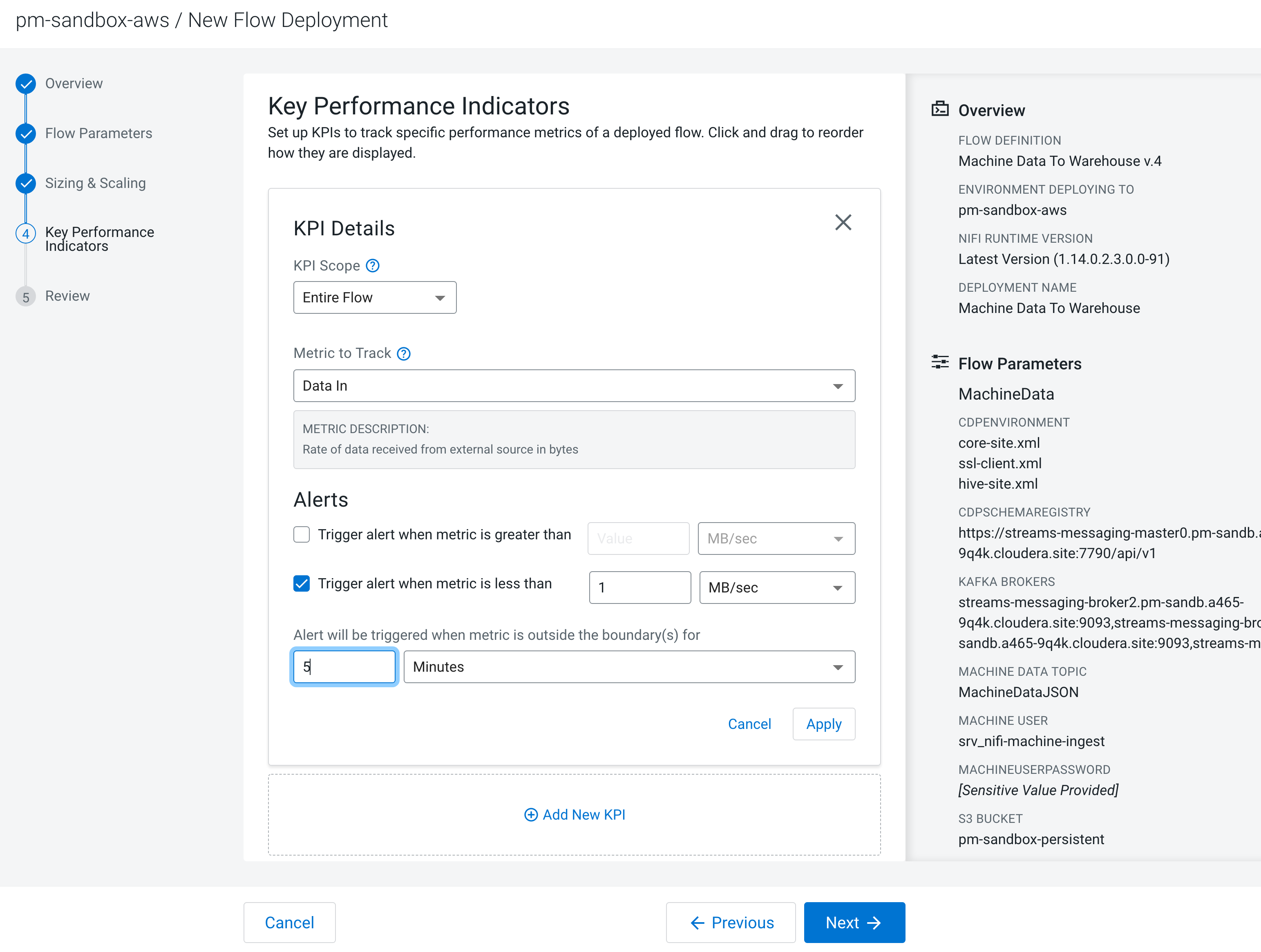

Use KPIs to track important data flow metrics

When stepping through the deployment wizard, CDF-PC allows users to create KPIs and Alerts to track important metrics for their deployments. KPIs can be defined on the entire data flow to track metrics like how much data the flow is sending to or receiving from external systems, as well as on individual NiFi components such as process groups, processors and connections. Each KPI can optionally trigger alerts if a certain condition is met. For example, users could define a KPI for the Entire Flow that tracks the Data In metric and triggers an alert whenever the flow is receiving data at a rate of less than 1 MB/s for five minutes.

Figure 7: Define KPIs and alerts to stay on top of important data flow metrics
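The example KPI above (Data In below 1 MB/s for five minutes) boils down to tracking how long the rate has continuously stayed under a threshold. Here is a minimal sketch of that condition; the class name, the one-minute sampling interval in the usage example and treating 1 MB as 1,000,000 bytes are assumptions, not a description of how CDF-PC evaluates alerts.

```python
class LowThroughputAlert:
    """Fires once the data-in rate has stayed below a threshold for a full window.

    Hypothetical helper: feed it (timestamp_seconds, bytes_per_second) samples
    in time order. Defaults encode "Data In below 1 MB/s for five minutes".
    """

    def __init__(self, threshold_bytes_per_sec=1_000_000, window_seconds=300):
        self.threshold = threshold_bytes_per_sec
        self.window = window_seconds
        self.below_since = None  # when the rate first dropped below the threshold

    def observe(self, timestamp, rate):
        """Record one sample and return True if the alert condition is met."""
        if rate >= self.threshold:
            self.below_since = None  # any healthy sample resets the timer
            return False
        if self.below_since is None:
            self.below_since = timestamp
        return timestamp - self.below_since >= self.window


alert = LowThroughputAlert()
samples = [(0, 2_000_000), (60, 500_000), (120, 400_000), (180, 300_000),
           (240, 600_000), (300, 700_000), (360, 800_000)]
results = [alert.observe(t, r) for t, r in samples]
print(results)  # [False, False, False, False, False, False, True]
```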

A single pane of glass to monitor and manage flow deployments

As discussed earlier in this post, monitoring data flows across traditional NiFi clusters is challenging and requires configuring third-party monitoring tools to gain a global view of all data flows.

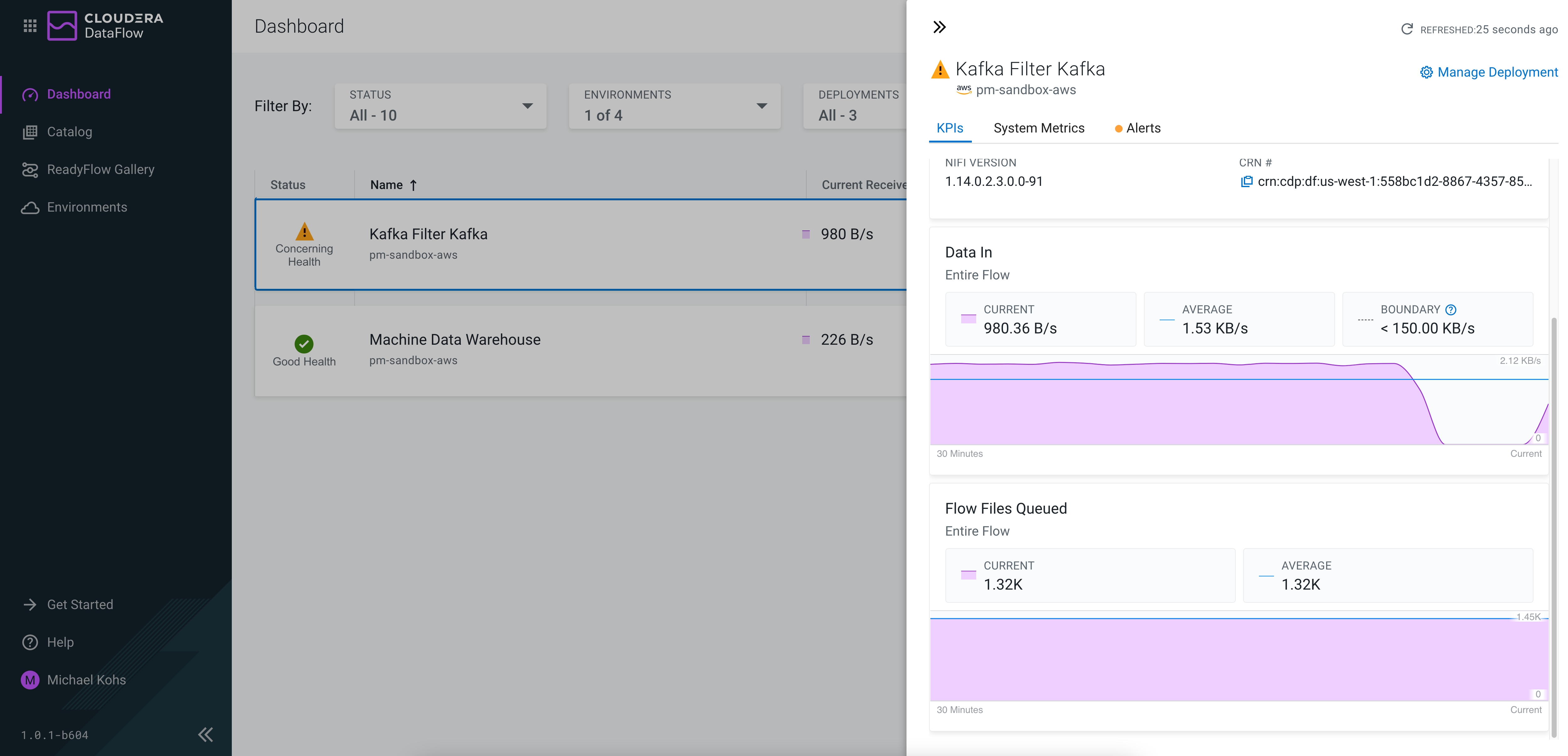

With CDF-PC every flow deployment can be centrally monitored and managed in the Dashboard. The Dashboard has been designed to allow users to quickly identify whether any of their data flows is not performing as expected and requires attention. Users get information about the current and historical flow performance and have detailed monitoring data available for the KPIs that they defined when deploying the flows.

The deployments also surface status events, warnings and error messages to inform users about the health of their flow deployments.

Figure 8: The monitoring dashboard provides a central view of all data flows, and KPIs let users track flow component metrics without opening the NiFi UI

From the Dashboard, users can open the Deployment Manager to manage existing deployments: they can update parameter values, change the auto-scaling and sizing configuration, or add and edit KPIs without having to redeploy their data flow.

Figure 9: The Deployment Manager allows users to change deployment configurations without redeploying the data flow

Resource Isolation between flow deployments

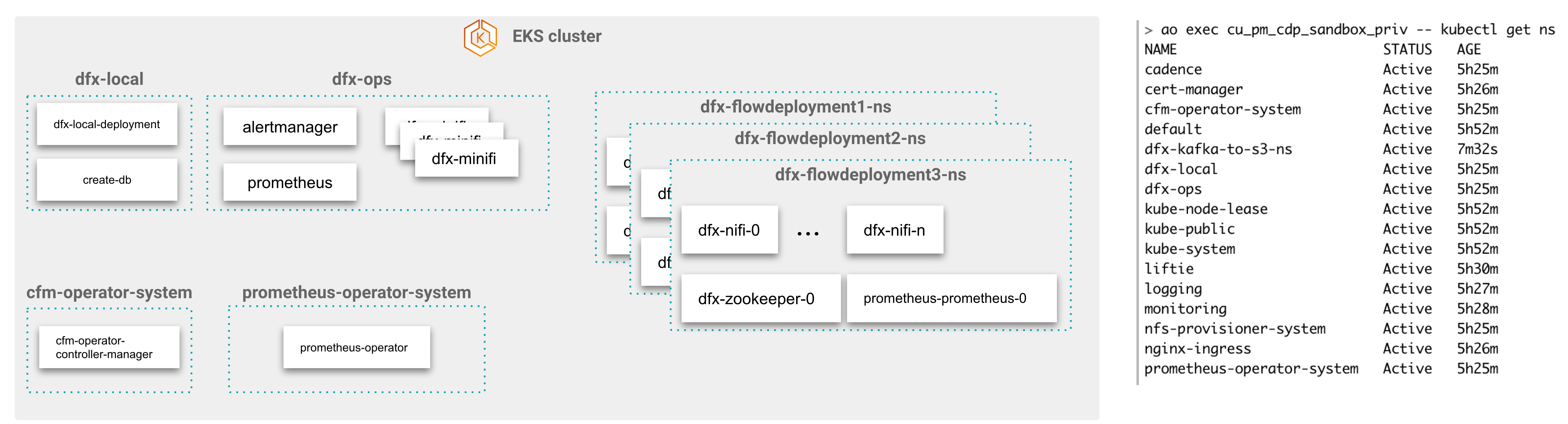

Let’s take a closer look at what happens when users deploy a flow definition using the Deployment Wizard. When a deployment request is submitted, CDF-PC provisions a new namespace in the shared Kubernetes cluster. It configures and deploys the NiFi pods following the specification that users provided during the Sizing & Scaling section of the Deployment Wizard. Therefore, every row that is displayed in the monitoring dashboard represents a NiFi cluster running in its own namespace.

By using a dedicated namespace for each deployment, CDF-PC provides resource and failure domain isolation between different data flows. Each deployment can scale independently, which gives users great flexibility in how they provision their data flows. In traditional NiFi deployments, isolating flows in separate clusters comes at the cost of increased management overhead and the loss of central monitoring. CDF-PC, in contrast, provides isolation without requiring any additional work from the user, while still feeding the central monitoring Dashboard.

Figure 10: Each flow deployment uses its own dedicated namespace and resources on a shared Kubernetes cluster
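Each deployment needs a namespace name that Kubernetes accepts: namespace names must be RFC 1123 labels, meaning at most 63 characters of lowercase letters, digits and `-`, starting and ending with a letter or digit. The sketch below derives such a name from a free-form deployment name. The `flow` prefix and the hash suffix are hypothetical conventions, not the naming scheme CDF-PC actually uses.

```python
import hashlib
import re

MAX_LABEL = 63  # RFC 1123 label length limit for Kubernetes namespace names


def namespace_for(deployment_name, prefix="flow"):
    """Derive a stable, valid namespace name for one flow deployment.

    The same deployment name always maps to the same namespace; the short
    hash suffix keeps names distinct when different names slugify alike.
    """
    slug = re.sub(r"[^a-z0-9]+", "-", deployment_name.lower()).strip("-")
    suffix = hashlib.sha256(deployment_name.encode("utf-8")).hexdigest()[:8]
    # Reserve room for "<prefix>-" and "-<suffix>" so the result fits in 63 chars.
    budget = MAX_LABEL - len(prefix) - len(suffix) - 2
    slug = slug[:budget].strip("-")
    parts = [prefix, slug, suffix] if slug else [prefix, suffix]
    return "-".join(parts)


print(namespace_for("Kafka to S3 (Orders)"))
# flow-kafka-to-s3-orders- followed by 8 hex characters
```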

Upgrade NiFi versions with the click of a button

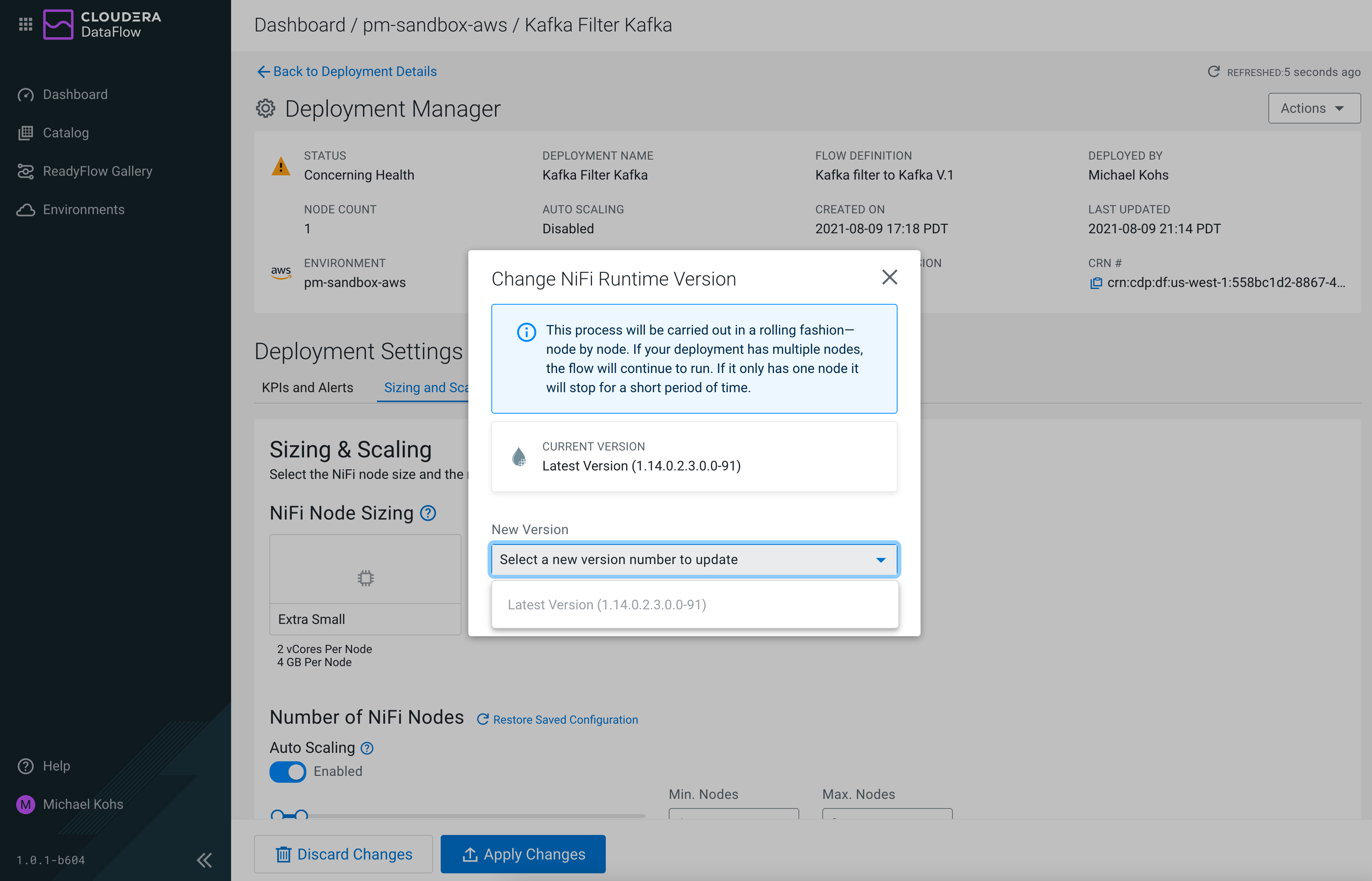

Upgrading NiFi versions to apply new maintenance releases or hotfixes is a common task for NiFi administrators. In traditional NiFi deployments, users need to monitor repositories, download the required bits and manually apply the hotfix.

Compare this to CDF-PC, where new NiFi hotfixes are automatically made available to all users as soon as Cloudera releases them. From the Deployment Manager, users can select Change NiFi Runtime Version for an existing deployment, pick the latest version and initiate the upgrade. If the deployment has more than one NiFi node, the upgrade is carried out node by node in a rolling fashion.

Figure 11: Users can change the NiFi runtime version for existing deployments
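A rolling upgrade replaces one node at a time and only moves on once the upgraded node is healthy again, so the remaining nodes keep processing data throughout. The sketch below captures that ordering; `upgrade` and `is_healthy` are hypothetical callbacks standing in for the real restart and cluster health checks, and the version strings are placeholders.

```python
def rolling_upgrade(nodes, target_version, upgrade, is_healthy):
    """Upgrade nodes one at a time, halting if a node does not come back healthy.

    `nodes` maps node names to their current NiFi version and is updated in
    place. Returns the names of the nodes that were upgraded, in order.
    """
    upgraded = []
    for name, version in list(nodes.items()):
        if version == target_version:
            continue  # already on the target runtime
        upgrade(name, target_version)
        if not is_healthy(name):
            # Stop here so at most one node is ever in a bad state.
            raise RuntimeError(f"{name} unhealthy after upgrade; halting rollout")
        nodes[name] = target_version
        upgraded.append(name)
    return upgraded


cluster = {"nifi-0": "1.14.0", "nifi-1": "1.14.0", "nifi-2": "1.15.0"}
log = []
done = rolling_upgrade(cluster, "1.15.0",
                       upgrade=lambda n, v: log.append(f"upgrade {n} -> {v}"),
                       is_healthy=lambda n: True)
print(done)  # ['nifi-0', 'nifi-1']
```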

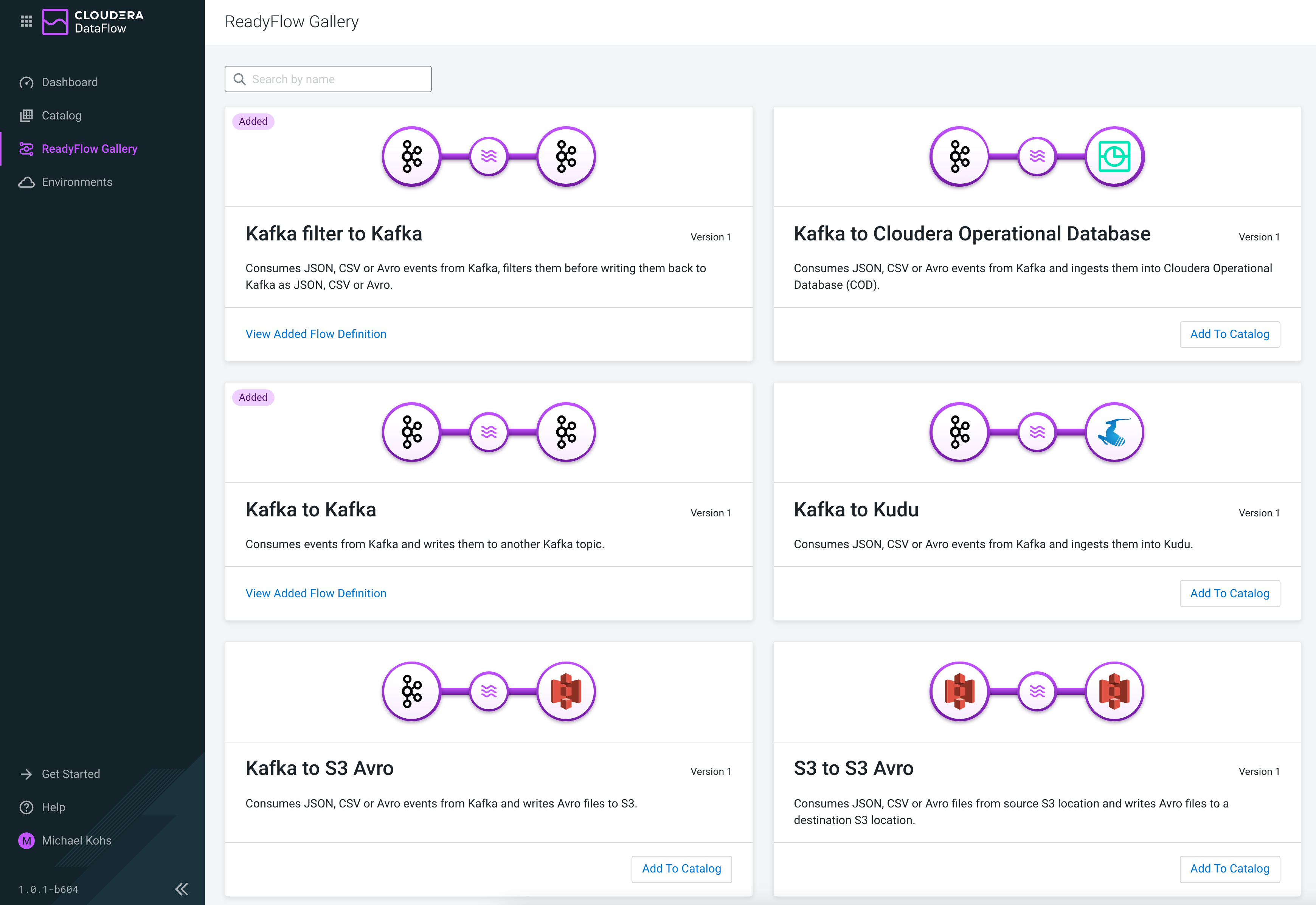

Deploy your first data flow in less than 5 minutes with ReadyFlows

We talked a lot about how CDF-PC helps NiFi users to run their existing NiFi data flows in a cloud-native way. But what about users who have data movement needs but are not yet familiar with Apache NiFi?

CDF-PC ships with ReadyFlows for common data movement use cases that help users get started with NiFi. ReadyFlows can be added to the central catalog and are deployed like any other flow definition stored there. After adding a ReadyFlow to the catalog, users can start the Deployment Wizard and provide the required parameters to configure it. With ReadyFlows, new users can deploy their first data flows in less than five minutes, without any prior NiFi experience.

Figure 12: The ReadyFlow gallery helps users get started with the most common data flows

What’s next?

With this release of Cloudera DataFlow for the Public Cloud we’re entering a new era of running Apache NiFi data flows. CDF-PC enables Apache NiFi users to run their existing data flows on a managed, auto-scaling platform with a streamlined way to deploy NiFi data flows and a central monitoring dashboard making it easier than ever before to operate NiFi data flows at scale in the public cloud.

As we continue to expand and optimize CDF-PC, stay tuned for more exciting news and announcements.

Register for our webinar to watch a live demonstration of CDF-PC, and learn more about use cases and technical details on our product page and official documentation.

Looks great!

Is there already an API available for flow deployments?

Thanks Daniel!

Right now you can enable DataFlow for a CDP environment using the CLI and we’ll be adding support for deployments through the CLI in our next release.

Hi Daniel,

Our recent CDF-PC release added the ability to programmatically create flow deployments using the CDP CLI. For more details, check out our latest blog titled “How to Automate Apache NiFi Data Flow Deployments in the Public Cloud”

Hello,

Do you have an idea of when CDF-PC will be available on Azure (in Tech Preview and GA)?

Hi Delio,

CDF-PC will be available on Azure as Tech Preview very soon. Please reach out to your Cloudera Account representative and we can help you understand how to participate in the Tech Preview. Looking forward to it!

CDF-PC has been generally available on Azure since early February 2022. Read more here: https://blog.cloudera.com/announcing-the-ga-of-cloudera-dataflow-for-the-public-cloud-on-microsoft-azure/