We’ve read many predictions for 2023 in the data field: they cover excellent topics like data mesh, observability, governance, lakehouses, LLMs, etc. Here at DataKitchen, we wanted to take a different approach: look at a three-year horizon. What will the world of data tools be like at the end of 2025? The crazy idea is that data teams are beyond the boom decade of “spending extravagance” and need to focus on doing more with less. This will drive a new consolidated set of tools the data team will leverage to help them govern, manage risk, and increase team productivity.

What will exist at the end of 2025?

A combined, interoperable suite of tools for data team productivity, governance, and security for large and small data teams.

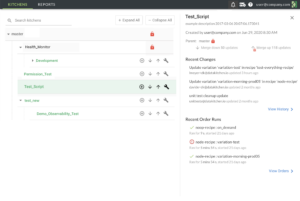

- DataOps Automation (Orchestration, Environment Management, Deployment Automation)

- DataOps Observability (Monitoring, Test Automation)

- Data Governance (Catalogs, Lineage, Stewardship)

- Data Privacy (Access and Compliance)

- Data Team Management (Projects, Tickets, Documentation, Value Stream Management)

What are the drivers of this consolidation?

- Recession: the party is over. Central IT Data Teams focus on standards, compliance, and cost reduction. They are moving away from direct connection with ‘the business’ and toward data enablement rather than value delivery. Their software purchase behavior will align with enabling standards for line-of-business data teams who use various tools that act on data.

- Enterprises are more challenged than ever in their data sprawl, so reducing risk and lowering costs drive software spending decisions. Driving new opportunities and expansion takes a back seat.

- The vendor landscape for addressing risk, cost, productivity, and governance often overlaps. The vendor sprawl leaves enterprises to integrate and rationalize their approach.

- Ultimately, there will be an interoperable toolset for running the data team, alongside a more focused toolset (ELT/Data Science/BI) for acting upon data. As an analogy, the DevOps space has seen code storage, CI/CD, team workflow, value stream management, testing, and other tools consolidate into one platform. And the tools for acting on data are consolidating too: Tableau does data prep, Alteryx does data science, Qlik joined with Talend, etc.

- It’s code -> governance, not governance -> code. Most data governance tools today start with the slow, waterfall building of metadata with data stewards and then hope to use that metadata to drive code that runs in production. In reality, that ‘active metadata’ is just a written specification from which a data developer writes their code. Modern tools like dbt auto-generate lineage and catalogs from the code itself, giving a more accurate and timely set of governance metadata.

- Data Observability is booming in popularity, with a dozen startups in the space. Data teams realize that untested production systems produce error-prone output, their business customers want more trust in the data, and everyone needs to avoid time-consuming rework.

- Data Infrastructure cost management (or FinOps) will become necessary. Those big cloud bills will get scrutinized, and the CFO will ask hard questions: What work is important? Can we do this cheaper? Data teams will have to provide the data needed to understand the cost per data journey, broken down by team.

- There is an opportunity to enable Data Stewards to go beyond their current passive role in governance definition to a more active role in improving data testing and observability by reviewing and configuring production data tests.

- Ultimately, data teams want to change their production data systems quickly and with low risk. They need a complete ‘deployable unit’ that bundles ETL/Data Science/BI code with the matching changes to the catalog, lineage, and privacy/security metadata. Can that be done with a minimal set of vendor tools?

- The code, configuration, and metadata about your data are the Intellectual Property of your data teams. The vendor who owns that database of record in the enterprise will drive lots of value and ‘stickiness.’

- The explosion of tools that act on data: 50 ELT or ETL tools, 50 data science tools, and 50 data visualization tools. Most companies have several types of tools in production. Most companies are moving to the cloud and have their own proprietary data tools suite. Our problems are simple: too many tools, too many errors, and insufficient value delivered.

- Data Privacy and the risk of data misuse are part of the compliance and standards central data teams need to enforce.

- Data teams are underperforming; they will look to the changes that IT organizations have adopted with Agile and DevOps over the past five years and up their game with an Agile, DataOps approach.

- The changing role of central IT data teams in large companies: large companies typically have a ‘corporate’ data function. These central teams were once the center of the data universe at many companies. However, over the past decade, line-of-business data teams have carried the burden of insight generation for the business. Central teams are now the hub, helping to enable many spokes. With a hub-and-spoke or data enablement model, central teams are about guardrails. Each line-of-business spoke has the freedom to make its own ETL/BI/data science tool choice while delivering value to its data customer. The central teams will become raw data loading functions, with required standards for governance, security, privacy, observability, testing, and production operations.
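To make the ‘code -> governance’ driver above concrete, here is a minimal Python sketch of deriving lineage directly from transformation code instead of maintaining it by hand. It is not how dbt or any vendor tool is actually implemented: the `MODELS` dictionary, the model names, and the helper functions are all hypothetical, and the only thing borrowed from dbt is the `{{ ref('...') }}` convention for naming an upstream model.

```python
import re

# Hypothetical model definitions: model name -> SQL text.
# Upstream dependencies are declared with dbt-style {{ ref('...') }} calls.
MODELS = {
    "stg_orders": "select * from raw.orders",
    "stg_customers": "select * from raw.customers",
    "customer_orders": (
        "select * from {{ ref('stg_orders') }} o "
        "join {{ ref('stg_customers') }} c on o.customer_id = c.id"
    ),
    "revenue_report": "select * from {{ ref('customer_orders') }}",
}

# Matches {{ ref('name') }} or {{ ref("name") }} and captures the name.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def build_lineage(models):
    """Return {model: sorted direct upstream models}, parsed from the code."""
    return {
        name: sorted(set(REF_PATTERN.findall(sql)))
        for name, sql in models.items()
    }


def upstream_closure(lineage, model):
    """Return every direct and indirect upstream model of `model`."""
    seen = set()
    stack = list(lineage.get(model, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(lineage.get(current, []))
    return sorted(seen)


lineage = build_lineage(MODELS)

if __name__ == "__main__":
    for model, parents in lineage.items():
        print(f"{model} <- {parents}")
    print("revenue_report depends on:", upstream_closure(lineage, "revenue_report"))
```

Because the lineage is recomputed from the code on every run, it cannot drift from what is deployed, which is the point of putting code ahead of governance metadata.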

Why would this consolidation not happen?

- The software people and DevOps tools will take over the data space. Data Teams and Software/IT want to be agile, but as more software people work in data teams, they will consolidate on a single toolset rooted in software developers’ tools.

- The cloud vendors are building data capabilities at a frantic rate, and their ‘walled garden’ will make it hard for any vendor to compete on tools for the data team. Azure is ahead in this area.

- Like Oracle in the 2000s, Snowflake and Databricks will win on being the everything platform for data analytics.

Conclusion

We are entering a tough few years economically. We are heading into a ‘data winter,’ just as the software field had a multi-year crunch 20 years ago. Perhaps out of this will come a data culture obsessed with creating value for its customers instead of adopting the latest cool tech buzzword, a culture that tests, iterates, and continuously improves efficiency.

Enterprise data teams are still challenged with their data sprawl and making their customers happy. They can’t just spend millions on new tech and hope it will deliver value next year. So the prime drivers will be reducing risk and lowering costs. Driving new opportunities and expansion takes a back seat. The prediction is this: those challenges create an opportunity to create a single integrated set of tools rooted in DataOps principles to help these teams govern, manage risk, and increase team productivity.