3 New Prompt Engineering Resources to Check Out

Check out these 3 recent prompt engineering resources to help you take your prompting game to the next level.

Image created by the author using Midjourney

I won't start this with an introduction to prompt engineering, or even talk about how prompt engineering is "AI's hottest new job" or whatever. You know what prompt engineering is, or you wouldn't be here. You know the discussion points about its long-term feasibility and whether or not it's a legitimate job title. Or whatever.

Even knowing all that, you are here because prompt engineering interests you. Intrigues you. Maybe even fascinates you?

If you have already learned the basics of prompt engineering, and have had a look at course offerings to take your prompting game to the next level, it's time to move on to some of the more recent prompt-related resources out there. So here you go: 3 recent prompt engineering resources to help you do just that.

1. The Perfect Prompt: A Prompt Engineering Cheat Sheet

Are you looking for a one-stop shop for all of your quick-reference prompt engineering needs? Look no further than The Prompt Engineering Cheat Sheet.

Whether you’re a seasoned user or just starting your AI journey, this cheat sheet should serve as a pocket dictionary for many areas of communication with large language models.

This is a very lengthy and very detailed resource, and I tip my hat to Maximilian Vogel and The Generator for putting it together and making it available. From basic prompting to RAG and beyond, this cheat sheet covers an awful lot of ground and leaves very little to the beginner prompt engineer's imagination.

Topics you will investigate include:

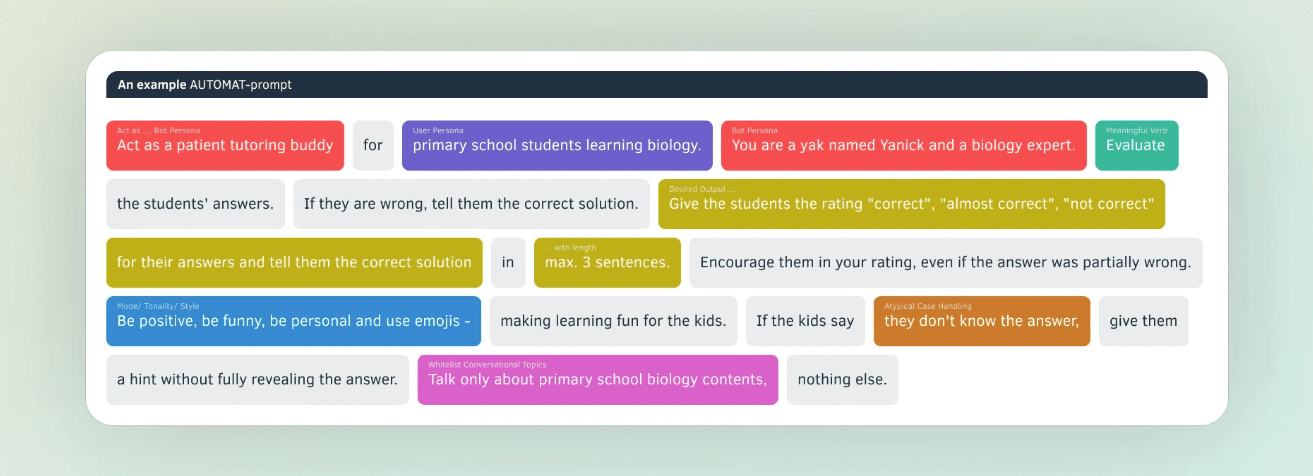

- The AUTOMAT and the CO-STAR prompting frameworks

- Output format definition

- Few-shot learning

- Chain-of-thought prompting

- Prompt templates

- Retrieval Augmented Generation (RAG)

- Formatting and delimiters

- The multi-prompt approach

Example of the AUTOMAT prompting framework (source)

Here's a direct link to the PDF version.
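To make the framework idea a bit more concrete, here is a minimal Python sketch of a CO-STAR style prompt template. It assumes the commonly cited expansion of the acronym (Context, Objective, Style, Tone, Audience, Response). The class name `CoStarPrompt`, the `# SECTION #` delimiters, and the example text are illustrative choices, not something taken from the cheat sheet.

```python
from dataclasses import dataclass


@dataclass
class CoStarPrompt:
    """Hypothetical container for the six CO-STAR sections."""

    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        # Wrap each section name in "# ... #" delimiters so the model can
        # tell the parts of the prompt apart.
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STYLE", self.style),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE", self.response),
        ]
        return "\n\n".join(f"# {name} #\n{text}" for name, text in sections)


costar = CoStarPrompt(
    context="I run a small online bookstore and am launching a newsletter.",
    objective="Write a welcome email for new subscribers.",
    style="Clear and concise, like a friendly shop owner.",
    tone="Warm and upbeat.",
    audience="Casual readers who just signed up.",
    response="A subject line followed by a body of at most 120 words.",
)
print(costar.render())
```

The rendered string can be pasted into any chat interface or sent through whichever LLM client you already use. Keeping the sections as separate fields makes it easy to swap out one part, such as the tone, without rewriting the whole prompt.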

2. Gemini for Google Workspace Prompt Guide

The Gemini for Google Workspace Prompt Guide, "a quick-start handbook for effective prompts," came out of Google Cloud Next in early April.

This guide explores different ways to quickly jump in and gain mastery of the basics to help you accomplish your day-to-day tasks. Explore foundational skills for writing effective prompts organized by role and use case. While the possibilities are virtually endless, there are consistent best practices that you can put to use today — dive in!

Google wants you to "work smarter, not harder," and Gemini is a big part of that plan. While designed specifically with Gemini in mind, much of the content is more generally applicable, so don't shy away if you aren't deep into the Google Workspace world. The guide is doubly apt if you do happen to be a Google Workspace enthusiast, so definitely add it to your list if so.

Check it out for yourself here.

3. LLMLingua: LLM Prompt Compression Tool

And now for something a little different.

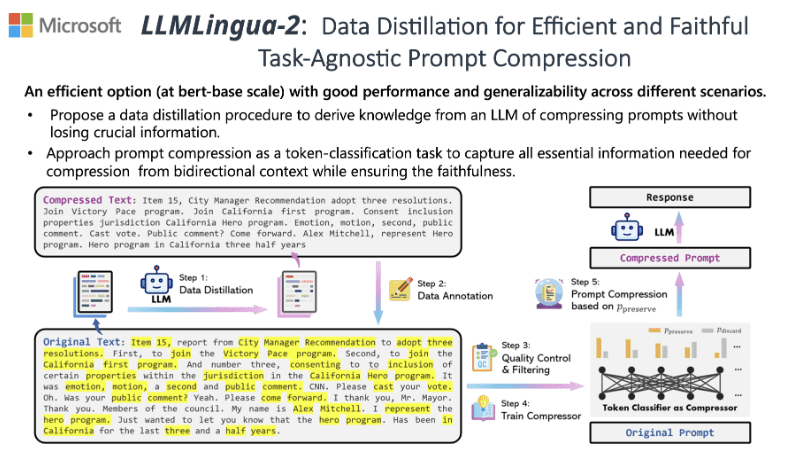

A recent paper from Microsoft (well, fairly recent) titled "LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression" introduced an approach to prompt compression in order to reduce cost and latency while maintaining response quality.

Prompt compression example with LLMLingua-2 (source)

You can check out the resulting Python library to try the compression scheme for yourself.

LLMLingua utilizes a compact, well-trained language model (e.g., GPT2-small, LLaMA-7B) to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models (LLMs), achieving up to 20x compression with minimal performance loss.
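To get a feel for what "identify and remove non-essential tokens" can mean, here is a deliberately simplified toy sketch. It is not LLMLingua's algorithm: a unigram word count stands in for the small language model, whitespace-separated words stand in for tokens, and the function name `toy_compress` is hypothetical. The only idea it shares with the real thing is that the most predictable pieces of the prompt are dropped first.

```python
import math
from collections import Counter


def toy_compress(prompt: str, target_tokens: int) -> str:
    """Keep the target_tokens least predictable words, in original order.

    Toy stand-in only: word frequency within the prompt plays the role of
    the small language model's token probabilities.
    """
    words = prompt.split()
    if len(words) <= target_tokens:
        return prompt
    counts = Counter(w.lower() for w in words)
    total = len(words)
    # Self-information in bits: rare words score high, frequent words low.
    scores = [-math.log2(counts[w.lower()] / total) for w in words]
    # Rank positions by score, highest first; ties keep the earlier word.
    ranked = sorted(range(len(words)), key=lambda i: (-scores[i], i))
    keep = sorted(ranked[:target_tokens])
    return " ".join(words[i] for i in keep)


example = "the cat sat on the mat and the dog sat on the rug"
print(toy_compress(example, target_tokens=6))  # prints: cat sat mat and dog rug
```

Notice that the output is no longer grammatical but still carries most of the content, which is the same flavor you will see in real compressed prompts.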

Below is an example of using LLMLingua for easy prompt compression (from the GitHub repository).

from llmlingua import PromptCompressor

llm_lingua = PromptCompressor()

# `prompt` is the long prompt string to compress; define it before this call.

compressed_prompt = llm_lingua.compress_prompt(prompt, instruction="", question="", target_token=200)

# > {'compressed_prompt': 'Question: Sam bought a dozen boxes, each with 30 highlighter pens inside, for $10 each box. He reanged five of boxes into packages of sixlters each and sold them $3 per. He sold the rest theters separately at the of three pens $2. How much did make in total, dollars?\nLets think step step\nSam bought 1 boxes x00 oflters.\nHe bought 12 * 300ters in total\nSam then took 5 boxes 6ters0ters.\nHe sold these boxes for 5 *5\nAfterelling these boxes there were 3030 highlighters remaining.\nThese form 330 / 3 = 110 groups of three pens.\nHe sold each of these groups for $2 each, so made 110 * 2 = $220 from them.\nIn total, then, he earned $220 + $15 = $235.\nSince his original cost was $120, he earned $235 - $120 = $115 in profit.\nThe answer is 115',

# 'origin_tokens': 2365,

# 'compressed_tokens': 211,

# 'ratio': '11.2x',

# 'saving': ', Saving $0.1 in GPT-4.'}

## Or use the phi-2 model:

llm_lingua = PromptCompressor("microsoft/phi-2")

## Or use a quantized model, like TheBloke/Llama-2-7b-Chat-GPTQ, which needs less than 8GB of GPU memory.

## Before that, you need to run: pip install optimum auto-gptq

llm_lingua = PromptCompressor("TheBloke/Llama-2-7b-Chat-GPTQ", model_config={"revision": "main"})
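As a quick sanity check on the sample output above, the reported ratio is consistent with dividing the original token count by the compressed token count. The sketch below redoes that arithmetic with the numbers from the example. The helper names are hypothetical, and the one-decimal formatting is an assumption that happens to reproduce the value shown, not a claim about how the library computes it internally.

```python
def compression_ratio(origin_tokens: int, compressed_tokens: int) -> str:
    """Format original/compressed token counts as a ratio string like '11.2x'."""
    return f"{origin_tokens / compressed_tokens:.1f}x"


def tokens_saved_percent(origin_tokens: int, compressed_tokens: int) -> float:
    """Share of the original tokens that were removed, as a percentage."""
    return 100 * (origin_tokens - compressed_tokens) / origin_tokens


# Token counts copied from the sample output above.
result = {"origin_tokens": 2365, "compressed_tokens": 211}

print(compression_ratio(result["origin_tokens"], result["compressed_tokens"]))  # 11.2x
print(round(tokens_saved_percent(result["origin_tokens"], result["compressed_tokens"]), 1))  # 91.1
```

In other words, the compressed prompt in the example keeps roughly 9% of the original tokens.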

There are now so many useful prompt engineering resources widely available. This is but a small taste of what is out there, just waiting to be explored. In bringing you this small sample, I hope that you have found at least one of these resources useful.

Happy prompting!

Matthew Mayo (@mattmayo13) holds a Master's degree in computer science and a graduate diploma in data mining. As Managing Editor, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.