Use Amazon OpenSearch Ingestion to migrate to Amazon OpenSearch Serverless

AWS Big Data

FEBRUARY 27, 2024

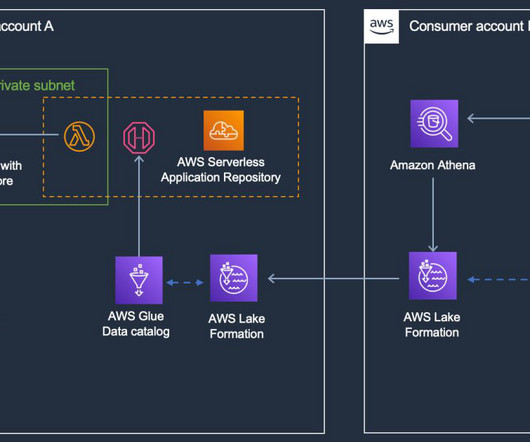

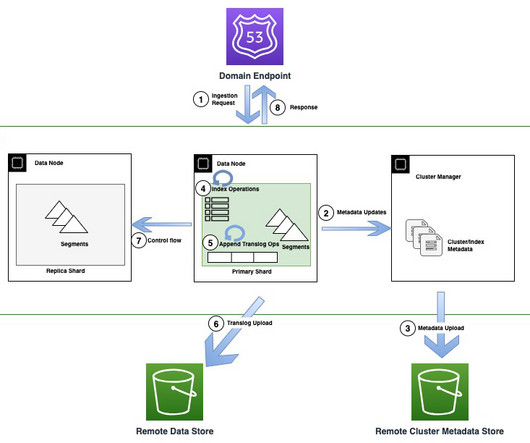

Migration of metadata such as security roles and dashboard objects will be covered in a subsequent post. Update the following information for the source: uncomment hosts and specify the endpoint of the existing OpenSearch Service domain. For now, you can leave the defaults, a minimum of 1 and a maximum of 4.
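As a rough illustration of the source settings described above, the following Python sketch assembles the values a pipeline would need: the source `hosts` entry and the default minimum and maximum of 1 and 4. The function name `build_pipeline_request`, the pipeline name, and the example endpoint are hypothetical. The dictionary keys are assumed to mirror the parameter names of the boto3 `osis` client's `create_pipeline` call, and the YAML layout is a simplified assumption with the sink and IAM role settings left out, so treat it as a starting point and not as the post's actual configuration.

```python
def build_pipeline_request(source_endpoint, pipeline_name="migration-pipeline",
                           min_units=1, max_units=4):
    """Assemble illustrative parameters for an OpenSearch Ingestion pipeline.

    Nothing is sent to AWS here; the function only builds a dictionary.
    """
    if not source_endpoint.startswith("https://"):
        raise ValueError("source_endpoint should be an https:// URL")
    if not 1 <= min_units <= max_units:
        raise ValueError("expected 1 <= min_units <= max_units")

    # Simplified pipeline body: only the source 'hosts' setting is filled in.
    # The sink (the serverless collection) and role settings are omitted.
    config_body = "\n".join([
        'version: "2"',
        f"{pipeline_name}:",
        "  source:",
        "    opensearch:",
        f'      hosts: ["{source_endpoint}"]',
    ])

    return {
        "PipelineName": pipeline_name,
        "MinUnits": min_units,   # default minimum from the text
        "MaxUnits": max_units,   # default maximum from the text
        "PipelineConfigurationBody": config_body,
    }


# Hypothetical endpoint, used only to show the shape of the result.
request = build_pipeline_request(
    "https://search-example-domain.us-east-1.es.amazonaws.com"
)
print(request["PipelineConfigurationBody"])
```

Keeping the request as plain data makes it easy to review the endpoint and capacity values before anything is created.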
