The Syntax, Semantics, and Pragmatics Gap in Data Quality Validate Testing

Data Teams often have too many things on their ‘to-do’ list. Customers are asking for new data, people need questions answered, and the tech stack is barely running – data engineers don’t have time to create tests. They have a backlog full of new customer features or data requests, and they go to work every day knowing that they won’t and can’t meet customer expectations.

To understand the time crunch that data engineers face and how it prevents them from testing their data sufficiently, we can think about three categories of data tests, using linguistics as an analogy: data syntax vs. semantics vs. pragmatics. In linguistics, Syntax is the study of sentence structure and grammar rules. While people can do what they want with language (and many often do), syntax helps ordinary language users understand how to organize words to make the most sense.

On the other hand, semantics is the study of the meaning of sentences. The sentence “Colorless green ideas sleep furiously” makes syntactic sense but is meaningless. Pragmatics takes semantics one step further because it studies the meaning of sentences within a particular context.

Let’s apply this idea from linguistics to data engineering challenges.

Syntax-Based Profiling and Testing: By profiling the columns of data in a table, you can look at values in a column to understand and craft rules about what is allowed for a column. For instance, if a column is filled with US zip codes, a row should not have the word ‘’bacon” in it. Data engineers need a quick way to profile data quickly and automatically cast a wide net to catch data anomalies. It’s analogous to setting up a burglar alarm in your home by deploying sensors at all possible entrances to catch a burglar who may only try one window. Automatically creating tests from profile data allows teams to maintain maximum sensitivity to real problems while minimizing false positives that are not worth the follow-up.

Semantics-Based Business Rule Testing. What is a meaningful test for your business? Do you know as a data engineer? For example, you can compare current data to previous or expected values. These tests rely upon historical values as a reference to determine whether data values are reasonable (or within the range of reasonable). For example, a test can check the top fifty customers or suppliers. Did their values unexpectedly or unreasonably go up or down relative to historical values? What is the acceptable rate of change? 10%? 50%? Data engineers are only sometimes able to make these business judgments. They must thus rely on data stewards or business customers to ‘fill in the blank’ on various data testing rules.

Pragmatics-Based Custom Testing. Many companies have widely diverging business units. For example, a pharmaceutical company may be organized into Research and Development (R&D), Manufacturing, Marketing and Sales, Supply Chain and Logistics, Human Resources (HR), and Finance and Accounting. Each unit will have unique data sets with specific data quality test requirements. Drug discovery data is so different from manufacturing data that data test cases require unique domain knowledge or a specific, pragmatic business context based on each group’s unique data and situation.

How to Do Data Syntax-Based Testing: DataOps TestGen

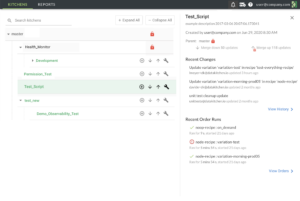

DataOps TestGen’s first step is to profile data and produce a precise understanding of every table and column. It looks at 51 different data characteristics that have proven critical to developing practical data tests, regardless of the data domain. TestGen then performs 13 ‘Bad Data’ detection tests, providing early warnings about data quality issues, identifying outlier data, and ensuring data are of the highest quality.

One of the standout features of DataOps TestGen is the power to auto-generate data tests. With a library of 28 distinct tests automatically generated based on profiling data, TestGen simplifies the testing process and saves valuable time. These tests require minimal or no configuration, taking the heavy lifting out of your hands, so you can focus on what matters – extracting insights from your data.

How to Do Data Semantics-Based Testing: DataOps TestGen

TestGen also offers 11 business rule data tests that, with minimal configuration, can be used for more customized tests. These tests allow users to customize testing protocols to fit specific business requirements with a “fill in the blank” model, offering a perfect blend of speed and robustness in data testing. These types of tests ensure your data not only meets general quality standards but also aligns with your unique business needs and rules. Data stewards, who may know more about the business than a data engineer, can quickly change a setting to adjust the parameters of a data test – without coding.

How to Do Data Pragmatics-Based Testing: DataOps Automation

Every company is unique. Every company has complex data and tools within each team, product, and division. Monitoring and testing the data to ensure its reliability continually is crucial. It is crucial to build domain-specific data validation tests and, for example, the results of data models for accuracy and relevance, evaluate the effectiveness of data visualizations. Checking the result of a model, an API call, or data-in-use in a specific analysis tool is critical to ensure that data delivery mechanisms are operating optimally. DataOps Automation provides robust testing and evaluation processes throughout the ‘last mile’ of the Data Journey.

Conclusion

DataKitchen’s DataOps Observability product enables this Data Journey monitoring and alerting. DataOps Observability is designed to seamlessly extract these status details and test results, including those produced by DataOps TestGen and Automation from every Data Journey, quickly with no changes to current jobs and processes, and compare them to expectations and alert when variances exist – allowing data teams to use and share the data test results that solve the syntax, semantics, and pragmatics gap in data quality validation testing.