Manage your data warehouse cost allocations with Amazon Redshift Serverless tagging

AWS Big Data

MARCH 27, 2023

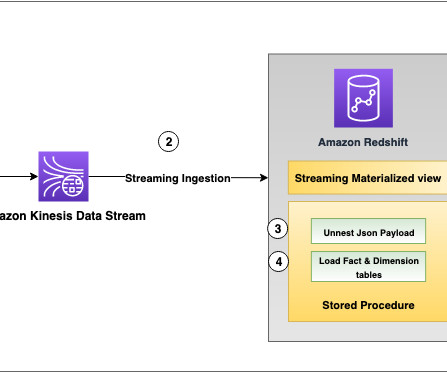

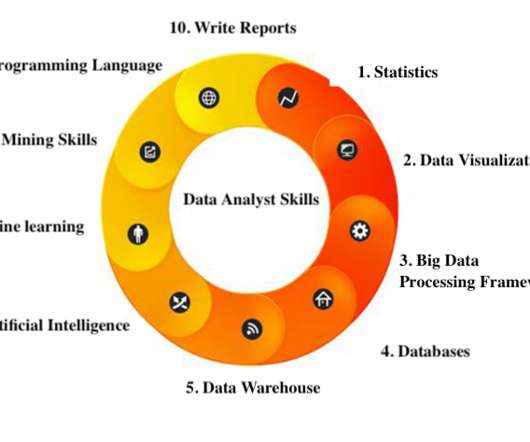

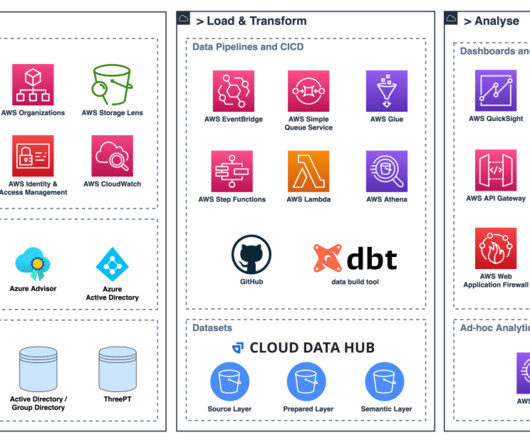

Amazon Redshift Serverless makes it simple to run and scale analytics without having to manage your data warehouse infrastructure. Tags allow you to assign metadata to your AWS resources. In AWS Cost Explorer, you can then visualize daily, monthly, and forecasted spend by combining a range of available filters.
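The Python sketch below shows how tagging and Cost Explorer filtering can fit together. It assumes the boto3 SDK, a `redshift-serverless` client for tagging a workgroup, and a Cost Explorer (`ce`) client for `get_cost_and_usage`. The tag key `cost-center`, the value `analytics`, the workgroup ARN, and all helper names are hypothetical. The `SERVICE` dimension value `"Amazon Redshift"` is also an assumption about how the service is labeled in your billing data. The request builders are kept as pure functions so they can be inspected without calling AWS.

```python
from __future__ import annotations

from datetime import date

VALID_GRANULARITIES = {"DAILY", "MONTHLY", "HOURLY"}


def to_serverless_tags(tags: dict[str, str]) -> list[dict[str, str]]:
    """Convert a plain dict into the lowercase key/value list used by
    the redshift-serverless TagResource call."""
    return [{"key": k, "value": v} for k, v in tags.items()]


def build_cost_request(tag_key: str, tag_values: list[str], start: date,
                       end: date, granularity: str = "DAILY") -> dict:
    """Build GetCostAndUsage parameters that keep only Amazon Redshift
    costs (assumed SERVICE value) carrying the given tag values, and
    group the results by that tag."""
    if granularity not in VALID_GRANULARITIES:
        raise ValueError(f"unsupported granularity: {granularity}")
    if end <= start:
        raise ValueError("end must be after start")
    return {
        # Cost Explorer expects ISO dates; the End date is exclusive.
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": granularity,
        "Metrics": ["UnblendedCost"],
        # Combine a service filter and a tag filter with a logical AND.
        "Filter": {
            "And": [
                {"Dimensions": {"Key": "SERVICE", "Values": ["Amazon Redshift"]}},
                {"Tags": {"Key": tag_key, "Values": list(tag_values)}},
            ]
        },
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }


def tag_workgroup(workgroup_arn: str, tags: dict[str, str]) -> None:
    """Attach tags to a Redshift Serverless resource (requires AWS credentials)."""
    import boto3  # imported lazily so the builders above work without boto3

    client = boto3.client("redshift-serverless")
    client.tag_resource(resourceArn=workgroup_arn, tags=to_serverless_tags(tags))


def fetch_costs(request: dict) -> dict:
    """Send the prepared request to Cost Explorer (requires AWS credentials)."""
    import boto3

    return boto3.client("ce").get_cost_and_usage(**request)
```

For example, `build_cost_request("cost-center", ["analytics"], date(2023, 3, 1), date(2023, 3, 27))` prepares a daily breakdown that can be passed to `fetch_costs`. Keep in mind that user-defined tags generally have to be activated as cost allocation tags in the Billing console before Cost Explorer can filter or group by them.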
