Generative AI with Large Language Models: Hands-On Training

This 2-hour training covers LLMs, their capabilities, and how to develop and deploy them. It uses hands-on code demos in Hugging Face and PyTorch Lightning.

Introduction

Large language models (LLMs) like GPT-4 are rapidly transforming the world and the field of data science. In just the past few years, capabilities that once seemed like science fiction have become a reality through LLMs.

The Generative AI with Large Language Models: Hands-On Training will introduce you to the deep learning breakthroughs powering this revolution, with a focus on transformer architectures. More importantly, you will directly experience the incredible breadth of capabilities the latest LLMs like GPT-4 can deliver.

You will learn how LLMs are fundamentally changing the game for developing machine learning models and commercially successful data products. You will see firsthand how they can accelerate the creative capacities of data scientists while propelling them toward becoming sophisticated data product managers.

Through hands-on code demonstrations leveraging Hugging Face and PyTorch Lightning, this training will cover the full lifecycle of working with LLMs. From efficient training techniques to optimized deployment in production, you will learn directly applicable skills for unlocking the power of LLMs.

By the end of this action-packed session, you will have both a foundational understanding of LLMs and practical experience leveraging GPT-4.

Training Outline

The training has four short modules that introduce you to large language models and teach you how to train your own model and deploy it to a server. You will also learn about the commercial value that LLMs can deliver.

1. Introduction to Large Language Models (LLMs)

- A Brief History of Natural Language Processing

- Transformers

- Subword Tokenization

- Autoregressive vs. Autoencoding Models

- ELMo, BERT, and T5

- The GPT (Generative Pre-trained Transformer) Family

- LLM Application Areas
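
To give a feel for the subword tokenization topic in this module, here is a minimal sketch of greedy longest-match splitting, similar in spirit to the WordPiece scheme used by BERT. It is not taken from the training materials: the function name `tokenize_word` and the tiny vocabulary are hypothetical, and real tokenizers learn their vocabularies from large corpora.

```python
def tokenize_word(word: str, vocab: set[str]) -> list[str]:
    """Split one word into subword pieces by greedy longest-match.

    Pieces that do not start the word carry a "##" prefix, following the
    WordPiece convention. Returns ["[UNK]"] if the word cannot be covered.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                pieces.append(piece)
                break
            end -= 1
        else:
            # No piece in the vocabulary matches at this position.
            return ["[UNK]"]
        start = end
    return pieces


# Hypothetical toy vocabulary, for illustration only.
toy_vocab = {"token", "##ization", "##ize", "train", "##ing"}

print(tokenize_word("tokenization", toy_vocab))
print(tokenize_word("training", toy_vocab))
```

The point of the sketch is that a rare word such as "tokenization" can be represented with a handful of frequent pieces, so the model does not need a separate vocabulary entry for every word form.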

2. The Breadth of LLM Capabilities

- LLM Playgrounds

- Staggering GPT-Family Progress

- Key Updates with GPT-4

- Calling OpenAI APIs, including GPT-4

3. Training and Deploying LLMs

- Hardware Acceleration (CPU, GPU, TPU, IPU, AWS chips)

- The Hugging Face Transformers Library

- Best Practices for Efficient LLM Training

- Parameter-Efficient Fine-Tuning (PEFT) with Low-Rank Adaptation (LoRA)

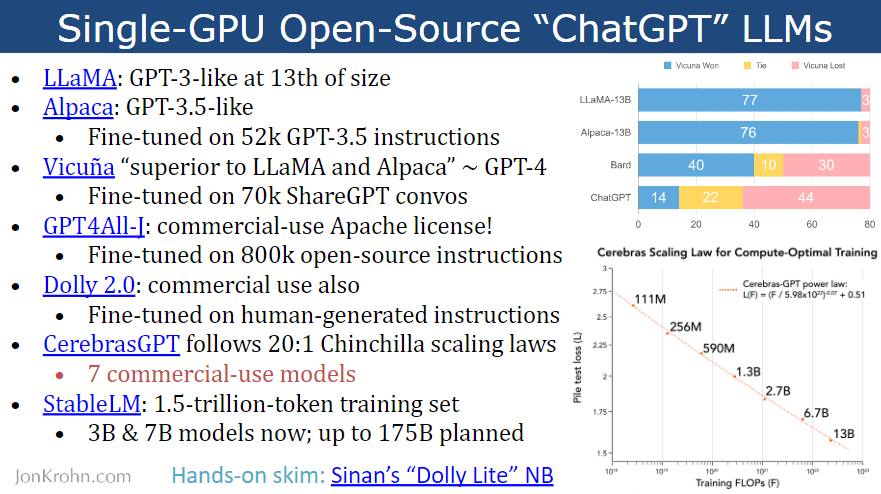

- Open-Source Pre-Trained LLMs

- LLM Training with PyTorch Lightning

- Multi-GPU Training

- LLM Deployment Considerations

- Monitoring LLMs in Production
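
To illustrate why the LoRA topic in this module matters for efficient training, here is a rough back-of-the-envelope sketch. LoRA freezes a weight matrix of shape `d_out x d_in` and trains two small matrices of shapes `d_out x r` and `r x d_in` instead. The layer size (4096 x 4096) and rank (8) below are assumed values chosen for illustration, not figures from the training, and `lora_param_counts` is a hypothetical helper name.

```python
def lora_param_counts(d_in: int, d_out: int, rank: int) -> tuple[int, int]:
    """Return (full, lora) trainable-parameter counts for one linear layer.

    full: every entry of the d_out x d_in weight matrix is trained.
    lora: only the low-rank factors B (d_out x rank) and A (rank x d_in)
          are trained; the original weight matrix stays frozen.
    """
    full = d_in * d_out
    lora = rank * (d_in + d_out)
    return full, lora


# Assumed example dimensions, for illustration only.
full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(f"Full fine-tuning: {full:,} trainable weights")
print(f"LoRA (rank 8):    {lora:,} trainable weights ({lora / full:.2%} of full)")
```

Under these assumptions the adapter trains well under one percent of the layer's weights, which is the main reason LoRA fits on much smaller hardware than full fine-tuning.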

4. Getting Commercial Value from LLMs

- Supporting ML with LLMs

- Tasks That Can Be Automated

- Tasks That Can Be Augmented

- Best Practices for Successful A.I. Teams and Projects

- What's Next for A.I.

Resources

The training includes links to external resources such as source code, presentation slides, and a Google Colab notebook. These resources make it interactive and useful for engineers and data scientists who are bringing generative AI into their workplace.

Here is a list of essential resources for building and deploying your own LLM using Hugging Face and PyTorch Lightning:

- Presentation Slides

- GitHub Code Source

- Google Colab (T5-Finetune)

- YouTube Video

- Jon Krohn (Official Website)

Discover the secret to success in just 2 hours! Don't wait any longer!

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.