Towards optimal experimentation in online systems

It is sometimes useful to think of a large-scale online system (LSOS) as an abstract system with parameters $X$ affecting responses $Y$. Here, $X$ is a vector of tuning parameters that control the system's operating characteristics (e.g. the weight given to Likes in our video recommendation algorithm) while $Y$ is a vector of outcome measures such as different metrics of user experience (e.g., the fraction of video recommendations resulted in positive user experiences). If we wish to tune the system parameters $X$ for optimal performance of $Y$, there are several challenges:

- The relationship between $X$ and $Y$ may be complex and poorly understood

- It may be impossible to simultaneously maximize every element of $Y$, requiring trade offs

- There may be hard constraints on the $X$ and $Y$, either individually or in combination, that limit what we deem to be acceptable operating points for the system

This blog post discusses such a comprehensive approach that is used at Youtube. If the relationship of $X$ to $Y$ can be approximated as quadratic (or any polynomial), and the objective and constraints as linear in $Y$, then there is a way to express the optimization as a quadratically constrained quadratic program (QCQP). Such an approach tells us what next set of experiments to run to simultaneously improve our understanding of the relationship between $X$ and $Y$, and find close-to-optimal operating points that lie within our operating constraints. Crucially, it takes into account the uncertainty inherent in our experiments. It is a big picture approach, worthy of your consideration.

Experiments, Parameters and Models

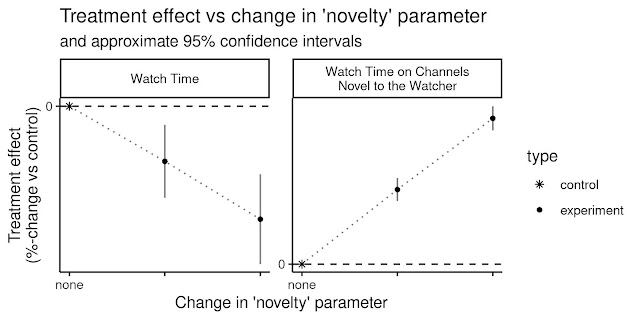

At Youtube, the relationships between system parameters and metrics often seem simple — straight-line models sometimes fit our data well. Figure 1 shows an example of real experiments where there was clearly a straight-line relationship between changes made to a parameter and changes observed in metrics.We can experiment more efficiently by relying on these straight-line relationships, if relationships between parameters and metrics are indeed well-described by straight lines. In geometry, two points or measurements determine a line, but in our setting, only one experiment would be needed to learn a straight-line model relating parameters and metrics, because we already know that the line goes through the origin (if we do not change system parameters, then metrics will not change either). If only one experiment was truly needed, then it was wasteful and inefficient to run several of them, as we did in the study shown in Figure 1. How could we have run our studies more efficiently?

Figure 2 indicates that experiments that change parameters more are more efficient. Taking measurements at parameter settings further from control parameter settings leads to a lower variance estimate of the slope of the line relating the metric to the parameter. That is true generally, not just in these experiments — spreading measurements out is generally better, if the straight-line model is a priori correct. (And sometimes even if it is not[1].) It is also a sound strategy when experimenting with several parameters at the same time.

|

| Figure 2: Spreading measurements out makes estimates of model (slope of line) more accurate. |

Also, it is theoretically possible that the only improvements to the system might involve changing several parameters at once — see Figure 3 for an example. In that case, it is absolutely necessary to experiment with more than one parameter at a time to have any chance of finding improvements: experiments with one parameter at a time are doomed. And in general, experimenting with several parameters at once gives us a better chance to succeed quickly. Perhaps there are 12 parameters and only one of them needs to be changed to a better value — a 12-parameter experiment may reveal this more quickly than 12 sequential one-parameter experiments.

Designing experiments on several parameters

For a single-parameter experiment in which the relationship between parameters and metrics was well-approximated by a straight line, it appeared that spreading measurements out was the right way to run more efficient experiments. This remains a good strategy when experimenting with many parameters at the same time, and also when the relationship between parameters and metrics is not exactly a straight line but rather a low-degree polynomial, as often happens in experiments at YouTube.In this section we describe central composite designs, our go-to recipe for "spreading measurements out'" in multiple-parameter experiments. In a later section, we say more about what "well spread-out'' should mean for such experiments by connecting a quantitative measure of "spread'" with model variance minimization.

|

| Figure 4: Visualization of a central composite design. |

If there are many parameters, for example if $p = 20$, then we might not be able to run all $2^p$ possible ways of setting each parameter to a lower or higher value. The workaround is to use a fractional factorial, which is a subset of all $2^p$ possibilities with some special symmetry properties.

For example if there are five parameters $x_1, \ldots x_5$ whose default values are zero, that can be safely changed by $\pm 1$ in conjunction with other parameter changes, or by $\pm \alpha$ on their own for some $\alpha \geq 1$, we might run the central composite design in Table 1.

Consider the fractional factorial part of this design, and focus on any column, e.g. the $x_1$ column: 8 of the 16 experiments set parameter $x_1$ to a low value $-1$, and the other 8 set $x_1$ to the high value $+1$; this is true for any parameter. Now consider any two parameters, e.g. $x_1$ and $x_2$: there are four possible ways of setting these parameters to $\pm 1$, and all four possibilities appear an equal number of times in the design. Likewise, for any 3 parameters/columns, each of the 8 possible combinations of $\pm 1$ appears an equal number of times. These symmetry properties can protect against some kinds of bias and make it possible to analyze fractional factorial designs simply by grouping. To estimate the effect of increasing parameter $x_1$, for example, we can compare the average response across the 8 experiments where $x_1=+1$ to the average response across the 8 experiments where $x_1=-1$: the effects of other parameters will mostly average out to zero by symmetry. Our experimentation platform supports this kind of grouped-experiments analysis, which allows us to see rough summaries of our designed experiments without much work.

We use these designs frequently, and so can you. Finding fractional factorial components on your own might be tricky, but NIST provides some useful fractional factorials[2], and the R package FrF2 [3][4] provides many more. For more on fractional factorial designs and orthogonal arrays, see [5][6][7].

Modeling live experiment data

Data scientists at YouTube are rarely involved in the analysis of typical live traffic experiments. Multiparameter experiments, however, generate richer data than standard A/B tests, and automated t-tests alone are insufficient to analyze them well. Designed experiments thus create requirements and opportunities for data scientists to assist engineering teams with inference about individual live experiments. To illustrate that, I'll describe some basic features of an analysis of a real multiparameter experiment.We set up a suite of experiments that varied 12 parameters of the YouTube Discovery System. With twelve parameters there are $2^{12} = 4096$ distinct ways of increasing or decreasing each parameter: we tried 256 of these, and added center (control) points and axial points to make a central composite design as described above, for a total of 290 experiments.

The data showed us that metrics are not exactly straight-line functions of parameters.

|

| Figure 5: Treatment effect vs parameter number 4. Estimates were produced by grouping experiments according to the value of the fourth parameter, and comparing metrics to control-arm experiments. |

Figure 5 displays one of clearest nonlinear relationships between a metric and a parameter in this study — the plot of treatment effect vs parameter looks like a parabola. There were also indications that some parameters were interacting with each other, so we used a second-order embedding when fitting our first model, which was

$$

\begin{equation}

y_i = \mu (1 + f(x_i)^\intercal \beta) + \epsilon_i\tag{1}

\end{equation}

$$where

- $i$ indexes experiments

- $y_i$ represents a metric measurement in experiment $i$ — for example the number of videos viewed by users in that experiment

- $\mu$ represents the expected value of the metric in the control arm

- $x_i = (x_{i1}, \ldots, x_{ip})$ represents system parameters 1 through $p=12$ in experiment $i$. In this particular experiment, and whenever possible, we choose parameter coordinates that represent change in system parameters: thus $x = 0$ represents control or production system parameters.

- $f(x)$ represents the quadratic feature embedding without an intercept, involving all linear terms and two-parameter interactions and quadratic effects, i.e., $f(x) = (x_1, \ldots, x_p, x_1^2 , \ldots x_p^2, x_1x_2, \ldots, x_{p-1}x_p)$ and $\beta$ represents the corresponding model coefficients.

- $\epsilon_i$ represent random error terms that are independent as i varies.

\begin{equation*}

\begin{split}

\Delta_i &= \frac{y_i}{\widehat{\mu}} - 1 \\

\widehat{\mu} &= \textrm{avg} \{ y_i \mid \textrm{experiment i is a control experiment} \}

\end{split}

\end{equation*}

$$Then we regress $\Delta_i$ on $f(x)$, by solving the least-squares problem$$

\widehat{\beta} = \arg \min \sum_i | \Delta_i - f(x_i)^\intercal \beta |^2

$$We have $O(10)$ main outcome metrics we care most about, and we fit separate models as above for each one.

There are enough users in each of our experiments that the implications of the central limit theorem hold fairly well: for us, the residual errors $\epsilon_i$ in (1) seem roughly Gaussian, which justifies use of simple linear models. If our experiments were smaller then we might need to model our metrics with Poisson, Binomial or other GLMs instead. This could complicate analysis and experiment design too. For linear models, the D-optimal experiment design (to be discussed later) does not depend on the unknown model coefficients $\beta$, but this is not true for general GLMs. Worth keeping in mind if you want to try these ideas when measurement error is not Gaussian.

Distilling a decision from the model

After we train metric models as above (1) we are left with the problem of what to do next? Or, more concretely, what changes in parameter settings (what $x$) would improve metrics the most? Our main tools for answering this question are (a) a vocabulary or language for describing what we want, called QCQP, and (b) software for solving QCQP which is readily available.We want to find parameter changes that will improve user experience metrics, but there are many metrics we care about, so tradeoffs need to be considered. A good way of deciding between multiple objectives is to align on exchange rates that specify equivalences[8]. For example, the value of helping a user find a new channel to which they want to subscribe to might be worth approximately the same as helping a user find $K$ videos they want to watch from a channel with which they are already familiar, for some value of $K$. Like currency exchange rates, equivalences allow us to convert expected changes in different measurements of user value into one common currency, or simple numeric summary: for example Subscriptions + Views-from-channels-to-which-the-user-is-currently-subscribed / $K$. Given clear metric exchange rates from stakeholders, our task is to find parameter settings that maximize a linear combination of treatment effects on different metrics, with weights in the linear combination derived from the exchange rates.

Suppose therefore that we have decided to find parameter settings that maximize a particular linear combination of $N$ metrics. We train $N$ different models, one per metric as described in the previous section, leading to $N$ estimates $\widehat \beta_1, \ldots, \widehat \beta_N$, one per metric, as before. We want to optimize over possible choices of $x$ the linear combination$$

\begin{equation}

L(x) = \lambda_1 f(x)^\intercal \widehat{\beta}_1 + \ldots + \lambda_N f(x)^\intercal \widehat{\beta}_N \tag{2}

\end{equation}

$$which represents a prediction for a linear combination of treatment effects in several metrics.

Because we chose a quadratic embedding — i.e., because $f(x) = (x_1, \ldots, x_p, x_1^2, \ldots, x_p^2, x_1x_2, \ldots, x_{p-1} x_p)$ involves only pairwise products and no terms of higher order, each term in the linear combination (2) is a quadratic function of $x$. Thus for each $i$ there is a matrix $A$, vector $b$, and constant $c$ such that $f(x)^\intercal\beta_i = x^\intercal A_ x + b_i^\intercal x + c_i$. The linear combination $L$ is likewise a quadratic function (its $A$, $b$ and $c$ are linear combinations of the $A_i$s, $b_i$s and $c_i$s).

So, the first optimization problem that comes to mind when thinking about what to do next is "$\max q(x)$'' for a particular quadratic function $q$ derived from our model -- a quadratic optimization problem. However, big decreases in any one dimension of user value are not well tolerated by decision-makers, even if they lead to big increases in others. We want all metrics to go up, or at least to ensure that none go down very much. This is a consequence of having multiple stakeholders with different preferences involved in the decision process. Therefore, we need to limit losses in the individual metrics that comprise the linear combination we maximize.

In symbols, the problem "maximize a linear combination of metrics while keeping all individual component metrics from going down too much'" looks like this:$$

\begin{split}

\max \lambda_1 f(x)^\intercal \widehat{\beta}_1 + \ldots + \lambda_N f(x)^\intercal \widehat{\beta}_N \\

\textrm{subject to } f(x)^\intercal\widehat\beta_i \geq \ell_i \textrm{ for } i = 1 \ldots N

\end{split}

$$Here $\ell_i$ is a lower bound on the acceptable changes in metric $i$. The functions in the objective and the constraints above are all quadratic, so the problem above is a quadratically constrained quadratic program (QCQP).

After we train our model (1), we seek parameter changes that improve our metrics, a problem that can be usefully described as a QCQP. But can we solve QCQP? In general, QCQP instances can be NP-hard, which is to say, very hard. However, we do not need the very-best-possible QCQP solution, we just need to improve user experiences somewhat: we don’t need to solve NP hard problems exactly, we just need parameter updates that improve on the current settings.

For the problems we have encountered in practice, the optimization software we use very quickly finds such parameter updates or indicates that no improvement is possible. Indeed, the speed with which we can type problem descriptions into our computers is more of a rate-limiting factor than the running time of the open-source solver we use. Our main tools are the difference-of-convex-programs paradigm[9] and the embedded conic solver[10]; the reference [11] is also very useful. And for studies involving few parameters, grid search is also possible.

Risk and Robustness

Our estimates $\widehat{\beta}$ of the "true'' coefficients $\beta$ of our model (1) depend on the random data we observe in experiments, and they are therefore random or uncertain. So too, are any parameter updates we calculate using those estimates, which makes parameter updates risky and uncertain. There is also uncertainty related to our modeling choices — did we select the correct polynomial embedding function $f(x)$, or is the true relationship better described by a different polynomial embedding? Ideally, the process we use to compute parameter updates should be robust, i.e., not overly sensitive to our particular modeling choices. In this section we’ll discuss how we approach these two kinds of uncertainty with QCQP.Uncertainty in coefficient estimates

\begin{equation}

(f(x)^\intercal \widehat{\beta}^{(1)} - f(x)^\intercal \widehat \beta )^2 + \ldots + (f(x)^\intercal \widehat{\beta}^{(K)} - f(x)^ \intercal \widehat \beta )^2 \tag{3}

\end{equation} which differs slightly from the textbook estimator[13]: (3) is slightly more convenient to compute and has a small upward bias for fixed $x_{new}$. The summands in this variance estimate represent the squared difference between predictions from the main model trained on all data and predictions from jackknife models trained on subsets of the data at parameter settings $x_{new}$. We prefer parameter updates $x$ at which the predicted increase in user value is moderate but certain, to updates where there is a larger predicted increase in user value but a great deal of uncertainty.

For example, between a parameter update yielding a predicted $X\% \pm 2X\%$ increase in a quality metric, and another update that is predicted to yield a $0.5X\% \pm 0.1X\%$ increase in the same metric, we prefer to proceed with the latter option. The reason is that if we find any way to improve user experiences at all, we can make a launch with potentially long-lasting impact to all of YouTube's many users, and we can optionally continue iterating on experiments if it seems that further parameter improvement is possible. On the other hand, if our guess about effective parameter updates does not improve user experiences, then there will be no launch, user experiences will not be improved, and further experimentation may be deprioritized or halted due to lack of progress. Utility or risk for us is close to a step function: it is important to find some improvement, and less important to make that improvement as big as possible right away.

\begin{split}

f(x)^\intercal \widehat{\beta}^{(i)} - f(x)^\intercal \widehat{\beta} - \epsilon \leq 0 \\

f(x)^\intercal \widehat{\beta} - f(x)^\intercal \widehat{\beta}^{(i)} - \epsilon \leq 0

\end{split} for $i = 1 \ldots 20$, which are equivalent to \begin{equation}

f(x)^\intercal \widehat{\beta} - \epsilon \leq f(x)^\intercal \widehat{\beta}^{(i)} \leq f(x)^\intercal \widehat{\beta} + \epsilon \tag{4}

\end{equation} constraining the parameters in such a way that predictions from the jackknife models will be close to the prediction from the main model trained on all data and making (3) small. If the prediction variance (3) at the solution of our QCQP seems too high for a particular choice of $\epsilon$, we can simply try a smaller value of $\epsilon$ in the constraints (4). This is easy, but it does have a real limitation. The jackknife estimate (3) is more or less OK for a fixed value of $x$, but when $x$ is chosen to minimize (3), we expect the estimate to have negative bias.

\[

\lambda_1 f(x)^\intercal\widehat\beta_1 +\ldots + \lambda_N f(x)^\intercal\widehat\beta_N \geq \ell

\]then we can add replicates of the same lower bound constraint with jackknife model coefficients in place of the main model coefficients

\begin{equation}

\lambda_1 f(x)^\intercal\widehat\beta^{(i)}_1 +\ldots + \lambda_N f(x)^\intercal\widehat\beta^{(i)}_N \geq \ell \tag{5}

\end{equation}In the display above, $\widehat\beta^{(i)}_j$ above represent coefficients in the $i$th jackknife model for the $j$th metric. This is similar to using only the left-hand side of (4), meaning roughly tolerating uncertainty in the preferred direction. A similar trick for "robustifying'' the objective works with the QCQP objective too, if we introduce a slack variable as explained below. The robust optimization literature has many other relevant ideas, some of which we are beginning to explore in our work.

Uncertainty related to modeling choices

In our experiments we often find that straight-line models are incorrect: we regularly see data patterns depart from straight-line models in ways that seem too big to be due to mere chance and also somewhat well-approximated by quadratic curves. Nevertheless, empirically, predictions from models that use simple linear embeddings can be better than predictions from models with quadratic embeddings: apparently there is a gap between knowing that the true relationship between parameters and metrics is not exactly a straight line on one hand, and understanding nonlinearity well enough to exploit it well on the other. Our studies occasionally fall in this gap.Use two models

\begin{split}

& \max q(x)\\

&\textrm{over all possible } x

\end{split}where quadratic function $q$ represents the predicted value of a metric that we want to increase, we can instead work on the problem

& \max s \\

&\textrm{subject to } q(x) \geq s \\

&\textrm{over all possible } (x, s)

\end{split}This move turns a quadratic objective into a linear objective in a new slack variable s, plus one quadratic constraint involving the original parameters and that slack variable. However, it doesn’t change the QCQP-ness of the problem. Thus, if we have two QCQP instances, representing optimization problems for two models, involving quadratic and linear embeddings, say, then we can rewrite them as optimizing over a single slack variable representing the minimum predicted value from both models, as follows:

\begin{split}

& \max s \\

&\textrm{subject to } q_{\textrm{linear}}(x) \geq s \\

&\textrm{subject to } q_{\textrm{quadratic}}(x) \geq s \\

&\textrm{over all possible } (x, s)

\end{split}Finding a decision procedure that works well if one set of assumptions is correct, and not too badly if a different set of assumptions is correct, is a classic strategy for making decision procedures robust, discussed for example in Chapter 1 of [16], and in [17].

Regularization

Another classic idea for making decision procedures robust, found e.g., in [16] and [18], is to use regularization to select a model that uses the simplest adequate set of assumptions from a large collection of possibilities. We sometimes use $L^1$ regularization when we fit models to our live experiment data to select the most important non-linear components of the embedding function $f(x)$. The Lasso gives us different estimates of model coefficients $\widehat\beta$, but these are not different in shape or kind from the estimates we get from non-regularized regression. So regularization, for example via Lasso, can easily be combined with QCQP to make decisions more robust.Some practical advice

This section mentioned strategies for mitigating model uncertainty. The main point was to describe some options and say that QCQP is expressive enough to encompass them. But it is natural to want practical advice too, so I will hazard a few general remarks from personal experience.In my experience the Lasso is more helpful the more parameters there are in the system. The number of possible second-order terms obviously grows quadratically in the number of parameters, and in the 12-parameter experiment previously described, the fully quadratic embedding has 12 linear terms and 78 second-order terms. Interactions between parameters in different YouTube recommendation subsystems definitely exist, but important interactions and quadratic effects seem relatively rare. So, most of those 78 parameters are not needed, and while regularization may create a little bias in prediction, it reduces variance more than enough to compensate. In your setting, too, it may be the case that regularization is more necessary the more parameters you attempt to optimize at once. Another piece of advice - offered more tentatively - is that seeking parameter-updates where variance (3) is bounded by imposing two-sided uncertainty constraints (4) may be less effective than using one-sided constraints, e.g. as in (5).

Optimal Design

Suppose $y_i = f(x_i)^\intercal \beta + \epsilon_i$ with $\epsilon_i$ independent and identically distributed for $i=1 \ldots N$, and that $\widehat\beta$ is the least-squares estimate of $\beta$. The variance of the prediction for a new point $x_{new}$ from the fitted model will be\[

\textrm{Var}(f(x_{new})^\intercal \widehat \beta ) = f(x_{new})^\intercal \textrm{Var}(\widehat \beta)f(x_{new}).

\] From this it appears that minimizing $\textrm{Var}(\widehat \beta)$ will tend to minimize model prediction variance too, though it depends on the value of $x_{new}$. As it turns out, minimizing $\det (f(X)^\intercal f(X))^{-1}$ is equivalent to minimizing the maximum possible prediction variance over different points $x_{new}$ where one might want a prediction. That is the main content of the General Equivalence Theorem: see [19] for a precise statement and proof. A design which minimizes this determinant is called D-optimal.

Because of its great practical importance, D-optimality is very well studied and there are a number of algorithms for solving it (e.g., the Fedorov-Wynn exchange algorithm[20]). At YouTube we have had good experiences with the R package OptimalDesign[21]; and modern general-purpose optimization software can handle this problem too[22][23][24].

So, what is the connection between D-optimality and central composite designs? As it happens, fractional factorial designs are D-optimal for linear models that use simple linear embeddings, i.e., $f(x) = x$, and central composite designs are nearly D-optimal for models that include quadratic components and pairwise interactions: not quite optimal, but pretty close. This is the main reason they are good from our perspective, yet they have several other desirable properties too: see [25] for further discussion.

Here is how we think about experiment design. First, we pick a subset of parameter space sufficiently small that the relationship between parameters and metrics is easy to model, e.g. with a low-order polynomial, but big enough so that it is likely to contain some parameter settings meaningfully better for our users than the current settings. This task is very context-dependent, so it is difficult to say anything useful and general about it. Next we choose a set of experiments to run, in that space of parameters, that maximizes information useful for model-fitting. Spreading points out increases information, in general, but it makes low-order models less relevant: e.g. spreading points out is ideal for learning the simple straight-line model, but the more we spread points out, the less likely it is that the relationship actually is a straight line. So there is some tension between spreading points out (to reduce variance of estimates in the working model) and keeping points close (to reduce bias from model misspecification). Spending some experiment budget on data for checking the need for a more complicated higher order model can be useful — see [25] for discussion on this.

In other contexts experiments may have a fixed cost, which sometimes compels the use of low-resolution fractional factorial designs. At YouTube — and perhaps in your work — cost is proportional to the size and duration of experiments, and experimental "material'' is essentially infinitely divisible. There is no fixed per-experiment cost, so we can set up as many experiments as we want, accepting that doing so makes the measurements in each one less precise. This makes it possible for us to use high resolution designs, and even the so-called approximate designs that take measurements of different resolutions at different points. Perhaps you can do so too.

Eloquent optimization

The QCQP vocabulary for describing optimization problems has vast expressive capacity, allowing us, as we have already seen, to optimize target metrics while limiting losses in other metrics, mitigate uncertainty due to statistical noise and achieve robustness with respect to modeling choices. This section concludes our description of how we use QCQP for making good decisions based on models by enforcing parameter constraints and optimizing metric trends. The key idea in this section is to add more quadratic constraints — doing so preserves the QCQP-ness of the problem and does not (in principle at least) change the techniques needed to solve it.Our parameter selection task is a quadratically constrained quadratic program (QCQP), which is to say a problem of the form

\begin{split}

& \min q_0(x) \\

& \textrm{ such that } q_i(x) \leq 0 \textrm { for } i = 1 \ldots N \\

& \textrm{ over } x

\end{split}for quadratic functions $q_0, \ldots , q_N$. By introducing a slack variable $s$, setting $z = (s, x)$ and $\tilde q_0(z) = q(x) - s$, $\tilde q_i(z) = q(x)$ $i=1\ldots N$, any QCQP can be rewritten to involve the minimization of a linear objective subject to quadratic constraints:

\begin{split}

& \min s \\

& \textrm{ such that } \tilde q_i(z) \leq 0 \textrm { for } i = 0 \ldots N \\

& \textrm{ over } z

\end{split}

The remarkable flexibility of this problem class derives from the many desiderata — objectives we want to optimize or constraints we want to satisfy — that can be encoded in quadratic functions and added to the QCQP constraint set.

- QCQP allows us to constrain parameters: To reduce risks associated with trying to generalize far beyond the support of our training data, we constrain the solutions to our parameter optimization problems to lie in a region of parameter space close to our experiments by adding constraints to the QCQP.

- QCQP can handle ANCOVA: In analysis of A/B tests, pre-experiment metric measurements are used by methods like ANCOVA[26], CUPED[27] and PrePost[28] to reduce variance of treatment effect estimates. We use PrePost in most of our A/B tests, so we have pre-experiment metric measurements readily available that we can use as covariates in our models. Including these covariates in our models makes coefficient estimates much more accurate.

- QCQP is not merely for quadratic embeddings: Note that $v = u \times u$ and $w = u \times v$ together imply that $w = u^3$ : this demonstrates that cubic functions (like $u^3$) can be written as quadratic functions (like $u \times v$) in additional variables (like $v$) subject to quadratic constraints (like $v= u \times u$). The same is true for higher-degree polynomials: they can be written as quadratic functions in additional variables subject to quadratic constraints. So if we needed a cubic or higher-order polynomial embedding for our model, the QCQP vocabulary could accommodate the description of the resulting parameter-selection problem.

Conclusion

This blog post was about three powerful ideas for experiment-driven product improvement through parameter optimization:- Online experiments are training data for a statistical model.

- The choice of model has specific implications about what training data is most valuable and informative, i.e., what experiments ought to be run.

- The fitted model contains an answer to the question "what to try next" that can be efficiently extracted with optimization tools. These tools help us make intelligent tradeoffs between different objectives, select good options with minimum uncertainty, and achieve robustness with respect to modeling choices.

References

Factorial 2-Level Designs. Journal of Statistical Software, 56(1):1-56, 2014.

[14] D. Bertsimas and D. den Hertog. Robust and Adaptive Optimization. Dynamic Ideas LLC, 2022.

[15] A. Prékopa. Stochastic Programming. Mathematics and Its Applications. Springer Netherlands, 2013.

[16] E.L. Lehmann and J.P. Romano. Testing Statistical Hypotheses. Springer Texts in Statistics. Springer New York, 2006.

[17] Peter J. Kempthorne. Controlling Risks under Different Loss Functions: The Compromise Decision Problem. The Annals of Statistics, 16(4):1594– 1608, 1988.

[18] Charles J. Stone. Admissible Selection of an Accurate and Parsimonious Normal Linear Regression Model. The Annals of Statistics, 9(3):475 – 485, 1981.

[19] J. Kiefer. General Equivalence Theory for Optimum Designs (Approximate Theory). The Annals of Statistics, 2(5):849–879, 1974.

[20] Luai Al Labadi. Some refinements on Fedorov’s algorithms for constructing D-optimal designs. Brazilian Journal of Probability and Statistics, 29(1):53 – 70, 2015.

[21] Radoslav Harman and Lenka Filova. OptimalDesign: A Toolbox for Computing Efficient Designs of Experiments, 2019. R package version 1.0.1.

[22] Steven Diamond and Stephen Boyd. CVXPY: A Python-embedded modeling language for convex optimization. Journal of Machine Learning Research, 17(83):1–5, 2016.

[23] Akshay Agrawal, Robin Verschueren, Steven Diamond, and Stephen Boyd. A rewriting system for convex optimization problems. Journal of Control and Decision, 5(1):42–60, 2018.

[24] Guillaume Sagnol and Maximilian Stahlberg. PICOS: A Python interface to conic optimization solvers. Journal of Open Source Software, 7(70):3915, 2022.

[25] G.E.P. Box and N.R. Draper. Empirical Model-Building and Response Surfaces. Wiley Series in Probability and Statistics. Wiley, 1987.

[26] R.A. Fisher. Statistical Methods for Research Workers. Biological monographs and manuals. Oliver and Boyd, 1925.

[27] Alex Deng, Ya Xu, Ron Kohavi, and Toby Walker. Improving the sensitivity of online controlled experiments by utilizing pre-experiment data. Proceedings of the Sixth ACM International Conference on Web Search and Data Mining, WSDM ’13, page 123–132, New York, 2013.

[28] Jacopo Soriano. Percent change estimation in large scale online experiments. arXiv, 2019.

Comments

Post a Comment