The Perilous State of Today’s Data Environments

Data teams often navigate a labyrinth of chaos within their databases. The core issue plaguing many organizations is the presence of out-of-control databases or data lakes characterized by:

- Unrestrained Data Changes: Numerous users and tools incessantly alter data, leading to a tumultuous environment.

- Extrinsic Control Deficit: Many of these changes stem from tools and processes beyond the immediate control of the data team.

- Unregulated ETL/ELT Processes: The absence of stringent data quality tests in ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) processes further exacerbates the problem.

As a result, data teams are often left shouldering the blame for poor data quality, feeling powerless in the face of changes imposed by others.

A Call for Rapid Problem Identification and Resolution

Data teams urgently need tools and strategies to identify data issues before they escalate swiftly. The key lies in proactively detecting anomalies and notifying responsible parties to implement corrections. The goal is to establish a system that can:

- Continual Monitoring of Tables (or buckets):

- Implement automated monitoring systems that continuously track changes across all database tables.

- Monitor for freshness, schema changes, volume, field health/quality, new tables, and usage.

- Identifying Anomalies:

- Use advanced algorithms to detect anomalies in data patterns.

- Establish baseline metrics for normal database operations, enabling the system to flag deviations as potential issues.

- Assigning Responsibility and Prompting Action:

- Integrate the monitoring system with a user activity log to trace specific user or tool data changes.

- Develop an automated notification system to alert responsible parties about the detected anomalies.

- Notification to Affected Parties:

- Once a problem is identified and the responsible party is notified, informing those impacted by the change is crucial.

- Implement a communication protocol that swiftly informs stakeholders, allowing them to brace for or address the potential impacts of the data change.

- Building a Culture of Accountability:

- Encourage a culture where data integrity is everyone’s responsibility.

- Offer training and resources to help all users understand the impact of their actions on the database.

- Iterative Improvement:

- Constantly refine your monitoring and anomaly detection systems based on feedback and new challenges.

- Adopt an iterative approach, characteristic of DataOps and Agile methodologies, to continuously improve data processes and systems.

Solutions to Reign in the Chaos

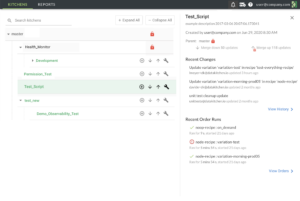

- Implementing Data Observability Platforms: Tools like DataKitchen’s DataOps Observability provide an overarching view of the entire Data Journey. They enable continuous monitoring of data transformations and integrations, offering invaluable insights into data lineage and changes.

- Adopting Automated Data Quality Tests: Utilizing DataKitchen DataOps TestGen, data teams can introduce automated quality checks within their ETL/ELT processes. Monitor freshness, schema changes, volume, and column health are standard. These tests can identify inconsistencies, errors, and anomalies in real time, providing an early warning system for potential data issues.

- Setting Up Alert Systems: Establishing automated alerts that notify relevant stakeholders about identified anomalies is critical. This system should be capable of discerning who made the change, what was changed, and who might be impacted by it.

- Creating a Culture of Data Responsibility: Cultivating an environment where each stakeholder understands the impact of their data interactions promotes accountability. It is essential to educate all users on the importance of data integrity and their role in maintaining it.

- Streamlining Communication Channels: Develop clear and efficient communication protocols to swiftly reach out to individuals responsible for data anomalies and those affected by them. This approach ensures quick resolution and minimizes the impact of data issues.

The Path Forward

The journey to taming a disorderly database environment is complex but achievable. By leveraging advanced data observability tools, automated testing, and fostering a culture of accountability, data teams can transition from reactive to proactive. This shift reduces the burden of blame and enhances the overall data quality, leading to more reliable and trustworthy data ecosystems.

Conclusion

In conclusion, the key to mastering the chaotic database environment lies in embracing technology and fostering a culture of shared responsibility. By continually monitoring databases, identifying anomalies, effectively communicating with responsible and affected parties, and fostering a culture of accountability, data teams can transition from being the bearers of bad news to champions of data integrity. The journey isn’t easy, but with the right approach, tools, and mindset, the chaos of the dastardly, dark, disorderly database can be transformed into an orderly, efficient, and trustworthy data environment.