In the first blog of the Universal Data Distribution blog series, we discussed the emerging need within enterprise organizations to take control of their data flows. From origin through all points of consumption both on-prem and in the cloud, all data flows need to be controlled in a simple, secure, universal, scalable, and cost-effective way. With the rapid increase of cloud services where data needs to be delivered (data lakes, lakehouses, cloud warehouses, cloud streaming systems, cloud business processes, etc.), controlling distribution while also allowing the freedom and flexibility to deliver the data to different services is more critical than ever.

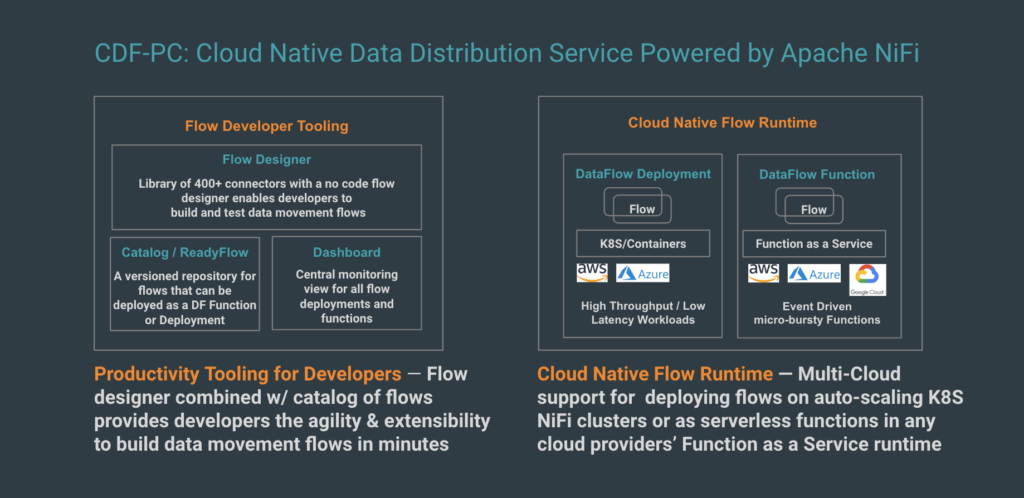

Cloudera DataFlow for the Public Cloud (CDF-PC), a cloud-native universal data distribution service powered by Apache NiFi, was built to solve data collection and distribution challenges with four key capabilities: connectivity and application accessibility, indiscriminate data delivery, prioritized streaming data pipelines, and developer accessibility.

In this second installment of the Universal Data Distribution blog series, we will discuss a few different data distribution use cases and deep dive into one of them.

Data distribution customer use cases

Companies use CDF-PC for diverse data distribution use cases, ranging from cybersecurity analytics and SIEM optimization via streaming data collection from hundreds of thousands of edge devices, to self-service analytics workspace provisioning and hydrating data into lakehouses (e.g., Databricks, Dremio), to ingesting data into cloud providers’ data lakes backed by their cloud object storage (AWS, Azure, Google Cloud) and cloud warehouses (Snowflake, Redshift, Google BigQuery).

There are three common classes of data distribution use cases that we often see:

- Data Lakehouse and Cloud Warehouse Ingest: CDF-PC modernizes customer data pipelines with a single tool that works with any data lakehouse or warehouse. With support for more than 400 processors, CDF-PC makes it easy to collect data and transform it into the format that your lakehouse of choice requires. CDF-PC gives you the flexibility either to treat unstructured data as such, achieving high throughput by not enforcing a schema, or to give unstructured data structure by applying a schema and then transforming it with the NiFi Expression Language or SQL queries.

- Cybersecurity and Log Optimization: Organizations can lower the cost of their cybersecurity solution by modernizing data collection pipelines to collect and filter real-time data from thousands of sources worldwide. Ingesting all device and application logs into your SIEM solution is not a scalable approach from a cost and performance perspective. CDF-PC allows you to collect log data from anywhere and filter out the noise, keeping the data stored in your SIEM system manageable.

- IoT & Streaming Data Collection: This use case requires IoT devices at the edge to send data to a central data distribution flow in the cloud, which scales up and down as needed. CDF-PC is built for handling streaming data at scale, allowing organizations to start their IoT projects small, but with the confidence that their data flows can manage data bursts caused by adding more source devices and handle intermittent connectivity issues.
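The schema-then-SQL idea and the log-filtering idea above can be illustrated with a small Python sketch. It uses the standard-library `sqlite3` module as a stand-in for a record-oriented SQL step (NiFi's QueryRecord processor plays a similar role inside a flow, but this is not NiFi code). The log line format, the field names, and the WARN/ERROR threshold are hypothetical assumptions chosen for illustration.

```python
import re
import sqlite3
from typing import Iterable, List, Tuple

# Hypothetical raw log format: "<ISO timestamp> <host> <SEVERITY> <message>"
LOG_PATTERN = re.compile(r"^(\S+)\s+(\S+)\s+(DEBUG|INFO|WARN|ERROR)\s+(.*)$")


def apply_schema(lines: Iterable[str]) -> List[Tuple[str, ...]]:
    """Give unstructured lines a structure; lines that do not match are dropped."""
    records = []
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match:
            records.append(match.groups())
    return records


def filter_for_siem(lines: Iterable[str]) -> List[Tuple[str, ...]]:
    """Keep only WARN/ERROR events so the SIEM stores signal, not noise."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE logs (ts TEXT, host TEXT, severity TEXT, message TEXT)")
        conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?)", apply_schema(lines))
        # The SQL query acts as the transformation/filter step over structured records.
        cursor = conn.execute(
            "SELECT ts, host, severity, message FROM logs "
            "WHERE severity IN ('WARN', 'ERROR') ORDER BY ts"
        )
        return cursor.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    raw = [
        "2022-06-01T10:00:00Z pos-017 INFO heartbeat ok",
        "2022-06-01T10:00:01Z pos-017 ERROR card reader timeout",
        "not a log line",
        "2022-06-01T10:00:02Z pos-042 WARN low paper",
    ]
    for row in filter_for_siem(raw):
        print(row)
```

Only records that match the assumed schema reach the query, and only the higher-severity ones leave it, which is the "filter out the noise" step in miniature.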

IoT and streaming data collection use case: collect POS data from the edge and globally distribute to multiple cloud services

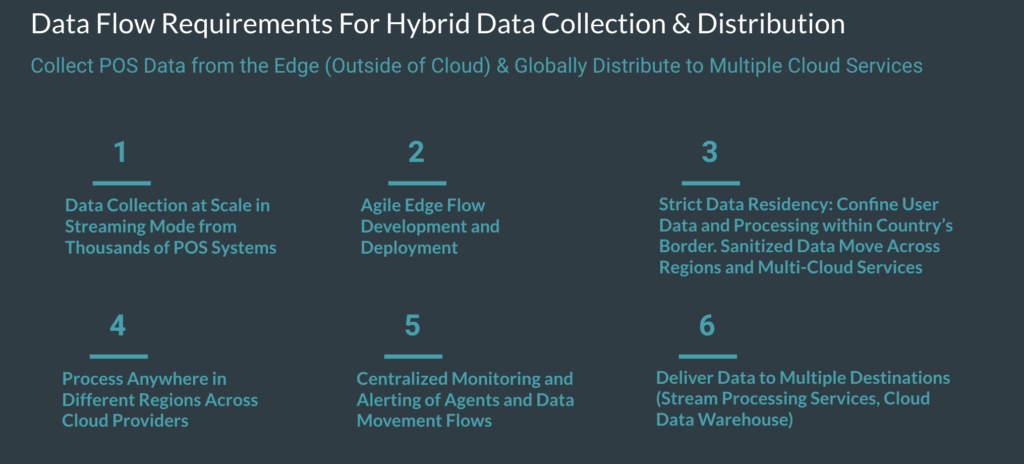

Let's double-click on the IoT and streaming data collection use case category with a specific example from a global retail company and see how CDF-PC was used to solve the customer’s data distribution needs. The customer is a multinational retail company that wants to collect data from point of sale (POS) systems across the globe and distribute it to multiple cloud services. The company had six key requirements:

- Requirement 1: The company has thousands of point of sale systems and needs a scalable way to collect the data in real-time streaming mode.

- Requirement 2: Developers need an agile, low-code approach to develop edge data collection flows in different regions and then easily deploy them to thousands of point of sale systems.

- Requirement 3: Data residency. POS data cannot leave its region of origin, and cannot be processed outside it, until it has been redacted based on local geo rules.

- Requirement 4: Because these geo rules differ, the company must use different cloud providers and be able to process data in different regions.

- Requirement 5: Given the distributed nature of the requirements, centralized monitoring across regions and cloud providers is critical.

- Requirement 6: The company needs to deliver data to diverse destinations and services, including cloud provider analytics services, Snowflake, and Kafka, without requiring multiple point solutions.

Addressing the hybrid data collection and distribution requirements with a data distribution service

The solution was implemented using the latest release of Cloudera DataFlow for the Public Cloud (CDF-PC) and Cloudera Edge Management (CEM):

- CDF-PC 2.0 Release: Supports the latest Apache NiFi release (1.16) and a new inbound connections feature that makes it easy to create ingress gateway endpoints for edge clients to stream data into the service. New connectors have also been added that make it easy to ingest and stream data into cloud warehouses like Snowflake.

- CEM 4.0 Release: The latest release of CEM provides not only edge flow management capabilities but also advanced agent management and monitoring.

Check out the following video to see how CDF-PC and CEM were used to address the customer's six data distribution requirements:

The solution recap

The diagram below describes how the solution was implemented to address these requirements.

- We used Cloudera Edge Management to develop edge data collection flows that ingest the POS data as close to its origin as possible and stream it to a data distribution service. This decentralized streaming data collection approach addresses the scale and agility needs of requirements 1 and 2.

- Each POS MiNiFi agent streams data to a distribution flow powered by CDF-PC. The distribution flow runs in the region and on the cloud provider dictated by the geography where the POS data originates. This addresses the data residency and process-anywhere needs of requirements 3 and 4.

- Drilling into one of these NiFi data distribution flows, we see that it consists of three components: ingest, process, and distribute. Because hundreds of thousands of clients generate POS data, using a connector to connect to each of these clients is not a scalable model. We showed that the latest CDF-PC release supports setting up ingress gateways across any cloud provider in a few clicks, automating the creation of load balancers, DNS records, and certificates. The ingress gateway lets each POS client stream data to its endpoint.

- Once the data reaches the ingress gateway, the NiFi distribution flow performs routing, filtering, and redaction before delivering it to downstream services, including Cloudera Streams Processing and Snowflake, addressing requirement 6. The latest CDF-PC release also makes ingestion into Snowflake easier with the new Snowflake connection pool controller service.

- Finally, CDF-PC and CEM provide a centralized monitoring and management view across all edge agents and all regional data distribution flows on multiple cloud providers, addressing requirement 5's need for a centralized view of the distributed assets.
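The route, filter, redact, and distribute steps of a regional flow can be sketched in plain Python to show the ordering that the residency requirement implies. This is a hedged illustration, not NiFi or CDF-PC code: the `PosEvent` fields, the region codes, the `GEO_RULES` table, the zero-amount filter, and the sink callables are all hypothetical stand-ins for what a regional NiFi flow would do with its own processors.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class PosEvent:
    """Hypothetical POS record; field names are illustrative only."""
    store_id: str
    region: str  # region of origin, e.g. "eu" or "us" (hypothetical codes)
    card_number: str
    customer_email: str
    amount_cents: int


def mask(value: str, keep_last: int = 4) -> str:
    """Replace all but the last `keep_last` characters with '*'."""
    if keep_last <= 0:
        return "*" * len(value)
    return "*" * max(len(value) - keep_last, 0) + value[-keep_last:]


# Hypothetical per-region redaction rules; real rules would come from local regulations.
GEO_RULES: Dict[str, Callable[[PosEvent], PosEvent]] = {
    "eu": lambda e: replace(
        e, card_number=mask(e.card_number), customer_email=mask(e.customer_email, 0)
    ),
    "us": lambda e: replace(e, card_number=mask(e.card_number)),
}

# A sink stands in for a downstream destination such as Kafka or Snowflake.
Sink = Callable[[PosEvent], None]


def distribute(event: PosEvent, flow_region: str, sinks: List[Sink]) -> Optional[PosEvent]:
    """Route, filter, redact, then fan out one event inside a regional flow."""
    # Routing: a regional flow only accepts events that originated in its own region.
    if event.region != flow_region:
        raise ValueError(f"event from {event.region!r} must not be processed in {flow_region!r}")
    # Filtering (hypothetical rule): drop non-sale events such as zero-amount heartbeats.
    if event.amount_cents <= 0:
        return None
    # Redaction: fail closed if no geo rule exists for this region.
    rule = GEO_RULES.get(event.region)
    if rule is None:
        raise KeyError(f"no redaction rule for region {event.region!r}")
    redacted = rule(event)
    # Distribution: only the redacted record is handed to downstream sinks.
    for sink in sinks:
        sink(redacted)
    return redacted


if __name__ == "__main__":
    kafka_buffer: List[PosEvent] = []
    snowflake_buffer: List[PosEvent] = []
    sale = PosEvent("store-7", "eu", "4111111111111111", "ana@example.com", 1299)
    result = distribute(sale, "eu", [kafka_buffer.append, snowflake_buffer.append])
    print(result)
```

The sketch deliberately fails closed: an event from another region, or from a region without a redaction rule, raises an error instead of being forwarded, so unredacted data never reaches a sink outside its region of origin.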

Getting started

To learn more about implementing your own IoT use cases, ingesting data into your data lakes and lakehouses, or delivering data to various cloud services, take our interactive product tour or sign up for a free trial.