Tuning Adam Optimizer Parameters in PyTorch


With rapidly growing volumes of data and the strong performance of artificial neural networks, practitioners are now tackling complex problems that were previously out of reach. But wait, there is a catch: building a robust neural network architecture is not a trivial task.

Choosing the right optimizer to minimize the loss between the predictions and the ground truth is one of the crucial elements of designing neural networks. Loss minimization is typically performed on mini-batches of the training data, so the gradient estimates are noisy and the parameters can converge slowly. Good optimizers dampen the sideways movement in parameter space, which speeds up model convergence and, in turn, leads to faster deployment. This is why so much effort has gone into developing high-performance optimization algorithms.

This article explains how the Adam optimizer, one of the most commonly used optimizers, works and demonstrates how to tune it using the PyTorch framework.

The Adam Optimizer

Adaptive Moment Estimation, or Adam, is an optimization technique derived from the gradient descent algorithm. When working with large datasets and large neural network architectures, Adam often converges faster than stochastic gradient descent (SGD) or momentum-based algorithms. It combines the strengths of two earlier algorithms: gradient descent with momentum and RMSProp. Let us first understand these two optimizers to build a natural progression to the Adam optimizer.

Gradient Descent with Momentum

This algorithm accelerates convergence by using an exponentially weighted average of past gradients, in which the most recent steps carry the most weight, instead of the raw gradient at each step. Mathematically,

Image by Author

Note that a smaller α (the learning rate) means smaller steps toward the minimum, and a larger α means larger steps. The bias is updated in the same way as the weights.
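One common way to write these updates is sketched below. It assumes dW and db are the gradients of the loss with respect to the weights W and the bias b, v_{dW} and v_{db} are running averages initialized to zero, β is the momentum decay rate (often 0.9), and α is the learning rate.

```latex
\begin{aligned}
v_{dW} &= \beta \, v_{dW} + (1 - \beta) \, dW \\
W &= W - \alpha \, v_{dW} \\
v_{db} &= \beta \, v_{db} + (1 - \beta) \, db \\
b &= b - \alpha \, v_{db}
\end{aligned}
```

PyTorch's torch.optim.SGD with momentum uses a slightly different form, v = βv + dW, without the (1 − β) factor, so a learning rate tuned for one form does not carry over directly to the other.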

Momentum not only speeds up convergence but can also help the optimizer roll past shallow local minima.

The figure below illustrates that the blue path (gradient descent with momentum) oscillates less than the red path (plain gradient descent) and therefore converges faster toward the minimum.

Source: Gradient Descent Explained

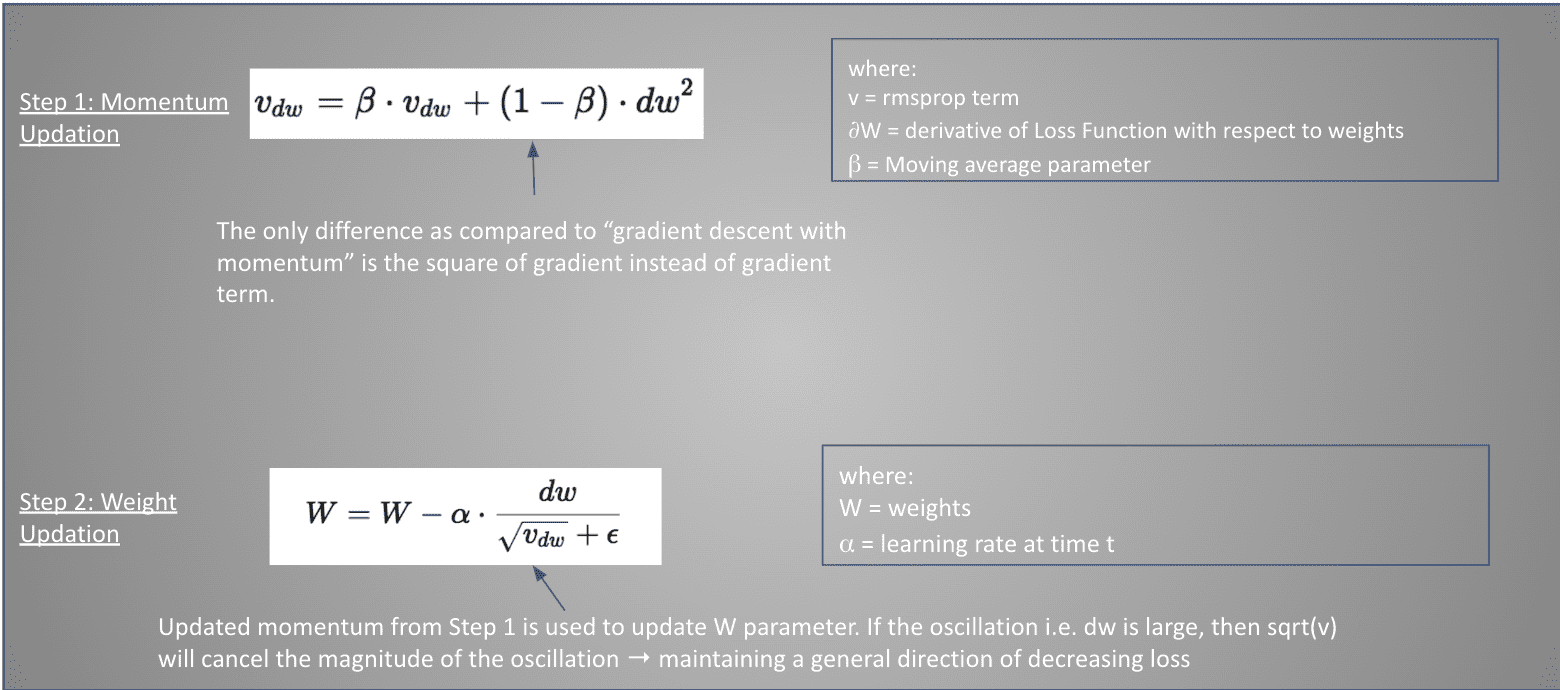

RMSProp

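Root Mean Square Propagation, or RMSProp, is an adaptive learning rate algorithm. It keeps an exponentially weighted average of the squared gradients and divides each update by the square root of that average, so the effective step size adapts to the gradient magnitude in each direction and oscillations along steep directions are damped. Unlike the momentum approach, it reduces the step size when the gradient grows.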

A small constant ε (epsilon) is added to the denominator to avoid division by zero.

Image by Author
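A common formulation of RMSProp is sketched below. It assumes dW and db are the gradients with respect to the weights W and the bias b, s_{dW} and s_{db} are running averages of the squared gradients (initialized to zero), squares and square roots are taken element-wise, β is the decay rate, α is the learning rate, and ε is the small constant mentioned above.

```latex
\begin{aligned}
s_{dW} &= \beta \, s_{dW} + (1 - \beta) \, dW^{2} \\
W &= W - \alpha \, \frac{dW}{\sqrt{s_{dW}} + \epsilon} \\
s_{db} &= \beta \, s_{db} + (1 - \beta) \, db^{2} \\
b &= b - \alpha \, \frac{db}{\sqrt{s_{db}} + \epsilon}
\end{aligned}
```

Directions with consistently large gradients get a large s and therefore a smaller effective step, which is what damps the oscillations.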

Adam combines gradient descent with momentum and RMSProp. It gets its speed from momentum and, from RMSProp, the ability to adapt the step size separately in different directions. The combination of the two makes it powerful: in the figure below, Adam (yellow) converges faster than momentum (green) and RMSProp (purple).

Source: Paperspace blog
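The combination can be written out in a few lines. The sketch below implements Adam's update by hand, a momentum-style first moment plus an RMSProp-style second moment with the bias-correction step that compensates for starting both at zero, and checks it against torch.optim.Adam. The random tensors are stand-ins for real loss gradients, and the learning rate of 1e-2 is an arbitrary illustrative choice.

```python
import torch

torch.manual_seed(0)
lr, (beta1, beta2), eps = 1e-2, (0.9, 0.999), 1e-8
start = torch.randn(4, dtype=torch.float64)
grads = [torch.randn(4, dtype=torch.float64) for _ in range(5)]  # stand-in gradients

# Reference: PyTorch's built-in Adam, fed the same gradients step by step.
w = start.clone().requires_grad_(True)
opt = torch.optim.Adam([w], lr=lr, betas=(beta1, beta2), eps=eps)
for g in grads:
    w.grad = g.clone()
    opt.step()

# Hand-written Adam update.
p = start.clone()
m = torch.zeros_like(p)  # first-moment estimate (momentum term)
v = torch.zeros_like(p)  # second-moment estimate (RMSProp term)
for t, g in enumerate(grads, start=1):
    m = beta1 * m + (1 - beta1) * g        # like gradient descent with momentum
    v = beta2 * v + (1 - beta2) * g * g    # like RMSProp
    m_hat = m / (1 - beta1 ** t)           # bias correction for the zero start
    v_hat = v / (1 - beta2 ** t)
    p = p - lr * m_hat / (v_hat.sqrt() + eps)

print(torch.allclose(w.detach(), p))
```

The final check compares the two parameter vectors, so it should report that the hand-written update and the built-in optimizer agree.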

Now that you understand how the Adam optimizer works, let’s dive into tuning Adam’s hyperparameters using PyTorch.

Tuning Adam Optimizer in PyTorch

When training with Adam, there are three main settings to tune: the learning rate α, the decay rates β1 and β2 of the momentum and RMSProp terms, and learning rate decay. Let us understand each one of them and discuss their impact on the convergence of the loss function.

Learning Rate (α or lr)

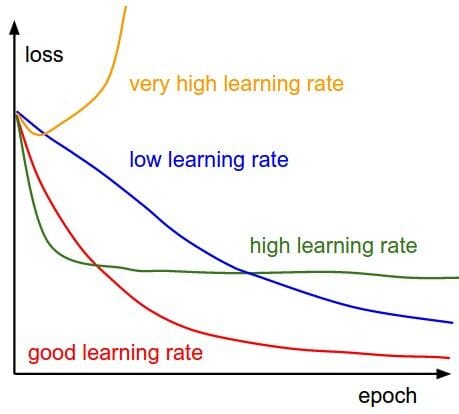

The learning rate, also referred to as the step size, controls how far the parameters move on each update: it is the factor that scales the gradient (or the momentum/velocity term, depending on the optimization algorithm) to produce the parameter update.

Source: CS231n Convolutional Neural Networks for Visual Recognition

A small learning rate means slower model training: the optimizer needs many updates to reach the minimum. A large learning rate means large, drastic updates to the parameters, which can make the loss oscillate or diverge instead of converging.

A well-chosen learning rate moves the parameters far enough on each step to make steady progress without overshooting the minimum. PyTorch’s default learning rate for Adam is 0.001, and values between 0.0001 and 0.01 are a common starting range in most cases.

Source: Setting the learning rate of your neural network
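To see the trade-off in practice, the sketch below trains a toy linear-regression model with Adam at several learning rates and reports the loss on the last training step. The synthetic data, the made-up true weights, the step count, and the helper name final_loss are all illustrative assumptions; this is not a benchmark, and a real model needs its own search.

```python
import torch

def final_loss(lr, steps=500, seed=0):
    """Train a toy linear model with Adam and return the loss on the last step."""
    torch.manual_seed(seed)  # same data and initial weights for every lr
    x = torch.randn(256, 3)
    true_w = torch.tensor([[2.0], [-1.0], [0.5]])  # hypothetical ground truth
    y = x @ true_w + 0.1 * torch.randn(256, 1)

    model = torch.nn.Linear(3, 1)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = torch.nn.MSELoss()
    for _ in range(steps):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
    return loss.item()

results = {lr: final_loss(lr) for lr in (1e-4, 1e-3, 1e-2, 1e-1)}
for lr, loss in results.items():
    print(f"lr={lr:g}  final loss={loss:.4f}")
```

Because Adam’s per-step change to each weight is roughly on the order of lr, the smallest rate barely moves the weights away from their initialization within 500 steps.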

Betas

β1 is the exponential decay rate for the momentum term, also called the first-moment estimate. Its default value in PyTorch is 0.9. The impact of different beta values on the momentum value is displayed in the image below.

Source: Stochastic Gradient Descent with momentum
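To make β1 concrete, the sketch below applies the first-moment update m = β1·m + (1 − β1)·g to two made-up gradient sequences: one that zig-zags between +1 and −1 (like sideways oscillation) and one that always points the same way. It deliberately omits Adam’s bias correction, and the helper name ewma is hypothetical.

```python
def ewma(grads, beta):
    """Exponentially weighted moving average, as in Adam's first moment (no bias correction)."""
    m = 0.0
    for g in grads:
        m = beta * m + (1 - beta) * g
    return m

steps = 200
oscillating = [(-1.0) ** k for k in range(steps)]  # +1, -1, +1, ... (sideways zig-zag)
consistent = [1.0] * steps                          # always the same direction

for beta in (0.0, 0.5, 0.9, 0.99):
    print(f"beta1={beta}: oscillating -> {ewma(oscillating, beta):+.4f}, "
          f"consistent -> {ewma(consistent, beta):.4f}")
```

The zig-zag component shrinks toward (1 − β1)/(1 + β1) in magnitude, about 0.05 at β1 = 0.9, while the consistent component is preserved. With β1 = 0.99, the consistent case has only reached about 0.87 after 200 steps; that start-up bias is what Adam’s bias correction removes.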

β2 is the exponential decay rate for the velocity term, also called the second-moment estimate. Its default value in PyTorch is 0.999. For problems with sparse gradients, the original Adam paper recommends keeping β2 close to 1.0, because averaging over many steps gives a more reliable estimate of each parameter’s gradient magnitude when most individual gradients are zero or tiny. Image-related problems are one example: large regions of high-resolution images change little in pixel intensity and color, which can produce tiny gradients (and even smaller squared gradients) and slow convergence. A high weight on the accumulated velocity history keeps the step size stable, so the optimizer keeps moving in the general direction that minimizes the loss.
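Adam divides the momentum term by the square root of the second-moment estimate, which makes the step size roughly independent of the gradient’s scale. The sketch below uses a hypothetical helper, adam_step_sizes, for a single scalar parameter with PyTorch’s default β1, β2, and ε, and shows that a consistently tiny gradient and a consistently large one both produce steps of about lr. β2 sets how long that second-moment memory is: roughly 1/(1 − β2) steps, or about 1000 steps at the default 0.999.

```python
import math

def adam_step_sizes(grads, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return the size of each Adam update for a single scalar parameter."""
    m = v = 0.0
    sizes = []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g        # first moment (momentum)
        v = beta2 * v + (1 - beta2) * g * g    # second moment (velocity)
        m_hat = m / (1 - beta1 ** t)           # bias-corrected estimates
        v_hat = v / (1 - beta2 ** t)
        sizes.append(lr * m_hat / (math.sqrt(v_hat) + eps))
    return sizes

tiny = adam_step_sizes([1e-4] * 50)   # consistently tiny gradient
large = adam_step_sizes([10.0] * 50)  # consistently large gradient
print(f"tiny gradient step:  {tiny[-1]:.6f}")
print(f"large gradient step: {large[-1]:.6f}")
```

The gradients differ by a factor of 100,000, yet the resulting steps are nearly identical; ε only matters once the gradient itself approaches its size.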

Learning Rate Decay

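Learning rate decay reduces α as training progresses and the parameters approach a good solution. Taking smaller steps near the minimum helps avoid overshooting it, which often lets the loss settle faster. In PyTorch, learning rate decay is not an argument of torch.optim.Adam; it is applied with a scheduler from torch.optim.lr_scheduler, such as StepLR or ExponentialLR. Adam’s weight_decay argument (default 0) is a different mechanism: it adds an L2 penalty on the parameter values to the gradient, which regularizes the model rather than shrinking the learning rate.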
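The sketch below shows the two mechanisms side by side on a placeholder model and random placeholder data: an ExponentialLR scheduler that multiplies the learning rate by gamma after each epoch, and Adam’s separate weight_decay argument. The values gamma=0.9 and weight_decay=1e-4 are illustrative assumptions, not recommendations.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(4, 1)
# weight_decay adds weight_decay * param to each gradient (L2 penalty); it does not change lr.
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=1e-4)
# Learning rate decay: lr is multiplied by gamma every time scheduler.step() is called.
scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

x, y = torch.randn(32, 4), torch.randn(32, 1)  # placeholder data
lrs = []
for epoch in range(5):
    optimizer.zero_grad()
    loss = torch.nn.functional.mse_loss(model(x), y)
    loss.backward()
    optimizer.step()
    scheduler.step()  # call after optimizer.step(), typically once per epoch
    lrs.append(scheduler.get_last_lr()[0])

print([f"{lr:.2e}" for lr in lrs])
```

After five epochs the learning rate has decayed to 0.001 × 0.9⁵. PyTorch also provides torch.optim.AdamW, which applies weight decay directly to the parameters instead of through the gradient.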

Epsilon

Epsilon is rarely tuned. It is a very small constant added to the denominator of the update to avoid division by zero. The default value is 1e-08.

The PyTorch code below initializes the optimizer with each of these hyperparameters set explicitly to its default value.

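```python
import torch  # params: an iterable of parameters, e.g. model.parameters()
optimizer = torch.optim.Adam(params, lr=0.001, betas=(0.9, 0.999), eps=1e-08, weight_decay=0)
```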

The remaining arguments, such as maximize and amsgrad, are described in the official PyTorch documentation.
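As a sketch of how such extra options are passed, the hypothetical two-layer model below uses PyTorch’s per-parameter-group options to give its first layer a smaller learning rate, and enables amsgrad, a variant that uses the running maximum of the second-moment estimate. The layer sizes and learning rates are illustrative assumptions.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Sequential(  # small placeholder model
    torch.nn.Linear(10, 32),
    torch.nn.ReLU(),
    torch.nn.Linear(32, 1),
)

# Per-parameter-group options: the first layer overrides lr,
# the last layer inherits the defaults passed after the list.
optimizer = torch.optim.Adam(
    [
        {"params": model[0].parameters(), "lr": 1e-4},
        {"params": model[2].parameters()},
    ],
    lr=1e-3,
    betas=(0.9, 0.999),
    amsgrad=True,  # AMSGrad: keep the running max of the second moment
)

# One training step on placeholder data to confirm the setup runs.
x = torch.randn(8, 10)
optimizer.zero_grad()
loss = model(x).pow(2).mean()
loss.backward()
optimizer.step()

for i, group in enumerate(optimizer.param_groups):
    print(i, group["lr"], group["betas"], group["amsgrad"])
```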

Summary

In this article, you learned why optimizers matter, how gradient descent with momentum and RMSProp work, and how Adam combines the strengths of both, which has made it one of the most widely used optimizers. The post then walked through the internal workings of the Adam optimizer and explained its main tunable hyperparameters and their impact on the speed of convergence.

Vidhi Chugh is an AI strategist and a digital transformation leader working at the intersection of product, sciences, and engineering to build scalable machine learning systems. She is an award-winning innovation leader, an author, and an international speaker. She is on a mission to democratize machine learning and break the jargon for everyone to be a part of this transformation.