Today, every company should be using Generative AI capabilities to complement machine learning techniques, streamline operations, and ultimately work smarter. Chat-based use cases will quickly become the new normal. That means growing demand from the business to develop and deploy these use cases enterprise-wide.

However, many IT organizations have restricted or banned free-to-use applications like ChatGPT in the enterprise. And rightfully so — transparency, security, and reliability are real concerns, and mitigating those risks at scale is a challenge.

Even if employees have access to mainstream large language models (LLMs), these base models cannot access an organization's proprietary data. Ultimately, this severely limits enterprise value for ChatGPT-like applications.

Scaling AI With RAG

The good news is that retrieval-augmented generation (RAG) has emerged as a game changer for leveraging proprietary data. RAG is an information retrieval technique that allows LLMs to generate context-aware responses. It does this by retrieving relevant information (e.g., your company’s data, research or news documents, domain-specific knowledge) before crafting a response to user queries. One example is an LLM-based chat application that answers insurance policy questions based on your corpus of policies.
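The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how Dataiku Answers is implemented: the `POLICIES` corpus, the word-overlap similarity, and the helper names `retrieve` and `build_prompt` are all hypothetical, and a production system would typically use an embedding model and a vector store instead of word counts.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus standing in for a company's policy documents.
POLICIES = [
    "Home policy H-1 covers water damage from burst pipes up to 10,000 USD.",
    "Auto policy A-7 covers collision repairs after a 500 USD deductible.",
    "Travel policy T-3 covers trip cancellation due to illness.",
]

def tokenize(text):
    """Turn text into a bag of lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two bags of words."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Place the retrieved passages in front of the question for the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

question = "Does my policy cover water damage from burst pipes?"
top = retrieve(question, POLICIES, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The resulting `prompt` is what would be sent to the LLM, so the model answers from the retrieved policy text instead of from its general training data.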

Once you understand the role of RAG for Generative AI in the enterprise, it’s easy to imagine the use cases. Different departments may use multiple RAG-based chat applications to improve efficiency in various tasks.

So the questions then become: How can you build tens — or hundreds — of these applications at scale? How can Generative AI or data teams address the accelerated demands for chat interfaces with a scalable framework? How can they ensure that building and maintaining these interfaces doesn’t consume too many resources?

Dataiku Answers for Smart & Responsible Innovation

Dataiku Answers is a packaged, scalable web application. It democratizes enterprise-ready LLM chat and RAG usage across business processes and teams. With Dataiku Answers, data teams can build Generative AI-powered chat applications using RAG at enterprise scale that are:

Simple & Scalable

With Generative AI evolving quickly, data teams should stay flexible with their model and service choices. Applications that are "hardwired" to the underlying LLM create dependencies that can be difficult and expensive to remove once the application is deployed in the organization.

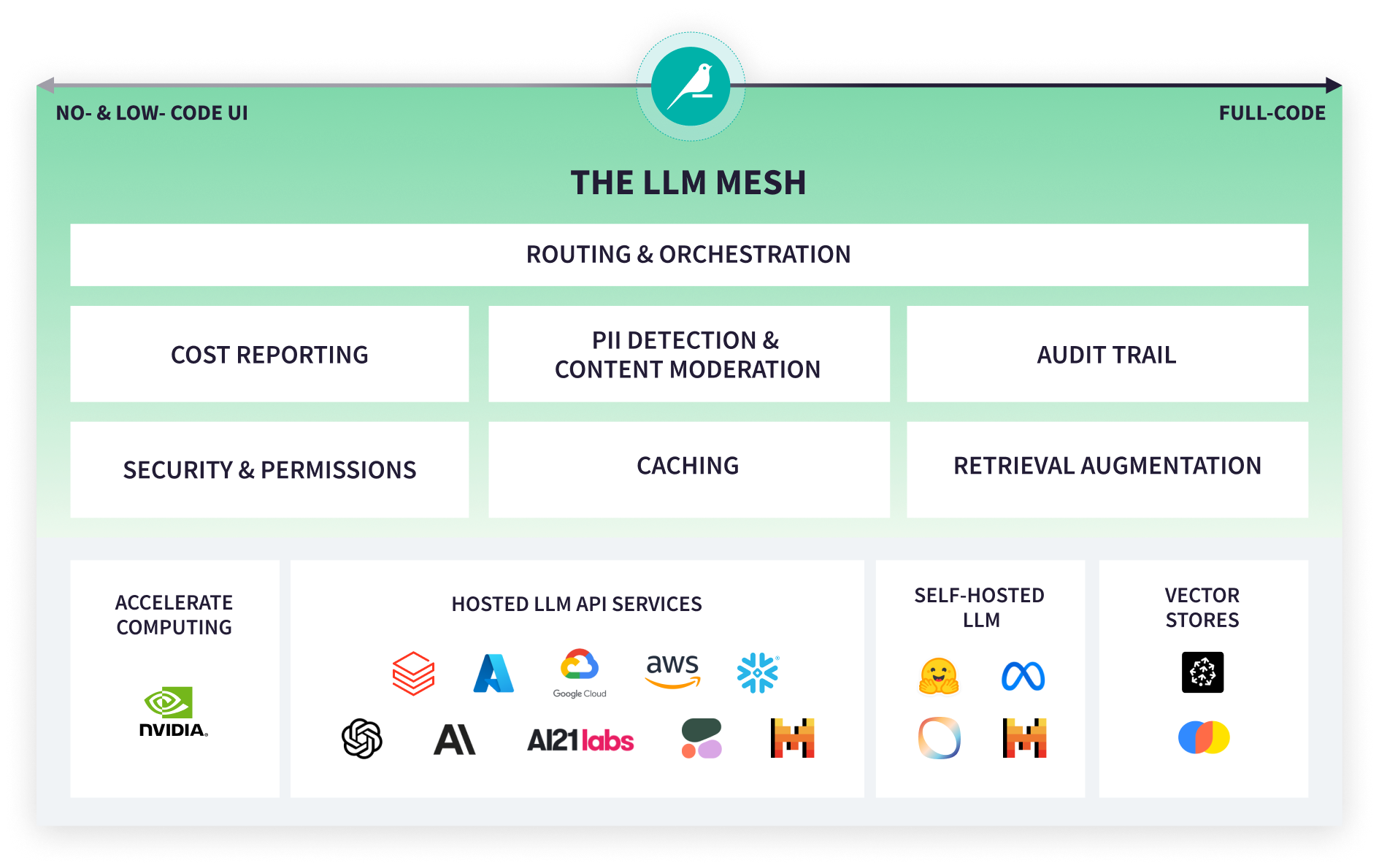

The Dataiku LLM Mesh is a common backbone for Generative AI applications. It is designed to address precisely this challenge, reshaping how analytics and IT teams securely access Generative AI models and services.

Thanks to the LLM Mesh, you can connect Dataiku Answers to your choice of LLM, vector store/database, and knowledge bank in a few clicks. You can also quickly adjust technology choices as requirements change or new technologies arise.
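To make the "hardwired" problem concrete, here is a minimal sketch of the general idea of routing all model calls through one abstraction layer. It is not the LLM Mesh API: `ModelRouter`, `vendor_a_complete`, and `vendor_b_complete` are hypothetical names, and the two backends are stubs that only echo the prompt.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for real model clients: each takes a prompt, returns text.
def vendor_a_complete(prompt: str) -> str:
    return f"[vendor-a] {prompt}"

def vendor_b_complete(prompt: str) -> str:
    return f"[vendor-b] {prompt}"

class ModelRouter:
    """Single entry point the application calls instead of a specific vendor."""

    def __init__(self) -> None:
        self._backends: Dict[str, Callable[[str], str]] = {}
        self._active: str = ""

    def register(self, name: str, backend: Callable[[str], str]) -> None:
        self._backends[name] = backend

    def use(self, name: str) -> None:
        if name not in self._backends:
            raise KeyError(f"unknown backend: {name}")
        self._active = name

    def complete(self, prompt: str) -> str:
        if not self._active:
            raise RuntimeError("no backend selected")
        return self._backends[self._active](prompt)

router = ModelRouter()
router.register("vendor-a", vendor_a_complete)
router.register("vendor-b", vendor_b_complete)

router.use("vendor-a")
first = router.complete("Summarize the contract.")

router.use("vendor-b")  # swapping models is a configuration change, not a rewrite
second = router.complete("Summarize the contract.")
```

Because the application only ever calls `router.complete`, replacing the underlying model does not touch application code.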

Customizable

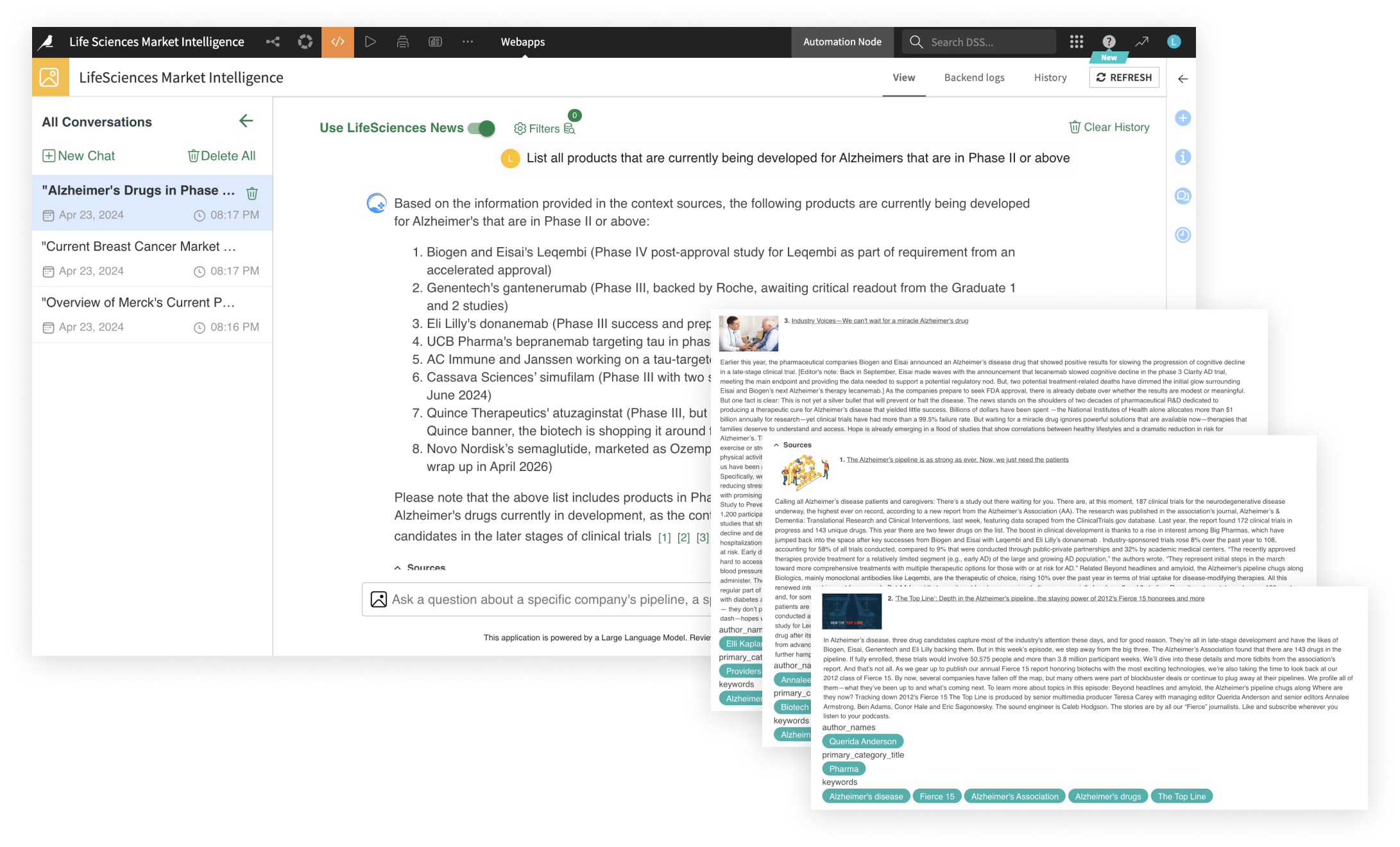

Dataiku Answers is fully customizable. Teams can set up detailed prompts, parameters, and metadata for a result aligned with each business objective.

For example, you can configure options for positive and negative feedback, enabling end users to interact and rate their experience. You can also customize Dataiku Answers by entering detailed prompts. For instance, if the LLM is to function as a life sciences analyst, the prompt can instruct the chatbot to base its response only on substantiated facts from the provided knowledge bank, to present the cited research in clear chronological order, to use bullet points where they aid clarity, and to use language aligned with the field of life sciences.

“Data leaders have been asking how they can deploy RAG-powered chatbots more easily throughout their organizations. Dataiku Answers already addresses this need for more than 20 global retailers, manufacturers, and other organizations. In a matter of a few weeks, we have seen them meet a variety of needs from opening a company-wide LLM chat to their corporate documents down to creating domain-specific chatbots for investor relations, procurement, sales, and other teams.”

— Sophie Dionnet, Global VP, Product and Business Solutions, at Dataiku

Transparent & Governed

Dataiku Answers embeds quotes from its sources in answers, so users can be more confident that responses are accurate. Each response item comes with simplified access to its sources and metadata, for maximum trust and explainability. It is also fully governed, with the ability to monitor all conversations and user feedback.

Because Dataiku Answers is powered by the LLM Mesh, it leverages dedicated components for AI service routing, personally identifiable information (PII) screening, LLM response moderation, as well as performance and cost tracking. The Dataiku LLM Mesh also provides caching capabilities, delivering cost-effective and consistent answers to repeated questions for improved experience and impact.
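As a rough illustration of two of the components named above, the sketch below screens a question for one kind of PII and then serves repeated questions from a cache. Everything here is an assumption made for illustration: `screen_pii`, `CachedModel`, and `fake_backend` are hypothetical names, the email regex is deliberately simplistic, and none of this reflects how the LLM Mesh implements these features.

```python
import re
from typing import Callable, Dict

# Deliberately simple email pattern; real PII screening covers far more cases.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def screen_pii(text: str) -> str:
    """Mask email addresses before the text reaches the model."""
    return EMAIL.sub("[EMAIL]", text)

class CachedModel:
    """Wrap a model call so repeated questions reuse the stored answer."""

    def __init__(self, backend: Callable[[str], str]) -> None:
        self._backend = backend
        self._cache: Dict[str, str] = {}
        self.backend_calls = 0  # how many times the backend was actually invoked

    def ask(self, question: str) -> str:
        safe = screen_pii(question)
        # Normalize case and whitespace so trivially different phrasings match.
        key = " ".join(safe.lower().split())
        if key not in self._cache:
            self.backend_calls += 1
            self._cache[key] = self._backend(safe)
        return self._cache[key]

def fake_backend(prompt: str) -> str:
    """Hypothetical stand-in for a paid LLM call."""
    return f"answer to: {prompt}"

model = CachedModel(fake_backend)
a = model.ask("What is our refund policy?")
b = model.ask("what is our  refund policy?")  # served from the cache
c = model.ask("Email jane.doe@example.com about refunds")
```

The second question never reaches the backend, which is where the cost saving and the consistency of repeated answers come from.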

Dataiku Answers also offers full segregation of access, so different departments can use different augmented models. For example, the claims department can have access to a model that "sees" in-depth customer policy docs, while legal has access to a model whose knowledge bank contains vendor contracts and terms. The LLM Mesh keeps the two distinct and private for "need-to-know" security.
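The claims and legal example can be pictured as a per-department allow-list that is checked before any document is retrieved. The sketch below is hypothetical: the `KNOWLEDGE_BANKS` contents, the `ACCESS` mapping, and `documents_for` are invented for illustration and do not represent Dataiku's permission model.

```python
# Hypothetical knowledge banks, each holding one department's documents.
KNOWLEDGE_BANKS = {
    "claims_policies": ["Customer policy P-100: flood cover details"],
    "legal_contracts": ["Vendor contract V-9: payment terms"],
}

# Hypothetical allow-list: which department may query which knowledge bank.
ACCESS = {
    "claims": {"claims_policies"},
    "legal": {"legal_contracts"},
}

def documents_for(department: str, bank: str) -> list:
    """Return a bank's documents only if the department is allowed to see it."""
    if bank not in ACCESS.get(department, set()):
        raise PermissionError(f"{department} may not read {bank}")
    return KNOWLEDGE_BANKS[bank]

claims_docs = documents_for("claims", "claims_policies")

try:
    documents_for("claims", "legal_contracts")
    blocked = False
except PermissionError:
    blocked = True  # claims cannot reach legal's knowledge bank
```

Checking access before retrieval means a restricted document never enters the prompt, so the model cannot leak it in an answer.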

Bottom line: IT remains in control of LLM use. They have full visibility into how teams across the organization are using and optimizing Generative AI. And business teams get answers from their specific content, for maximized impact, powered by a common, scalable backbone.

“I’m looking forward to the LLMs. That’s where the innovation comes into the picture. The LLM Mesh will allow us to innovate in an agnostic way, which is of essence when it comes to data security and compliance.”

— Analytics & Data Science Product Owner, Global Pharmaceutical Company | Forrester: The Total Economic Impact™ Of Dataiku Study

Real-Life Dataiku Answers Use Cases

Large organizations worldwide are already using Dataiku Answers to provide accurate answers for a range of use cases:

- A procurement team uses Dataiku Answers to have a trusted, natural language, question-and-answer approach to all contracts.

- An investor relations team uses Dataiku Answers to speed up call analysis and improve executive readiness for analyst relationship management.

- A Tier 1 retailer is deploying Dataiku Answers for 20,000+ users as both an LLM Chat and internal documentation AI assistant.

- A global pharma company is rolling out Dataiku Answers to all its field sales teams to facilitate understanding of sales processes and programmatic activities for each and every marketed drug.

Plus, hundreds of other companies are using Dataiku to put real Generative AI use cases into production:

- See how Doosan used Dataiku to develop its first transformative Generative AI project in less than one month.

- Fast food chain Whataburger uses LLMs in Dataiku to extract key information from the reviews and build a high-visibility dashboard.

- Ørsted uses Dataiku and Generative AI to ensure its executive management has a more aligned understanding of market dynamics, for a 100% time savings over a manual approach.

- Heraeus uses LLMs in Dataiku to support its lead identification and qualification process, ultimately saving time and increasing sales conversion.

- With the help of Generative AI and Dataiku, LG Chem provides an AI service that helps employees find safety regulation information quickly and accurately.

Why Choose Dataiku for Your Enterprise Chat Needs?

According to Deloitte, a whopping 79% of business and technology leaders expect Generative AI to drive substantial transformation within their organization and industry over the next three years. However, the same study finds that fewer than one in four organizations are building differentiated AI. The vast majority are relying on standard, off-the-shelf AI solutions that increase costs without setting them apart.

Today, companies put a lot of time and effort (not to mention budget) into managing AI infrastructure, including data management, storage, and GPUs. However, in the future, AI infrastructure will likely be largely standardized.

That means the true differentiator for companies will be their investment in human capital and in fostering transformative change with AI.

At Dataiku, we're all about this people-first approach to AI. We believe that the keys to long-term success lie in enhancing productivity, fostering enablement, and ensuring robust governance.

Dataiku is the universal AI platform where you can build, operationalize, and manage differentiated AI. With Dataiku, you have the flexibility to:

- Connect to existing infrastructure. Dataiku integrates with the technology of today and tomorrow so you can experiment and switch underlying architectures with ease.

- Support everyone in working with data. From data experts to domain experts, Dataiku meets people where they are with no-code, low-code, and full-code features.

- Underpin all processes with governance and the right level of oversight required to leverage AI at scale and make informed decisions about risks and resources.