Using Empirical Bayes to approximate posteriors for large "black box" estimators

The Unofficial Google Data Science Blog

NOVEMBER 4, 2015

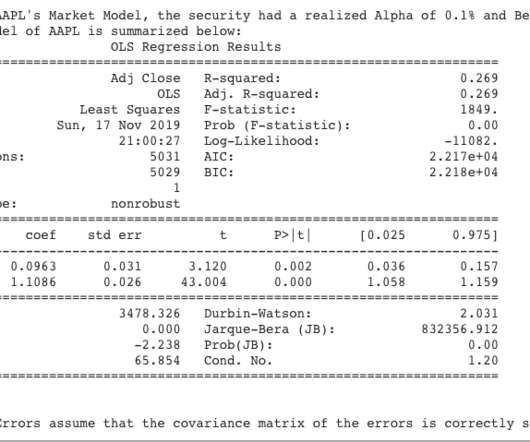

Posteriors are useful for understanding the system, measuring accuracy, and making better decisions. Methods like the Poisson bootstrap can help us measure the variability of $t$, but they don't give us posteriors, particularly since good high-dimensional estimators aren't unbiased. Figure 4 shows the results of such a test.
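To make the Poisson bootstrap concrete, here is a minimal sketch in Python. It is not the implementation used in the post: the function names (`poisson_bootstrap`, `weighted_mean`), the synthetic data, and the choice of a weighted mean as a stand-in for the black-box estimator $t$ are all assumptions made for illustration. Each replicate reweights every observation by an independent Poisson(1) count, which approximates resampling with replacement without materializing a resampled data set.

```python
import numpy as np


def poisson_bootstrap(data, estimator, n_replicates=200, seed=0):
    """Return bootstrap replicates of `estimator` under Poisson(1) reweighting.

    `estimator` is a hypothetical callable taking (data, weights) and
    returning a scalar; it stands in for the black-box estimator t.
    """
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    replicates = np.empty(n_replicates)
    for b in range(n_replicates):
        # One independent Poisson(1) weight per observation.
        weights = rng.poisson(1.0, size=data.shape[0])
        replicates[b] = estimator(data, weights)
    return replicates


def weighted_mean(data, weights):
    """Toy estimator (an assumption): the weighted sample mean."""
    total = weights.sum()
    if total == 0:
        # Every weight was zero, so this replicate is undefined.
        return np.nan
    return float(np.dot(weights, data) / total)


# Synthetic data, for illustration only.
rng = np.random.default_rng(42)
sample = rng.normal(loc=1.0, scale=2.0, size=1000)

reps = poisson_bootstrap(sample, weighted_mean)
# Spread of the replicates estimates the sampling variability of t.
bootstrap_se = float(np.nanstd(reps, ddof=1))
```

The spread of `reps` describes how much $t$ would vary across repeated samples. It is a sampling distribution for the estimator, not a posterior for the underlying quantity, and it says nothing about any bias in $t$.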
