The Lean Analytics Cycle: Metrics > Hypothesis > Experiment > Act

Occam's Razor

APRIL 8, 2013

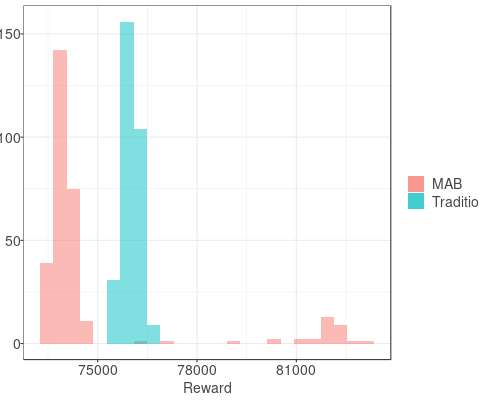

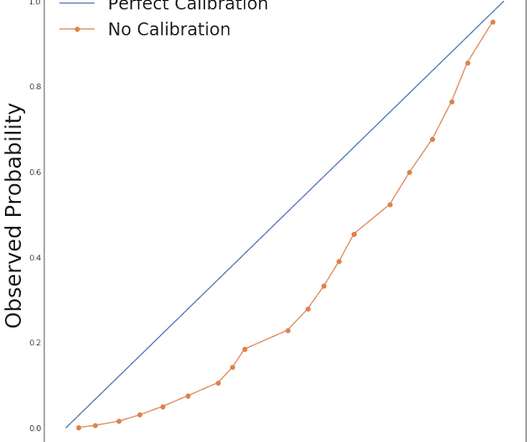

Sometimes we escape the clutches of this suboptimal existence and do pick good metrics, or run a simple A/B test on a new feature. Identify, hypothesize, test, react. But it has to be a real test of an actual feature. You don’t need a beautiful beast to go out and test.
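To make the "test, react" half of that loop concrete, here is a minimal sketch of how a simple A/B test on a conversion metric might be evaluated. It assumes a two-sided two-proportion z-test, which is one common choice and not something this post prescribes. The function name `ab_test`, its parameters, and the 0.05 threshold are all illustrative assumptions.

```python
import math


def ab_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Compare conversion rates of control (A) and variant (B).

    Hypothetical helper: uses a pooled two-proportion z-test.
    conv_* are conversion counts, n_* are visitor counts.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b

    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")

    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))

    return {
        "lift": p_b - p_a,
        "z": z,
        "p_value": p_value,
        "significant": p_value < alpha,
    }


# Made-up numbers for illustration: 1,000 visitors per arm.
result = ab_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
print(result)
```

The "react" step is then a decision on the result: ship the feature if the lift is significant and in the right direction, otherwise go back and form a new hypothesis.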
