The trinity of errors in financial models: An introductory analysis using TensorFlow Probability

O'Reilly on Data

JANUARY 22, 2019

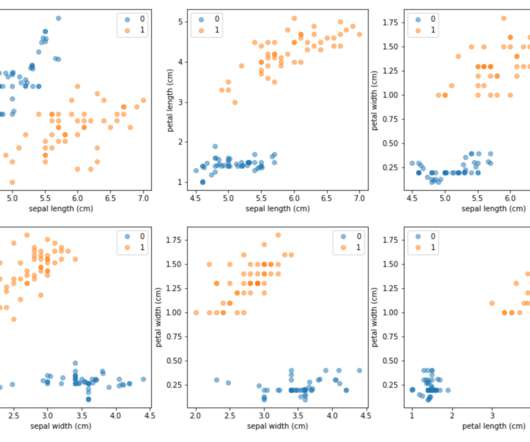

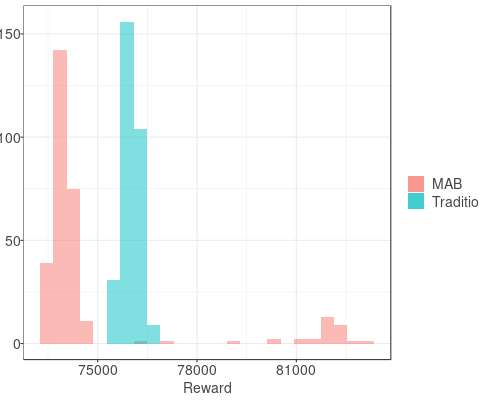

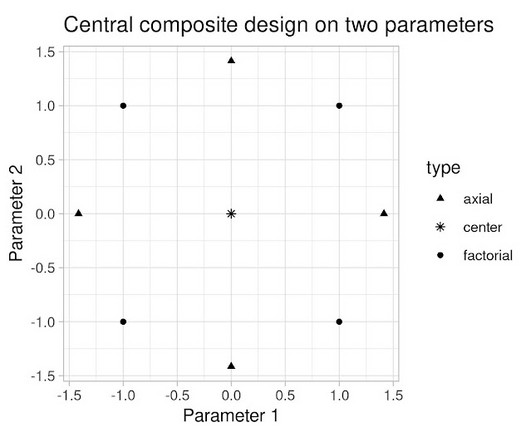

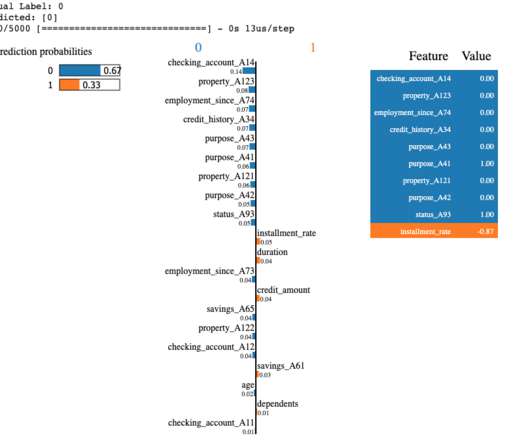

In this blog post, we explore three types of errors inherent in all financial models, with a simple example of a model in TensorFlow Probability (TFP).

They trade the markets using quantitative models based on non-financial theories such as information theory, data science, and machine learning.

Finance is not physics.
