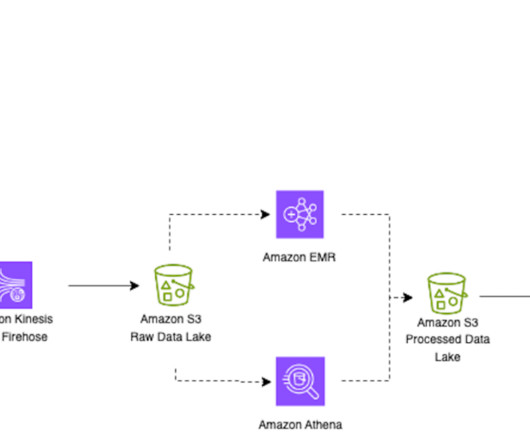

Use Apache Iceberg in a data lake to support incremental data processing

AWS Big Data

MARCH 2, 2023

Apache Iceberg is an open table format for very large analytic datasets, which captures metadata information on the state of datasets as they evolve and change over time. Iceberg has become very popular for its support for ACID transactions in data lakes and features like schema and partition evolution, time travel, and rollback.

Let's personalize your content