Automate schema evolution at scale with Apache Hudi in AWS Glue

AWS Big Data

FEBRUARY 7, 2023

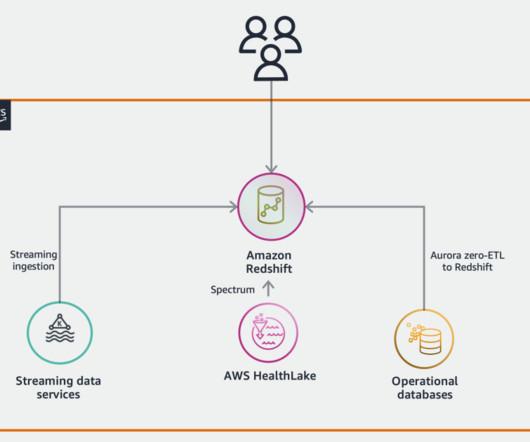

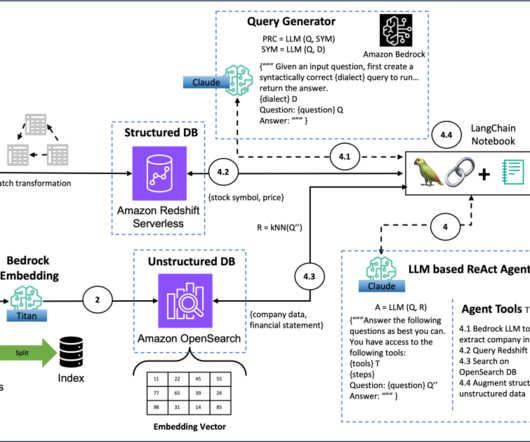

In the data analytics space, organizations often deal with many tables in different databases and file formats to hold data for different business functions. Apache Hudi supports ACID transactions and CRUD operations on a data lake. You don’t alter queries separately in the data lake.
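To make the CRUD support concrete, here is a minimal sketch of how a Spark job might assemble Hudi write options for an upsert or delete. The helper `hudi_write_options`, the table name `orders`, and the columns `order_id` and `updated_at` are hypothetical and do not come from the original post. The `hoodie.*` keys are standard Hudi datasource write configurations, but check them against the Hudi version bundled with your Glue release.

```python
# Illustrative helper (hypothetical name): builds the option map a Spark
# DataFrame writer would pass to the Hudi datasource.
ALLOWED_OPERATIONS = {"insert", "upsert", "delete", "bulk_insert"}


def hudi_write_options(table_name, record_key, precombine_field, operation="upsert"):
    """Return Hudi datasource write options for the given operation."""
    if operation not in ALLOWED_OPERATIONS:
        raise ValueError(f"unsupported Hudi write operation: {operation}")
    return {
        # Name of the Hudi table being written.
        "hoodie.table.name": table_name,
        # Column that uniquely identifies a record (used to match updates/deletes).
        "hoodie.datasource.write.recordkey.field": record_key,
        # Column used to pick the latest version when duplicate keys arrive.
        "hoodie.datasource.write.precombine.field": precombine_field,
        # The write operation: insert, upsert, delete, or bulk_insert.
        "hoodie.datasource.write.operation": operation,
    }


# Assumed example values; replace with your own table and columns.
upsert_opts = hudi_write_options("orders", "order_id", "updated_at")
delete_opts = hudi_write_options("orders", "order_id", "updated_at", operation="delete")

# In a Glue or Spark job with the Hudi libraries available, the write itself
# would typically look like this (the path is a placeholder):
# df.write.format("hudi").options(**upsert_opts).mode("append").save("s3://<bucket>/<prefix>/")
```

Keeping the options in one helper means the same job can switch between upserts and deletes by changing a single argument, while the record key and precombine field stay consistent across writes.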
