Top Data Lakes Interview Questions

Analytics Vidhya

OCTOBER 17, 2022

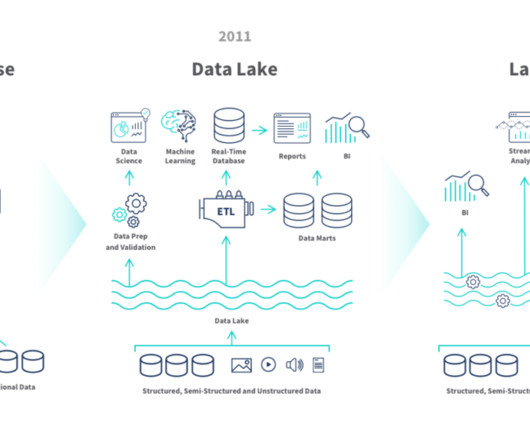

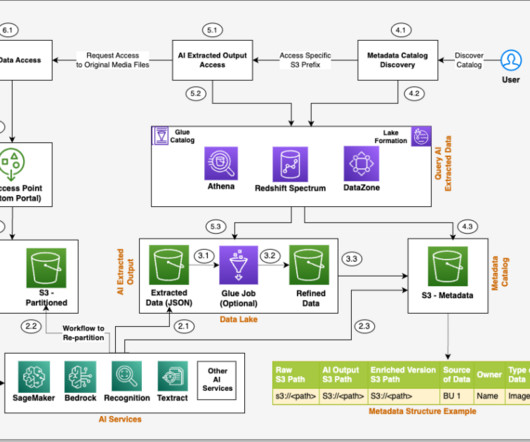

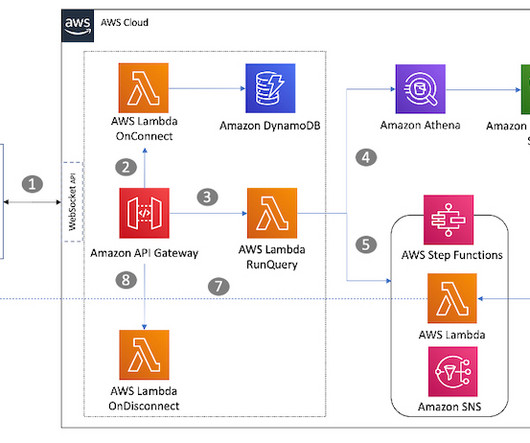

This article was published as a part of the Data Science Blogathon.

Introduction

A data lake is a centralized repository for storing, processing, and securing massive amounts of structured, semi-structured, and unstructured data. Data lakes are an important […].
