How does a data catalog work?

Alation

FEBRUARY 27, 2020

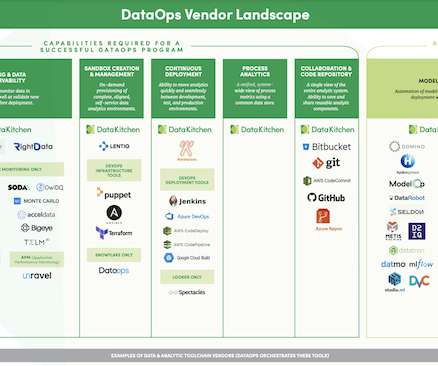

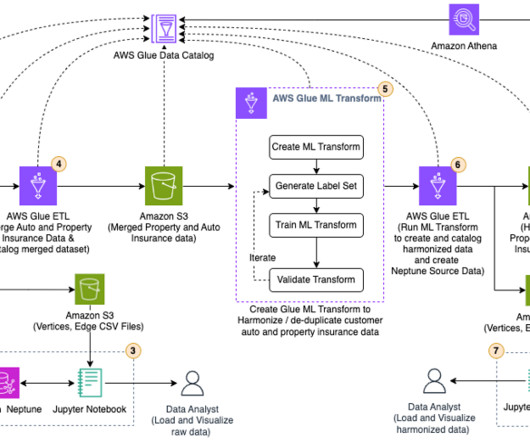

The architectures of the past for BI and analytics (the Corporate Information Factory or the Bus Architecture) are now only one part of a complete analytical environment. Figure 1 gives you a good idea of […].

The post "How does a data catalog work?" appeared first on Alation.
