Introducing the AWS ProServe Hadoop Migration Delivery Kit TCO tool

AWS Big Data

FEBRUARY 6, 2023

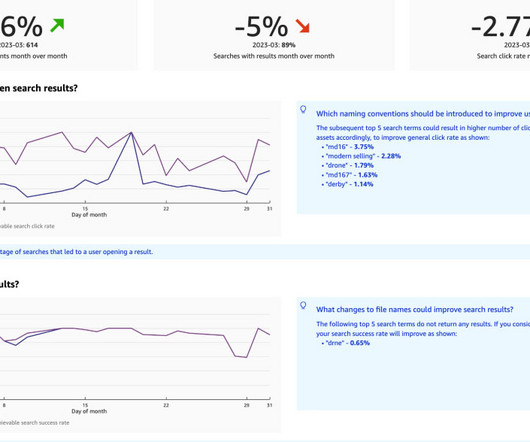

To solve this, we’re introducing the Hadoop migration assessment Total Cost of Ownership (TCO) tool. The self-serve Hadoop Migration Delivery Kit (HMDK) TCO tool accelerates the design of new, cost-effective Amazon EMR clusters by analyzing the existing Hadoop workload and calculating the TCO of running that workload on the future Amazon EMR system.
