Business Strategies for Deploying Disruptive Tech: Generative AI and ChatGPT

Rocket-Powered Data Science

FEBRUARY 15, 2023

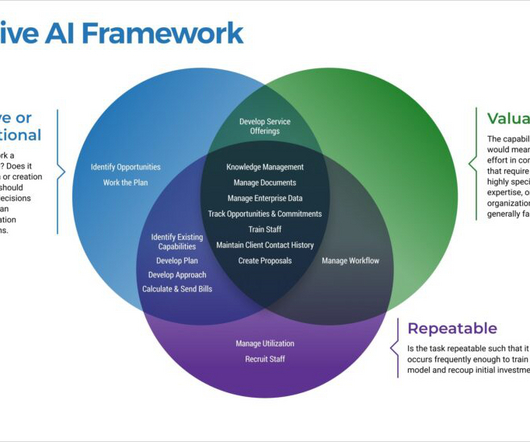

Third, any commitment to a disruptive technology (including data-intensive and AI implementations) must start with a business strategy. That strategy must also account for three F's: Fragility, Friction, and FUD (Fear, Uncertainty, Doubt). Fragility arises when changes break a deployed system. These changes may include requirements drift, data drift, model drift, or concept drift.
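Data drift, one of the changes named above, can be monitored with a simple statistical check. The sketch below is not from the article. It assumes a single numeric feature and uses the Population Stability Index (PSI), one common drift statistic. The function name `population_stability_index`, the 10-bin equal-width histogram, and the sample data are all hypothetical choices made for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare a baseline sample with a newer sample of the same feature.

    Returns 0.0 when the binned distributions match; larger values
    suggest a larger shift (possible data drift).
    """
    # Shared equal-width bins spanning both samples (an assumption; quantile bins are also common).
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against all-identical values

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        eps = 1e-6  # floor for empty bins so log() stays defined
        return [max(c / len(values), eps) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    # Each term (q - p) * ln(q / p) is non-negative, so PSI >= 0.
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: a baseline feature and a later sample that has shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

Comparing the baseline with itself yields zero, while the shifted sample yields a clearly positive score. In practice the alert threshold, the binning scheme, and which features to monitor would be business decisions.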
