Amazon Redshift announcements at AWS re:Invent 2023 to enable analytics on all your data

AWS Big Data

NOVEMBER 29, 2023

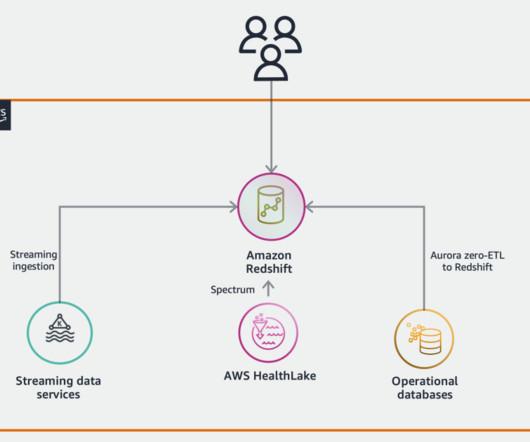

Amazon Redshift was a significant leap from traditional data warehousing solutions, which were expensive and inelastic and required significant expertise to tune and operate. With auto-mounted AWS Glue data catalogs, you can access your data lake tables from Amazon Redshift in one click, for a simplified experience.
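As a rough illustration of what querying an auto-mounted catalog might look like, the sketch below builds a query that uses three-part notation (catalog, Glue database, table). It assumes the auto-mounted catalog is exposed under the name `awsdatacatalog`. The function `build_data_lake_query` and the names `sales_lake` and `orders` are hypothetical and serve only as an example. The code only assembles the SQL text and does not connect to Amazon Redshift.

```python
import re

# Assumption: the auto-mounted AWS Glue Data Catalog appears in Redshift
# under this database name.
AUTO_MOUNTED_CATALOG = "awsdatacatalog"

# Accept only plain identifiers so the generated SQL stays predictable.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def build_data_lake_query(glue_database: str, table: str, limit: int = 10) -> str:
    """Return a SELECT statement addressing a data lake table by three-part name.

    Hypothetical helper for illustration; it builds a string and runs nothing.
    """
    for name in (glue_database, table):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"not a plain identifier: {name!r}")
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return (
        f"SELECT * FROM {AUTO_MOUNTED_CATALOG}.{glue_database}.{table} "
        f"LIMIT {limit};"
    )


if __name__ == "__main__":
    # "sales_lake" and "orders" are made-up names.
    print(build_data_lake_query("sales_lake", "orders", limit=5))
```

The resulting statement could then be pasted into a query editor or passed to whichever SQL client you already use.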
