Reference guide to build inventory management and forecasting solutions on AWS

AWS Big Data

APRIL 11, 2023

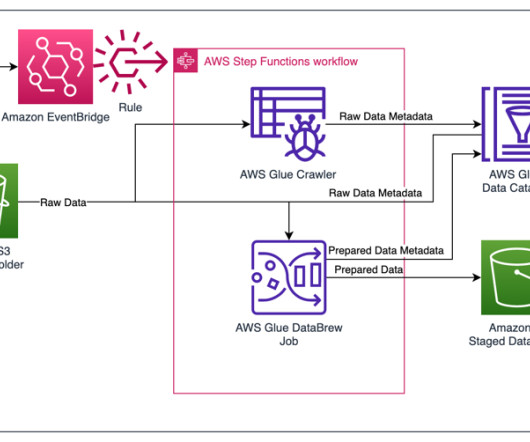

By collecting data from store sensors using AWS IoT Core , ingesting it using AWS Lambda to Amazon Aurora Serverless , and transforming it using AWS Glue from a database to an Amazon Simple Storage Service (Amazon S3) data lake, retailers can gain deep insights into their inventory and customer behavior.

Let's personalize your content