The Ten Standard Tools To Develop Data Pipelines In Microsoft Azure

DataKitchen

JULY 27, 2023

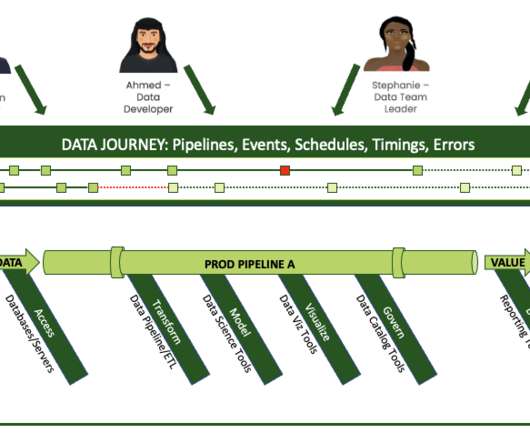

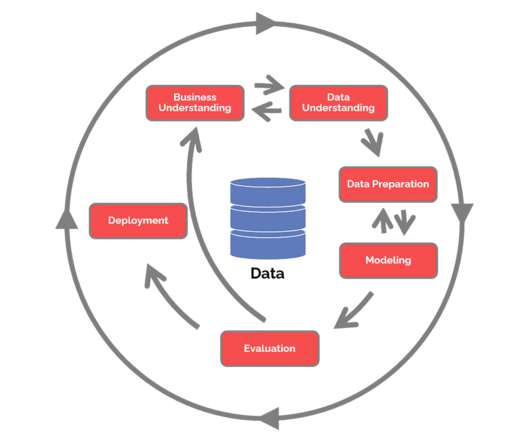

Here are a few examples we have seen of how this can be done:

Batch ETL with Azure Data Factory and Azure Databricks: In this pattern, Azure Data Factory orchestrates and schedules the batch ETL processes, and Azure Blob Storage serves as the data lake that stores the raw data. [...] Azure Machine Learning).
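To make the batch ETL pattern more concrete, here is a minimal sketch in Python that builds the kind of JSON definitions Azure Data Factory works with: a pipeline containing one Databricks notebook activity, plus a daily schedule trigger. The overall shape follows the Data Factory JSON format, but every name here (`BatchEtlPipeline`, `TransformRawData`, `AzureDatabricksLinkedService`, `DailyBatchTrigger`, the notebook path, and the `raw_container` parameter) is a hypothetical placeholder rather than anything from the original article. The sketch only builds dictionaries and does not call Azure, so check it against the current Data Factory documentation before deploying anything.

```python
import json


def build_batch_etl_pipeline(notebook_path: str, raw_container: str) -> dict:
    """Build an ADF-style pipeline definition with one Databricks notebook activity.

    All names are hypothetical placeholders chosen for illustration.
    """
    return {
        "name": "BatchEtlPipeline",
        "properties": {
            "activities": [
                {
                    "name": "TransformRawData",
                    "type": "DatabricksNotebook",
                    # Reference to a Databricks linked service assumed to exist.
                    "linkedServiceName": {
                        "referenceName": "AzureDatabricksLinkedService",
                        "type": "LinkedServiceReference",
                    },
                    "typeProperties": {
                        "notebookPath": notebook_path,
                        # Tell the notebook which Blob Storage container holds raw data.
                        "baseParameters": {"raw_container": raw_container},
                    },
                }
            ]
        },
    }


def build_daily_trigger(pipeline_name: str, start_time: str) -> dict:
    """Build a schedule trigger definition that runs the named pipeline once a day."""
    return {
        "name": "DailyBatchTrigger",
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    pipeline = build_batch_etl_pipeline("/etl/transform_raw", "raw")
    trigger = build_daily_trigger(pipeline["name"], "2023-07-27T00:00:00Z")
    print(json.dumps({"pipeline": pipeline, "trigger": trigger}, indent=2))
```

Keeping the definitions as plain dictionaries makes them easy to review and version before they are handed to whatever deployment mechanism a team uses.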
