Use AWS Glue ETL to perform merge, partition evolution, and schema evolution on Apache Iceberg

AWS Big Data

MARCH 4, 2024

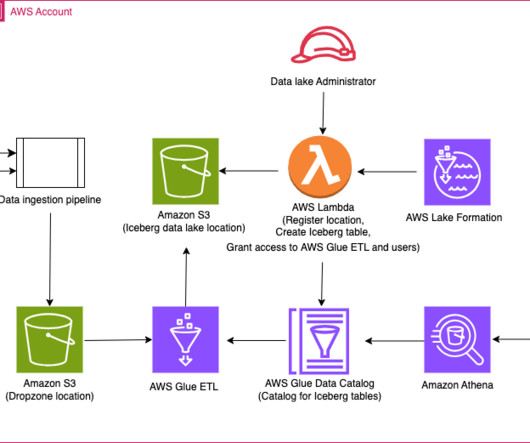

Apache Iceberg handles schema changes in a backward-compatible way through its metadata-based table evolution architecture. Because security requirements differ between organizations, they need to manage fine-grained access control for their analysts through AWS Lake Formation.
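As a rough illustration of the three operations named in the title, the sketch below builds the kind of Spark SQL statements an AWS Glue ETL job might pass to `spark.sql(...)` against an Iceberg table. It only constructs and prints the statements, so it runs without a Spark session. The catalog, database, table, and column names are hypothetical and are not taken from the original post, and the partition statement assumes the Iceberg Spark SQL extensions are enabled in the job.

```python
# Minimal sketch: SQL text for schema evolution, partition evolution, and merge
# on an Iceberg table. All identifiers below are hypothetical placeholders.
TABLE = "glue_catalog.sales_db.orders"
UPDATES = "glue_catalog.sales_db.orders_updates"


def add_column_sql(table: str, column: str, col_type: str) -> str:
    """Schema evolution: adding a column is a metadata change in Iceberg."""
    return f"ALTER TABLE {table} ADD COLUMNS ({column} {col_type})"


def add_partition_field_sql(table: str, transform: str) -> str:
    """Partition evolution: add a partition field to the table's spec.

    Assumes the Iceberg Spark SQL extensions are configured for the session.
    """
    return f"ALTER TABLE {table} ADD PARTITION FIELD {transform}"


def merge_sql(target: str, source: str, key: str) -> str:
    """Merge (upsert): update rows that match on `key`, insert the rest."""
    return (
        f"MERGE INTO {target} AS t USING {source} AS s "
        f"ON t.{key} = s.{key} "
        "WHEN MATCHED THEN UPDATE SET * "
        "WHEN NOT MATCHED THEN INSERT *"
    )


statements = [
    add_column_sql(TABLE, "discount_code", "string"),
    add_partition_field_sql(TABLE, "bucket(16, customer_id)"),
    merge_sql(TABLE, UPDATES, "order_id"),
]

for stmt in statements:
    # In a Glue job this would be spark.sql(stmt); here we only print the text.
    print(stmt)
```

Keeping the statements as plain strings makes them easy to review before they touch a table; in a real job each one would run through the Spark session that the Glue job configures for Iceberg.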
