Top 3 Trends in Data Infrastructure for 2021

Get your data engineering function ahead of the curve with orchestration platforms, data discovery engines, and data lakehouses.

Jan 2021 · 3 min read

Related

blog

What Mature Data Infrastructure Looks Like

Unlocking the value of data in an organization starts with having the right data infrastructure and tooling foundations. Here’s a look at the current state and future trends of data infrastructure.

Kenneth Leung

6 min

blog

14 Essential Data Engineering Tools to Use in 2024

Learn about the top tools for containerization, infrastructure as code (IaC), workflow management, data warehousing, analytical engineering, batch processing, and data streaming.

Abid Ali Awan

10 min

blog

An Introduction to Data Orchestration: Process and Benefits

Find out everything you need to know about data orchestration, from benefits to key components and the best data orchestration tools.

Srujana Maddula

9 min

blog

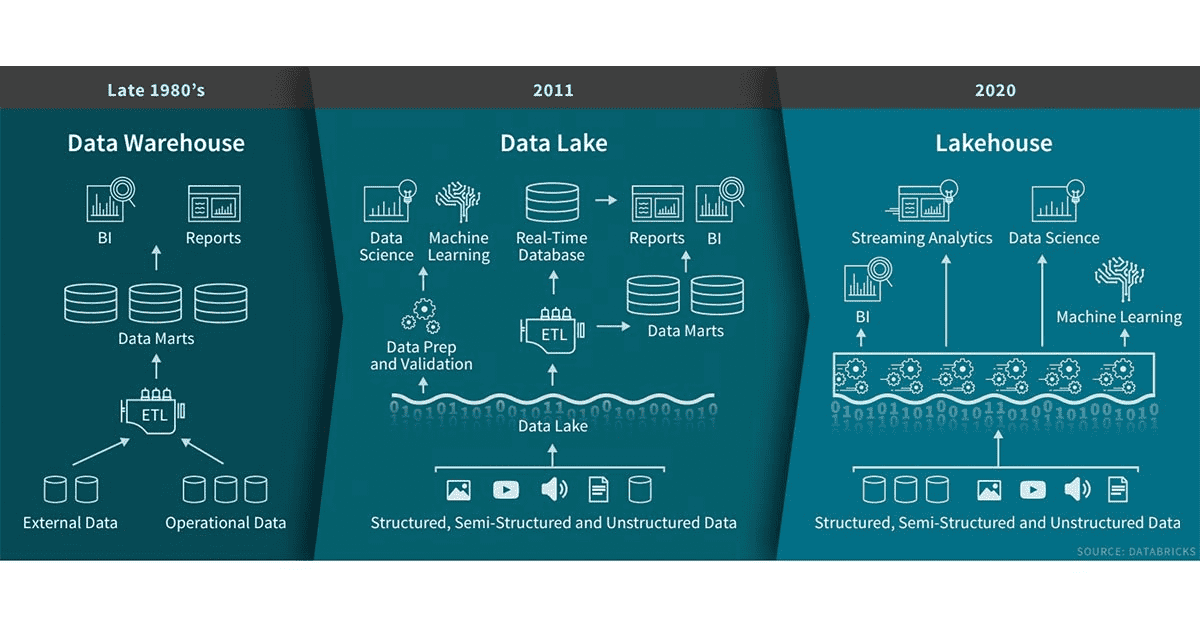

Data Lakes vs. Data Warehouses

Understand the differences between the two most popular options for storing big data.

DataCamp Team

4 min

blog

How to Build Adaptive Data Pipelines for Future-Proof Analytics

Leverage data warehousing techniques combined with business logic to build a scalable and sustainable approach to data analytics.

Sanjana Putchala

10 min

blog

Cloud Computing and Architecture for Data Scientists

Discover how data scientists use the cloud to deploy data science solutions to production or to expand computing power.

Alex Castrounis

13 min