10 Best Big Data Analytics Tools You Need To Know in 2023

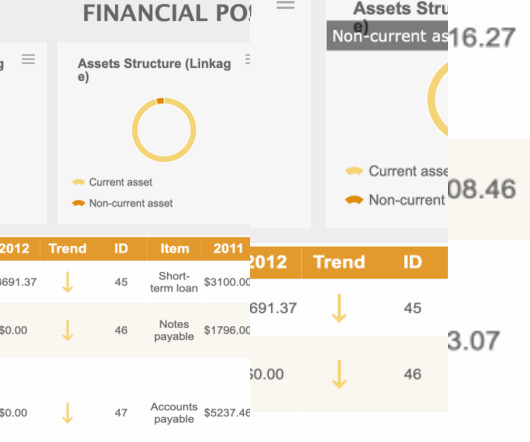

FineReport

APRIL 26, 2023

Apache Hadoop

Apache Hadoop is an open-source, Java-based framework for storing and processing big data. It runs on a cluster of machines. The Hadoop Distributed File System (HDFS) splits data into blocks and stores them across the nodes, and the processing work is divided into tasks that run in parallel on those nodes. Hadoop handles both structured and unstructured data and scales from a single server to many machines. Because it runs on the Java Virtual Machine, it works across platforms.
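Hadoop's parallel processing follows the MapReduce model. Mappers run independently on separate slices of the input, the framework groups their output by key (the "shuffle"), and reducers combine each group. The sketch below simulates that map, shuffle, and reduce flow for a word count in plain Python on a single machine. It is only an illustration of the model and does not use Hadoop's real Java API. The function names are hypothetical, and the thread pool merely stands in for cluster nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Tuple


def map_phase(split: str) -> List[Tuple[str, int]]:
    """Hypothetical mapper: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle_phase(mapped: Iterable[List[Tuple[str, int]]]) -> Dict[str, List[int]]:
    """Group every emitted value by its key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Hypothetical reducer: combine all values collected for one key."""
    return key, sum(values)


def word_count(splits: List[str], workers: int = 4) -> Dict[str, int]:
    # Run one map task per split concurrently; threads stand in for cluster nodes.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
    grouped = shuffle_phase(mapped)
    # Reduce each key's group independently (these could also run in parallel).
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    splits = ["big data big clusters", "data moves to compute", "Big wins"]
    print(word_count(splits))
    # {'big': 3, 'data': 2, 'clusters': 1, 'moves': 1, 'to': 1, 'compute': 1, 'wins': 1}
```

Each mapper needs only its own split, so adding splits (and machines) lets the map stage scale out. Only the shuffle step has to move data between nodes.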
