ML internals: Synthetic Minority Oversampling (SMOTE) Technique

Domino Data Lab

MAY 20, 2021

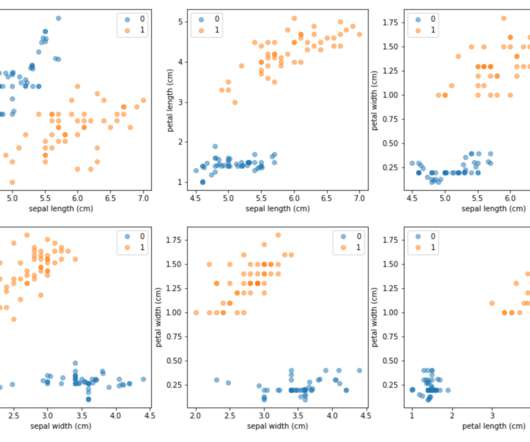

Insufficient training data in the minority class: in domains where data collection is expensive, a dataset containing 10,000 examples is typically considered fairly large. In their 2002 paper, Chawla et al. propose a different strategy, in which the minority class is over-sampled by generating synthetic examples rather than by over-sampling with replacement.
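To make the idea of generating synthetic minority examples concrete, here is a minimal sketch of the interpolation step: pick a minority example, pick one of its nearest minority-class neighbours, and place a new point at a random position on the line segment between them. The function name `smote_sketch`, its parameters `k` and `seed`, and the toy data are assumptions made for illustration. Choosing the base example at random is also a simplification and not the exact procedure from the paper.

```python
import math
import random


def smote_sketch(minority, n_synthetic, k=5, seed=0):
    """Generate n_synthetic points by interpolating between minority neighbours.

    Illustrative only: hypothetical helper, not a library API.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority examples to interpolate")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbours than other points
    synthetic = []
    for _ in range(n_synthetic):
        # Pick a base minority example at random (a simplification).
        i = rng.randrange(len(minority))
        base = minority[i]
        # Rank the other minority examples by Euclidean distance to the base.
        others = [p for j, p in enumerate(minority) if j != i]
        others.sort(key=lambda p: math.dist(base, p))
        # Choose one of the k nearest neighbours.
        neighbour = rng.choice(others[:k])
        # Place the new point at a random position on the segment base -> neighbour.
        gap = rng.random()
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy minority class with two features (made-up values).
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]]
new_points = smote_sketch(minority, n_synthetic=6, k=2)
```

Because each synthetic point is a convex combination of two existing minority examples, it always falls inside the region already spanned by the minority class and is not an exact duplicate of an existing row.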
