Transforming Big Data into Actionable Intelligence

Sisense

MARCH 14, 2021

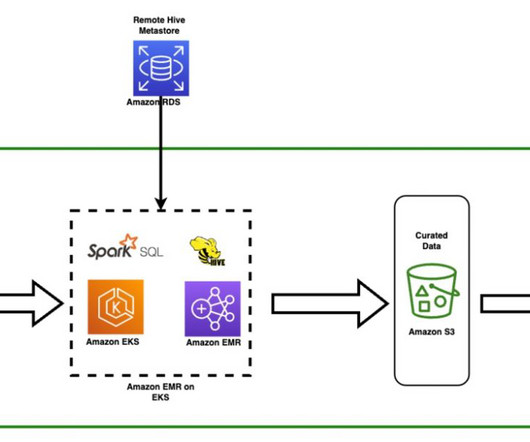

As the diagram shows, Business Intelligence (BI) is a collection of analytical methods that identify patterns in large volumes of data and turn them into actionable intelligence. Moving from right to left in the diagram, from big data to BI, unstructured data is transformed into structured data.
