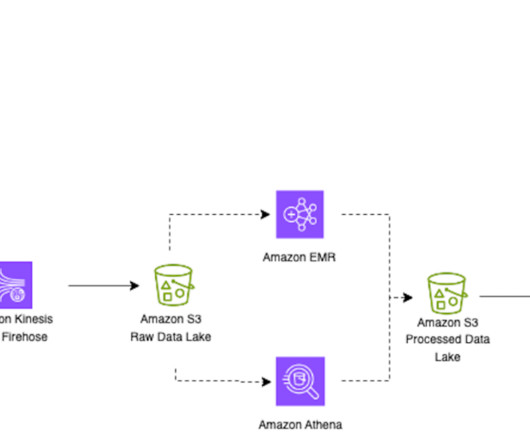

Use Apache Iceberg in your data lake with Amazon S3, AWS Glue, and Snowflake

AWS Big Data

APRIL 3, 2024

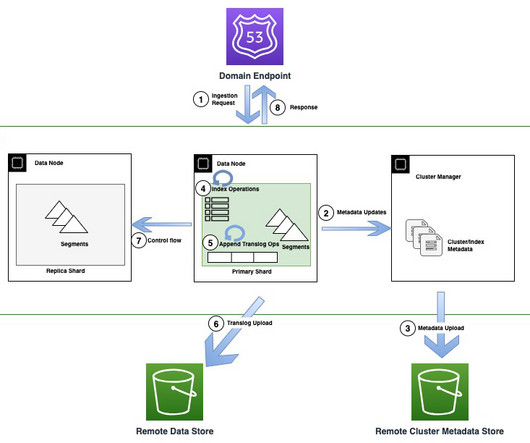

Iceberg tables maintain metadata to abstract large collections of files, providing data management features including time travel, rollback, data compaction, and full schema evolution, reducing management overhead. Snowflake integrates with AWS Glue Data Catalog to retrieve the snapshot location.

Let's personalize your content