AI at Scale isn’t Magic, it’s Data – Hybrid Data

Cloudera

OCTOBER 11, 2022

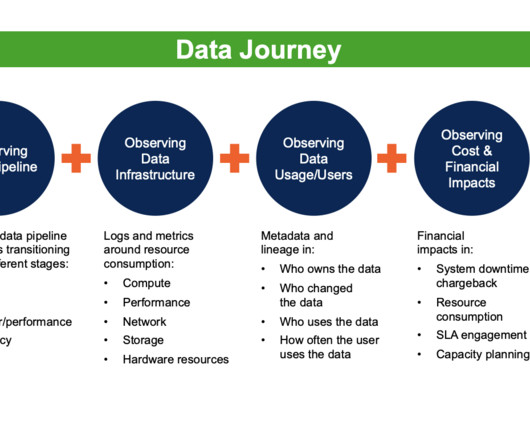

AI needs machine learning (ML). ML needs data science. Data science needs analytics. And they all need lots of data. The takeaway: businesses need control over all their data in order to achieve AI at scale and digital business transformation. Doing data at scale requires a data platform.
