Secure cloud fabric: Enhancing data management and AI development for the federal government

CIO Business Intelligence

DECEMBER 19, 2023

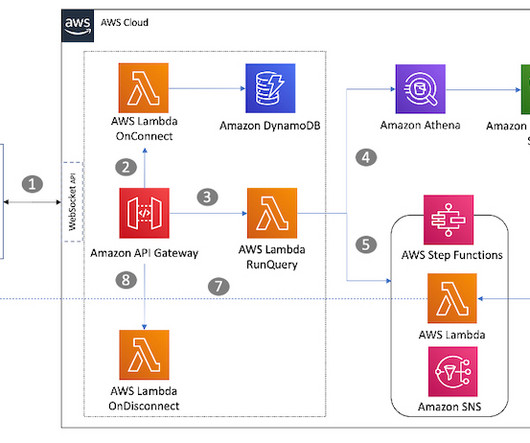

However, establishing and maintaining such connections can be a complex and costly process, especially as the volume of data being transmitted continues to grow. Similarly, connecting to data lakes presents both privacy and security concerns.

Let's personalize your content