Explaining black-box models using attribute importance, PDPs, and LIME

Domino Data Lab

AUGUST 1, 2021

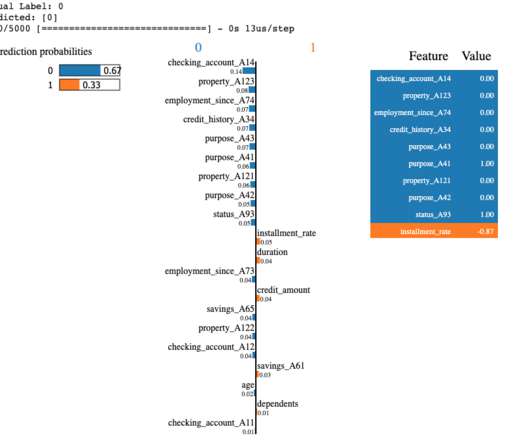

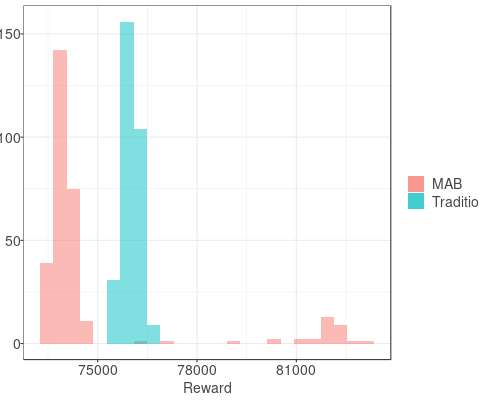

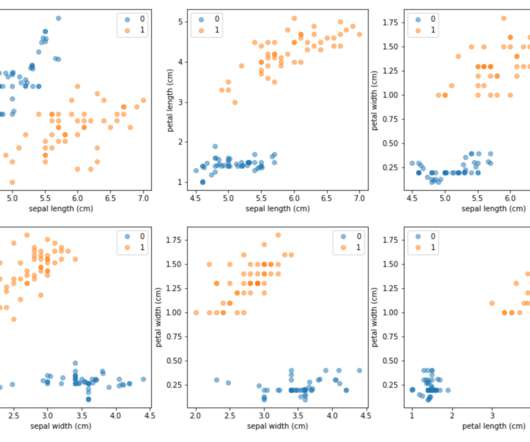

In this article we cover explainability for black-box models and show how to use several methods from the Skater framework to gain insight into the inner workings of a simple credit-scoring neural network model. Interest in the interpretation of machine learning models has accelerated rapidly over the last decade (see Ribeiro et al.).
