Measuring Validity and Reliability of Human Ratings

The Unofficial Google Data Science Blog

JULY 18, 2023

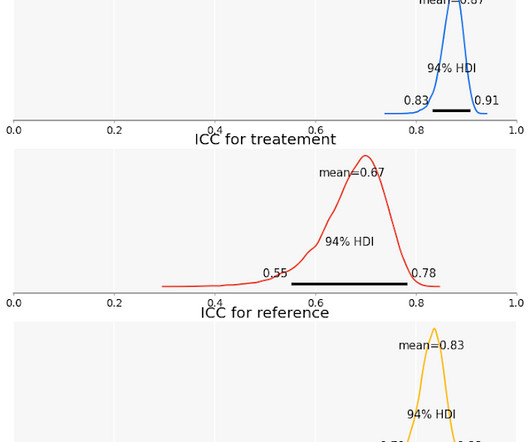

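Throughout, we’ll refer to our model-derived measurement of inter-rater reliability as the Intraclass Correlation Coefficient (ICC).

Consider two raters who label items at random. If each independently applies the label with probability ⅙, their raw agreement will be (⅚ * ⅚ + ⅙ * ⅙) = 72%, because they agree whenever both skip the label or both apply it. If instead they roll two dice and apply the label only when the dice sum to 12 (probability 1/36), they will agree about 95% of the time, purely by chance.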
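The chance-agreement arithmetic for random raters can be checked with a short sketch. It assumes two independent raters who each apply the label with the same probability p, so they agree with probability p² + (1 − p)². The helper names `chance_agreement` and `dice_label_probability` are hypothetical. Treating the ⅙ case as "a single die shows a 6" is our illustrative framing; only the two-dice rule comes from the text.

```python
from fractions import Fraction
from itertools import product


def chance_agreement(p: Fraction) -> Fraction:
    """Probability that two independent raters agree when each applies
    the label with probability p: both label (p * p) or both skip it
    ((1 - p) * (1 - p))."""
    return p * p + (1 - p) * (1 - p)


def dice_label_probability(num_dice: int, target_sum: int) -> Fraction:
    """Hypothetical helper: probability that num_dice fair six-sided dice
    sum to target_sum, found by enumerating all 6**num_dice outcomes."""
    outcomes = list(product(range(1, 7), repeat=num_dice))
    hits = sum(1 for roll in outcomes if sum(roll) == target_sum)
    return Fraction(hits, len(outcomes))


# Assumed framing: one die, label when it shows a 6 (p = 1/6).
one_die = chance_agreement(dice_label_probability(1, 6))
# From the text: two dice, label when they sum to 12 (p = 1/36).
two_dice = chance_agreement(dice_label_probability(2, 12))

print(one_die, f"{float(one_die):.0%}")    # 13/18 72%
print(two_dice, f"{float(two_dice):.0%}")  # 613/648 95%
```

Using `Fraction` keeps the results exact (26/36 reduces to 13/18, and 1226/1296 to 613/648), so the rounded percentages can be traced back to exact probabilities. The rarer the label, the closer chance agreement gets to 100%, which is why raw agreement alone says little about reliability.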
