Data Visualization Inspiration: Analysis To Insights To Action, Faster!

Occam's Razor

NOVEMBER 18, 2014

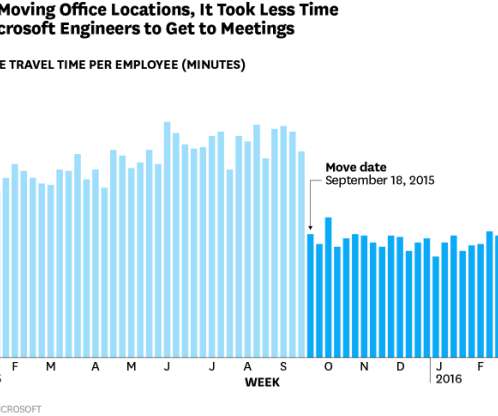

Like the vast majority of people on planet Earth, I love data visualizations. A day-to-day manifestation of this love is on my Google+ and Facebook profiles, where 75% of my posts share my quick analysis of, and learnings from, a visualization. Data visualized is data understood, and for a visual person like me, that is the aha moment.