Why you should care about debugging machine learning models

O'Reilly on Data

DECEMBER 12, 2019

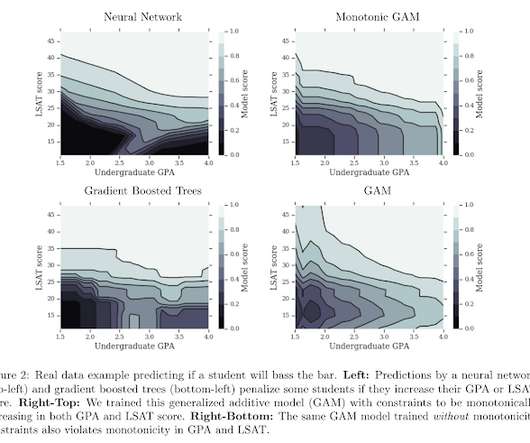

This includes C-suite executives, front-line data scientists, and risk, legal, and compliance personnel. These recommendations are based on our experience, both as a data scientist and as a lawyer, focused on managing the risks of deploying ML. That’s where model debugging comes in. Sensitivity analysis.
