How smava makes loans transparent and affordable using Amazon Redshift Serverless

AWS Big Data

DECEMBER 21, 2023

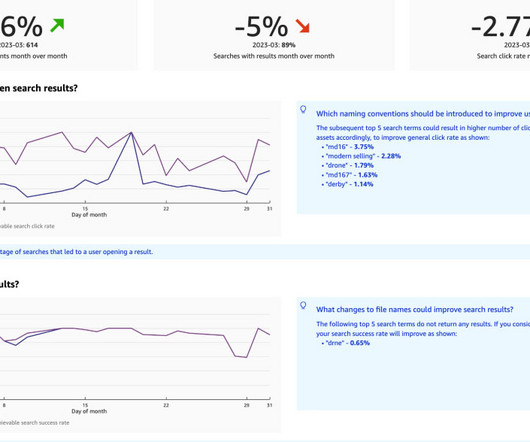

The data volume is in double-digit TBs with steady growth as business and data sources evolve. smava’s Data Platform team faced the challenge to deliver data to stakeholders with different SLAs, while maintaining the flexibility to scale up and down while staying cost-efficient.

Let's personalize your content