How to Manage Risk with Modern Data Architectures

Cloudera

JUNE 29, 2023

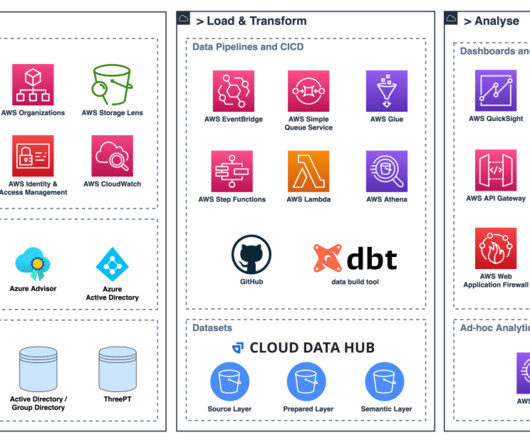

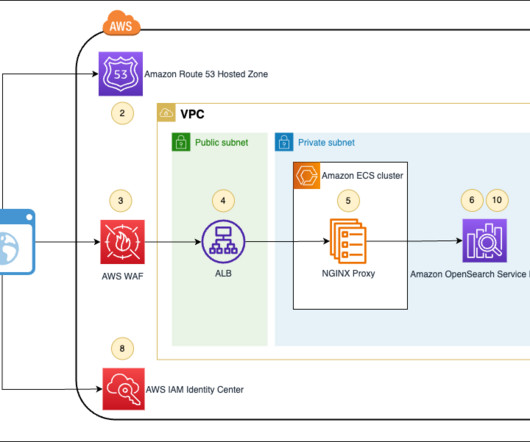

To improve how they model and manage risk, financial institutions must modernize their data management and governance practices. A modern data architecture lets them break down legacy data silos, which simplifies data management, governance, and integration while driving down costs.
