Apache Kafka and the Denodo Platform: Distributed Event Streaming Meets Logical Data Integration

Data Virtualization

OCTOBER 27, 2023

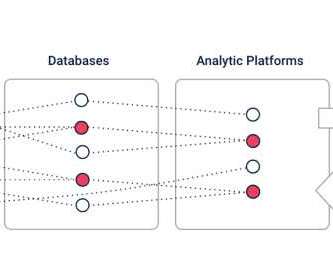

Kafka is typically used where real-time data streaming, event-driven architectures, and scalable data processing are essential.
