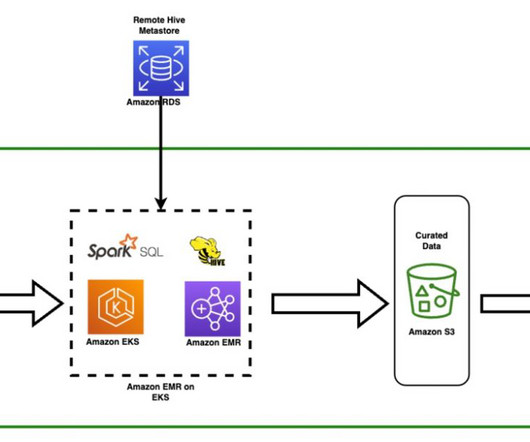

Run Apache Hive workloads using Spark SQL with Amazon EMR on EKS

AWS Big Data

OCTOBER 18, 2023

Spark SQL is an Apache Spark module for structured data processing. They use various AWS analytics services, such as Amazon EMR, to enable their analysts and data scientists to apply advanced analytics techniques to interactively develop and test new surveillance patterns and improve investor protection.
