How Amazon Devices scaled and optimized real-time demand and supply forecasts using serverless analytics

AWS Big Data

FEBRUARY 1, 2023

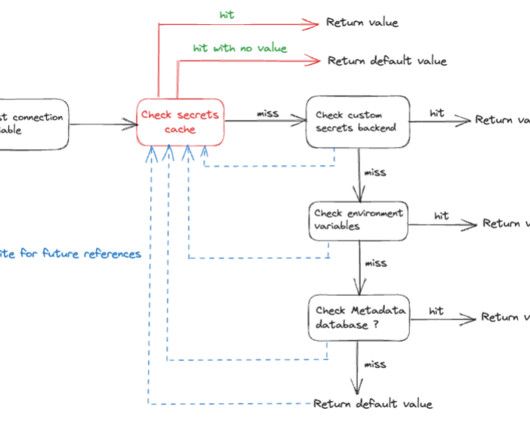

To further optimize and improve the developer velocity for our data consumers, we added Amazon DynamoDB as a metadata store for different data sources landing in the data lake.

S3 bucket as landing zone

We used an S3 bucket as the immediate landing zone of the extracted data, which is further processed and optimized.
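As a rough illustration of how a landing-zone object and its metadata record might fit together, here is a minimal Python sketch. The post does not show its schema or naming, so the bucket name, table name, key layout, and attribute names below are all hypothetical. Only the final helper touches AWS, and it assumes `boto3` is installed and credentials are configured.

```python
from datetime import datetime, timezone

# Hypothetical names, not taken from the original post.
LANDING_BUCKET = "example-landing-zone"
METADATA_TABLE = "example-datalake-sources"


def landing_key(source: str, dataset: str, extracted_at: datetime, filename: str) -> str:
    """Build a date-partitioned S3 key for a freshly extracted file (assumed layout)."""
    return f"{source}/{dataset}/dt={extracted_at:%Y-%m-%d}/{filename}"


def metadata_item(source: str, dataset: str, extracted_at: datetime, key: str) -> dict:
    """Describe one landed object as a DynamoDB item in low-level attribute-value form."""
    return {
        "source": {"S": source},    # assumed partition key
        "dataset": {"S": dataset},  # assumed sort key
        "s3_uri": {"S": f"s3://{LANDING_BUCKET}/{key}"},
        "extracted_at": {"S": extracted_at.isoformat()},
    }


def register(item: dict) -> None:
    """Write the item to the metadata table; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the helpers above run without AWS access

    boto3.client("dynamodb").put_item(TableName=METADATA_TABLE, Item=item)


if __name__ == "__main__":
    ts = datetime(2023, 2, 1, tzinfo=timezone.utc)
    key = landing_key("erp", "orders", ts, "part-0000.parquet")
    print(metadata_item("erp", "orders", ts, key))
```

With a record like this, a consumer could look up where a given source's data lives by querying the table rather than listing bucket prefixes, which is one plausible reading of the developer-velocity benefit the post describes.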
