Accelerate HiveQL with Oozie to Spark SQL migration on Amazon EMR

AWS Big Data

APRIL 19, 2023

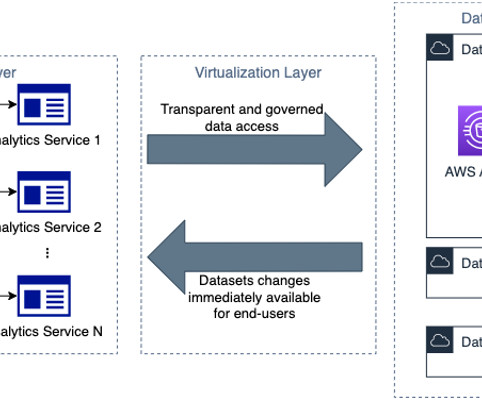

Many customers run big data workloads such as extract, transform, and load (ETL) on Apache Hive to create a data warehouse on Hadoop. When migrating these HiveQL workloads, orchestrated by Oozie, to Spark SQL on Amazon EMR, we can use automation to speed up the migration and reduce heavy lifting, costs, and risks. The migration script generates a metadata JSON file for each step.
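The post does not show the script itself. As a rough illustration only, the following minimal sketch assumes that each "step" corresponds to a Hive action in an Oozie `workflow.xml`. It walks the workflow definition and builds one metadata record per Hive action. The function names and the metadata fields (`step_name`, `action_type`, `script`, `params`, `target_engine`) are hypothetical and are not the schema used by the actual migration tooling.

```python
import json
import xml.etree.ElementTree as ET
from pathlib import Path


def local_name(tag: str) -> str:
    """Strip an XML namespace, e.g. '{uri:oozie:workflow:0.5}action' -> 'action'."""
    return tag.rsplit("}", 1)[-1]


def extract_hive_steps(workflow_xml: str) -> list[dict]:
    """Return one (hypothetical) metadata dict per Hive action in an Oozie workflow."""
    root = ET.fromstring(workflow_xml)
    steps = []
    for action in root:
        if local_name(action.tag) != "action":
            continue  # skip <start>, <kill>, <end>, and other control nodes
        for child in action:
            if local_name(child.tag) != "hive":
                continue  # only Hive actions are candidates for Spark SQL migration
            script, params = None, {}
            for elem in child:
                name = local_name(elem.tag)
                if name == "script":
                    script = (elem.text or "").strip()
                elif name == "param":
                    # Oozie Hive params are written as KEY=VALUE
                    key, _, value = (elem.text or "").strip().partition("=")
                    params[key] = value
            steps.append({
                "step_name": action.get("name"),
                "action_type": "hive",
                "script": script,
                "params": params,
                # Hypothetical field: the engine this step would be migrated to.
                "target_engine": "spark-sql",
            })
    return steps


def write_step_metadata(steps: list[dict], out_dir: str) -> list[Path]:
    """Write each step's metadata to its own JSON file and return the file paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for step in steps:
        path = out / f"{step['step_name']}.json"
        path.write_text(json.dumps(step, indent=2))
        paths.append(path)
    return paths


# Hypothetical example workflow with a single Hive action.
SAMPLE_WORKFLOW = """<workflow-app xmlns="uri:oozie:workflow:0.5" name="etl-wf">
  <start to="load_orders"/>
  <action name="load_orders">
    <hive xmlns="uri:oozie:hive-action:0.5">
      <job-tracker>${jobTracker}</job-tracker>
      <name-node>${nameNode}</name-node>
      <script>load_orders.hql</script>
      <param>RUN_DATE=2023-01-01</param>
    </hive>
    <ok to="end"/>
    <error to="fail"/>
  </action>
  <kill name="fail"><message>failed</message></kill>
  <end name="end"/>
</workflow-app>"""

if __name__ == "__main__":
    for step in extract_hive_steps(SAMPLE_WORKFLOW):
        print(json.dumps(step, indent=2))
```

Matching on local tag names keeps the sketch independent of the specific Oozie schema versions in the `xmlns` URIs. Calling `write_step_metadata(steps, "metadata/")` would then produce one JSON file per step, for example `metadata/load_orders.json`.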
