Implement data warehousing solution using dbt on Amazon Redshift

AWS Big Data

NOVEMBER 17, 2023

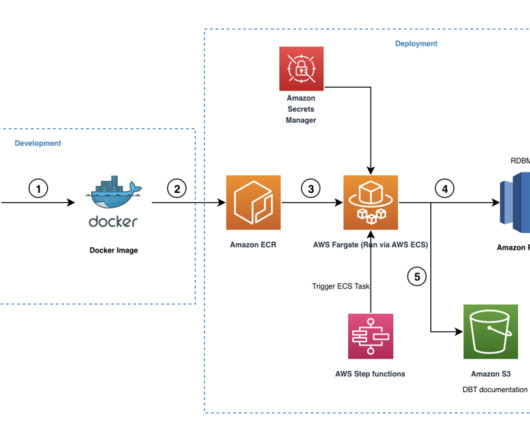

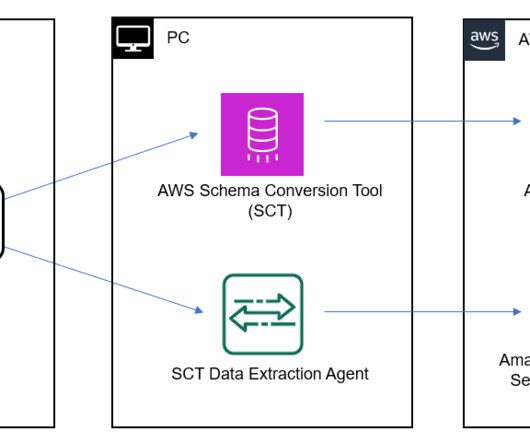

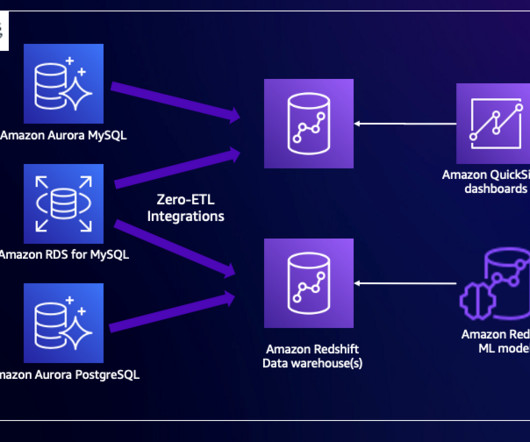

In this post, we look at an optimal and cost-effective way to incorporate dbt with Amazon Redshift. In an optimal environment, we store the credentials in AWS Secrets Manager and retrieve them from there. Snapshots – These implement type-2 slowly changing dimensions (SCDs) over mutable source tables.
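As a rough illustration of the credential step, the sketch below reads a JSON secret from AWS Secrets Manager with boto3 and copies its fields into environment variables that a dbt profile could read through `env_var()`. The secret layout (`host`, `port`, `username`, `password`, `dbname`), the `DBT_REDSHIFT_*` variable names, and both helper functions are assumptions made for this example and are not taken from the post.

```python
import json
import os


def load_redshift_credentials(secret_id, client=None):
    """Fetch a JSON-formatted secret and return it as a dict.

    `client` may be any object exposing get_secret_value(SecretId=...);
    when omitted, a real boto3 Secrets Manager client is created.
    """
    if client is None:
        import boto3  # imported lazily so a stub client can be used in tests
        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


def export_for_dbt(credentials, environ=os.environ):
    """Copy credentials into environment variables for dbt's env_var().

    The key names and variable names below are hypothetical; adjust them
    to match the actual secret and profiles.yml.
    """
    mapping = {
        "DBT_REDSHIFT_HOST": "host",
        "DBT_REDSHIFT_PORT": "port",
        "DBT_REDSHIFT_USER": "username",
        "DBT_REDSHIFT_PASSWORD": "password",
        "DBT_REDSHIFT_DBNAME": "dbname",
    }
    for env_name, key in mapping.items():
        environ[env_name] = str(credentials[key])
    return environ
```

A wrapper script could call `export_for_dbt(load_redshift_credentials("my/secret/id"))` before invoking `dbt run`, which keeps the password out of `profiles.yml`; the secret ID here is a placeholder.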
