AWS Glue Data Quality is Generally Available

AWS Big Data

JUNE 6, 2023

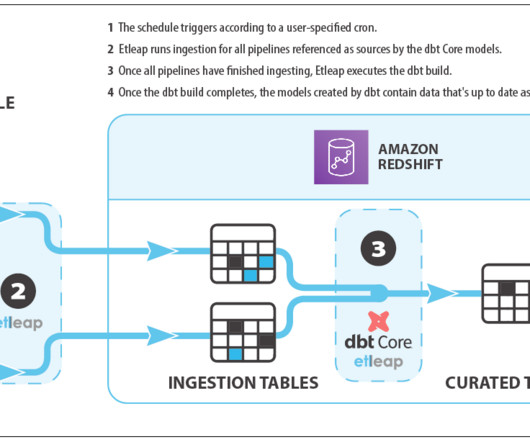

We are excited to announce the General Availability of AWS Glue Data Quality. Our journey started by working backward from our customers who create, manage, and operate data lakes and data warehouses for analytics and machine learning.
