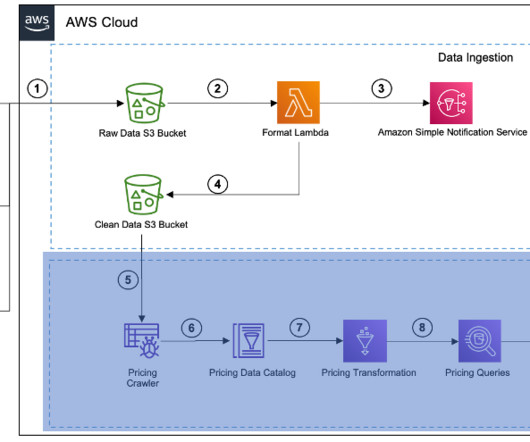

End-to-end development lifecycle for data engineers to build a data integration pipeline using AWS Glue

AWS Big Data

JULY 26, 2023

Many AWS customers have integrated their data across multiple data sources using AWS Glue , a serverless data integration service, in order to make data-driven business decisions. Are there recommended approaches to provisioning components for data integration?

Let's personalize your content