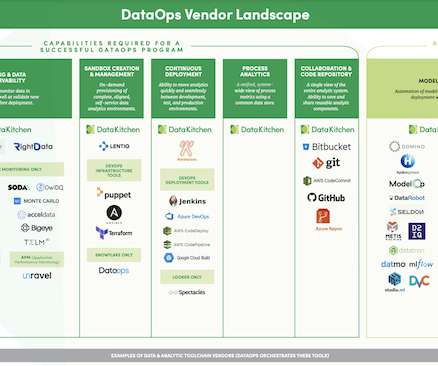

The DataOps Vendor Landscape, 2021

DataKitchen

APRIL 13, 2021

DataOps needs a directed graph-based workflow that contains all the data access, integration, model and visualization steps in the data analytic production process. It orchestrates complex pipelines, toolchains, and tests across teams, locations, and data centers. Meta-Orchestration .

Let's personalize your content